ROS2 Cameras#

Learning Objectives#

In this example, we will learn how to

Add additional cameras to the scene and onto the robot

Add camera publishers in Omnigraph

Add camera publishers using the menu shortcut

Send ground truth synthetic perception data through rostopics

Getting Started#

Important

Make sure to source your ROS 2 installation from the terminal before running Isaac Sim. If sourcing ROS 2 is a part of your bashrc then Isaac Sim can be run directly.

Prerequisite

Completed ROS and ROS 2 Installation: installed ROS2, enabled the ROS2 extension, built the provided Isaac Sim ROS2 workspace, and set up the necessary environment variables.

It is also helpful to have a basic understanding of ROS topics and how publishers and subscribers work.

Completed tutorial on OmniGraph and Add Camera and Sensors

Completed URDF Import: Turtlebot so that there is a Turtlebot ready on stage.

Note

In Windows 10 or 11, depending on your machine’s configuration, RViz2 may not open properly.

Camera Publisher#

Setting Up Cameras#

The default camera displayed in the Viewport is the Perspective camera. You can verify this with the Camera button in the top left-hand corner of the Viewport display. Click the Camera button and you will see a few other preset camera positions: Top, Front, and Right side views.

For the purpose of this tutorial, let’s add two stationary cameras, naming them Camera_1 and Camera_2, viewing the room from two different perspectives. The procedures for adding cameras to the stage can be found in Add Camera and Sensors.

You may want to open additional Viewports to see multiple camera views at the same time. To open an additional Viewport, go to Window > Viewport > Viewport 2, then select the desired camera view from the Cameras button in the upper left corner of the viewport.

Building the Graph for an RGB publisher#

Open Visual Scripting: Window > Visual Scripting > Action Graph.

Click on the New Action Graph icon in the middle of the Action Graph window, or on Edit Action Graph if you want to append the camera publisher to an existing action graph.

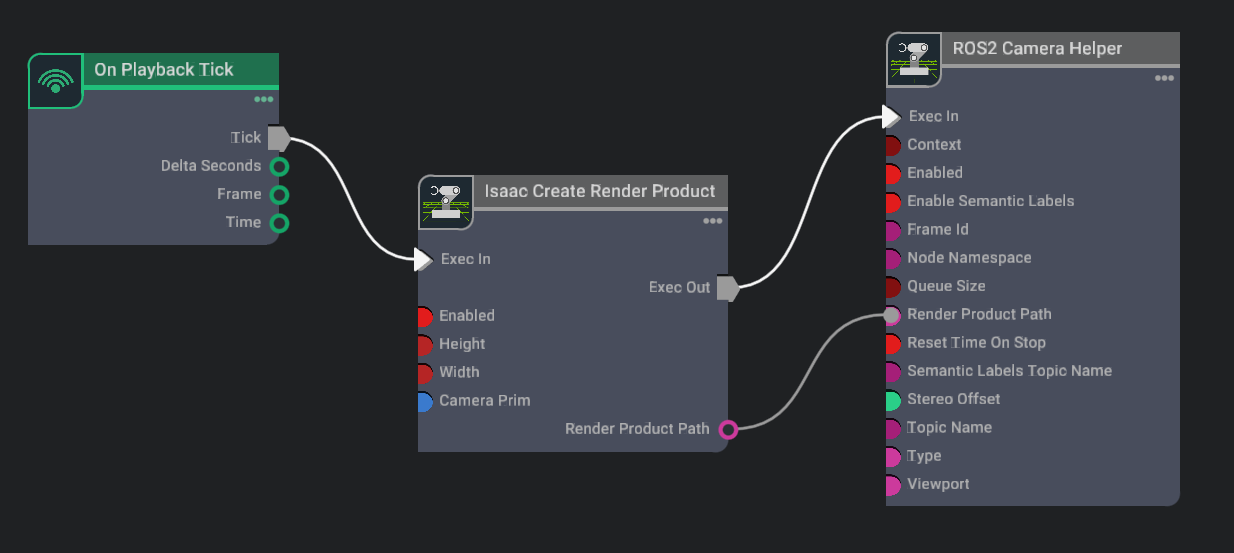

Build an Action Graph with the nodes and connections shown in the following image, and set the parameters using the table below.

Parameters:

| Node | Input Field | Value |
| --- | --- | --- |
| Isaac Create Render Product | cameraPrim | /World/Camera_1 |
| | enabled | True |
| ROS2 Camera Helper | type | rgb |
| | topicName | rgb |
| | frameId | turtle |

Ticking this graph will automatically create a new render product assigned to Camera_1.

Graph Explained#

On Playback Tick Node: Produces a tick when the simulation is “Playing”. Nodes that receive ticks from this node execute their compute functions every simulation step.

ROS2 Context Node: ROS2 uses DDS for its middleware communication. DDS uses a Domain ID to allow different logical networks to operate independently even though they share a physical network. ROS 2 nodes on the same domain can freely discover and send messages to each other, while ROS 2 nodes on different domains cannot. The ROS2 Context node creates a context with a given Domain ID, which is set to 0 by default. If Use Domain ID Env Var is checked, it imports the ROS_DOMAIN_ID from the environment in which you launched the current instance of Isaac Sim.

Isaac Create Render Product: Creates a render product prim that acquires the rendered data from the given camera prim and outputs the path to the render product prim. Rendering can be enabled or disabled on command by checking or unchecking the enabled field.

Isaac Run One Simulation Frame: This node makes sure the pipeline is only run once on start.

ROS2 Camera Helper: Indicates which type of data to publish and which rostopic to publish it on.
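The Domain ID selection described for the ROS2 Context node can be sketched in plain Python. This only illustrates the behavior described above and is not the node's actual implementation: the function name `resolve_domain_id` and its parameters are hypothetical, and falling back to the node's own value when `ROS_DOMAIN_ID` is unset or not a number is an assumption.

```python
import os


def resolve_domain_id(node_domain_id=0, use_env_var=False, env=None):
    """Return the Domain ID a context would use (hypothetical helper)."""
    # Read from the process environment unless a mapping is passed in.
    env = os.environ if env is None else env
    if use_env_var:
        raw = env.get("ROS_DOMAIN_ID")
        # Assumption: an unset or non-numeric value falls back to the node's field.
        if raw is not None and raw.strip().isdigit():
            return int(raw.strip())
    return node_domain_id


# The environment variable is only consulted when the checkbox is enabled.
print(resolve_domain_id(0, use_env_var=True, env={"ROS_DOMAIN_ID": "7"}))
print(resolve_domain_id(3, use_env_var=False, env={"ROS_DOMAIN_ID": "7"}))
```

Publishers in Isaac Sim and subscribers in your terminal only see each other when both sides resolve to the same Domain ID, so it is worth checking `ROS_DOMAIN_ID` in both environments if topics do not show up.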

Camera Helper Node

The Camera Helper Node abstracts a complex postprocessing network from the user. Once you press Play with a Camera Helper Node connected, a new entry may appear in the list of Action Graphs shown when you click the icon in the upper left corner of the Action Graph window: /Render/PostProcessing/SDGPipeline. This graph is created automatically by the Camera Helper Node. The pipeline retrieves the relevant data from the renderer, processes it, and sends it to the corresponding ROS publisher. This graph is only created in the session you are running. It will not be saved as part of your asset and will not appear in the Stage tree.

Depth and other Perception Ground Truth data#

In addition to RGB images, the following synthetic sensor and perception data are also available for any camera. To see the units used for each synthetic data annotator, refer to omni.replicator.

Depth

Point Cloud

Before publishing the following bounding boxes and labels, please go through the Isaac Sim Replicator Tutorials to learn how to semantically annotate scenes.

Note

If you would like to use the BoundingBox publisher nodes, which are dependent on vision_msgs, please ensure it is installed on the system or try Running ROS without a System Level Install.

BoundingBox 2D Tight

BoundingBox 2D Loose

BoundingBox 3D

Semantic labels

Instance Labels

Each Camera Helper node can only retrieve one type of data. You can indicate which type you wish to assign to the node in the dropdown menu for the field type in the Camera Helper Node’s Property tab.

Note

Once you have specified a type for a Camera Helper node and activated it (i.e. started the simulation so that the underlying SDGPipeline has been generated), you cannot change the type and reuse the node. You can either use a new node, or reload your stage and regenerate the SDGPipeline with the modified type.

An example of publishing multiple Rostopics for multiple cameras can be found in our asset Isaac/Samples/ROS2/Scenario/turtlebot_tutorial.usd.

Camera Info Helper Node#

The Camera Info Helper publisher node uses the following equations to calculate the K, P, and R camera matrices.

Parameter calculations:

fx = width * focalLength / horizontalAperture

fy = height * focalLength / verticalAperture

cx = width * 0.5

cy = height * 0.5

K Matrix (Matrix of intrinsic parameters)

The K matrix is a 3x3 row-major matrix.

K = { fx, 0, cx, 0, fy, cy, 0, 0, 1 }

P Matrix (Projection Matrix)

For stereo cameras, the stereo offsets of the second camera with respect to the first camera in x and y are denoted as Tx and Ty. These values are computed automatically if two render products are attached to the node.

For monocular cameras Tx = Ty = 0.

The P matrix is a 3x4 row-major matrix.

P = { fx, 0, cx, Tx, 0, fy, cy, Ty, 0, 0, 1, 0 }
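The parameter calculations and the K and P layouts above can be checked with a short Python sketch. The function name `camera_info_matrices` and the sample camera values are hypothetical and chosen only to give round numbers; the sketch assumes focalLength and the apertures are expressed in the same units, and it is not the node's actual implementation.

```python
def camera_info_matrices(width, height, focal_length,
                         horizontal_aperture, vertical_aperture,
                         tx=0.0, ty=0.0):
    """Return (K, P) as flat row-major lists, following the equations above."""
    fx = width * focal_length / horizontal_aperture
    fy = height * focal_length / vertical_aperture
    cx = width * 0.5
    cy = height * 0.5
    # K: 3x3 intrinsic matrix, row-major.
    k = [fx, 0.0, cx,
         0.0, fy, cy,
         0.0, 0.0, 1.0]
    # P: 3x4 projection matrix, row-major. Tx = Ty = 0 for a monocular camera.
    p = [fx, 0.0, cx, tx,
         0.0, fy, cy, ty,
         0.0, 0.0, 1.0, 0.0]
    return k, p


# Illustrative values only: a 1280x720 image, focal length 18,
# apertures 36 x 20.25 (same units as the focal length).
k, p = camera_info_matrices(1280, 720, 18.0, 36.0, 20.25)
print(k)  # [640.0, 0.0, 640.0, 0.0, 640.0, 360.0, 0.0, 0.0, 1.0]
```

Comparing these values against the k and p fields shown by ros2 topic echo on the camera info topic is a quick way to confirm that the camera prim's focal length and aperture are what you expect.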

R Matrix (Rectification Matrix)

The R matrix is a rotation matrix applied to align the camera coordinate system with the ideal stereo image plane, ensuring that epipolar lines in both stereo images become parallel. The R matrix is only used for stereo cameras and is set as a 3x3 matrix.

Graph Shortcut#

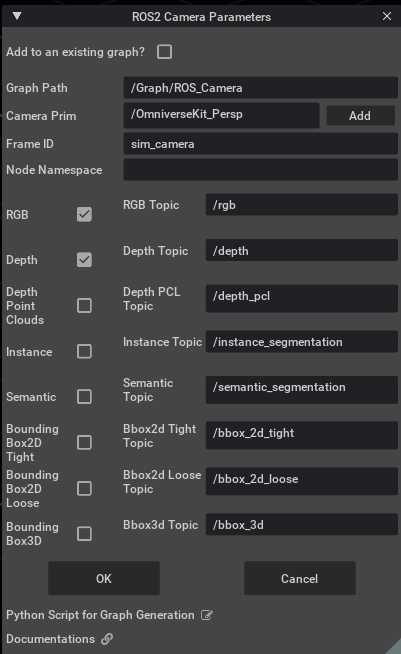

We provide a menu shortcut to build multiple camera sensor graphs with just a few clicks. Go to Isaac Utils > Common Omnigraphs > ROS2 Camera. (If you don’t see any ROS2 graphs listed, you need to enable the ROS2 bridge first.) A popup box will appear asking for the parameters needed to populate the graphs. You must provide the Graph Path, the Camera Prim, the frameId, and a Node Namespace if you have one, and check the boxes for the data you wish to publish. If you wish to add the nodes to an existing graph, check the “Add to an existing graph?” box. This appends the nodes to the existing graph and reuses the existing tick node, context node, and simulation time node if they exist.

Verifying ROS connection#

Use ros2 topic echo /<topic> to see the raw information that is being passed along.

We can visualize depth using the rqt_image_view method again: ros2 run rqt_image_view rqt_image_view /depth.

Note

In Windows 10 and 11, rqt_image_view is not available.

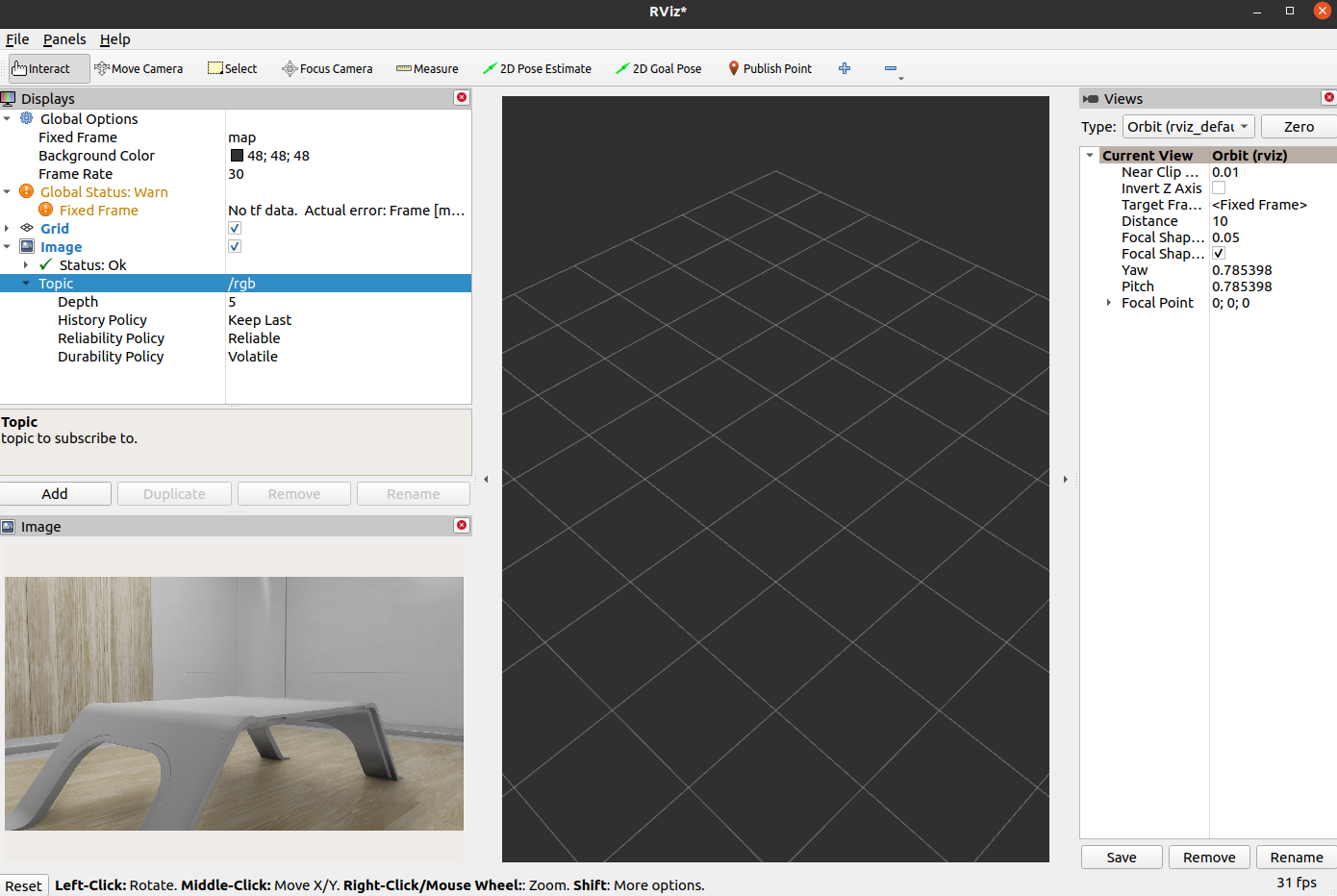

This time, we will check the published images in RViz2. In a ROS2-sourced terminal, run the command rviz2 to open RViz. Add an Image display type and set the topic to rgb.

Troubleshooting#

If your depth image only shows black and white sections, it is likely because some part of the field of view has “infinite” depth, which skews the contrast. Adjust your field of view so that the depth range in the image is limited.
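To see why a single “infinite” value flattens the contrast, consider how a viewer typically scales depth to grayscale. The sketch below is a different, subscriber-side workaround rather than the fix this tutorial recommends: it ignores non-finite values when choosing the scale and optionally clips to a maximum range. The function name `depth_to_grayscale` and the `max_range` parameter are hypothetical, and depth is represented as plain nested lists to keep the example self-contained.

```python
import math


def depth_to_grayscale(depth_rows, max_range=None):
    """Map rows of depth values to 0-255, scaling by finite values only."""
    finite = [d for row in depth_rows for d in row if math.isfinite(d)]
    if not finite:
        return [[0 for _ in row] for row in depth_rows]
    near = min(finite)
    far = max(finite)
    if max_range is not None:
        far = min(far, max_range)
    span = far - near
    image = []
    for row in depth_rows:
        out_row = []
        for d in row:
            # Non-finite or out-of-range depths are drawn as the far value.
            if not math.isfinite(d) or d > far:
                d = far
            out_row.append(0 if span <= 0 else round(255 * (d - near) / span))
        image.append(out_row)
    return image


depth = [[1.0, 2.0], [5.0, math.inf]]
print(depth_to_grayscale(depth))  # [[0, 64], [255, 255]]
```

If the scale were instead taken from the raw maximum, the infinite pixel would push every finite depth to the dark end of the range, which is the black-and-white image described above.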

Additional Publishing Options#

To publish images on demand or periodically at a specified rate, you will need to use Python scripting. Go to ROS2 Camera for examples.

Summary#

This tutorial covered how to publish camera and perception data in ROS2.

Next Steps#

Continue on to the next tutorial in our ROS2 Tutorials series, ROS2 Clock, to learn how to set up ROS2 Clock publishers and subscribers with Omniverse Isaac Sim.

Check out Publishing Camera’s Data to learn how to publish camera data through Python scripting.

Further Learning#

Additional information about synthetic data generation can be found in the Replicator Tutorial Series.

Examples of running a similar environment using the Standalone Python workflow are outlined here.