Using AI Agents with MCP

The RTX Remix Model Context Protocol (MCP) Server enables AI agents and Large Language Models (LLMs) to interact with the Toolkit. This allows for workflow automation, AI-powered feature integration, and Toolkit control through natural language commands.

See also

To jump straight into setting up an RTX Remix AI agent assistant, see the Connecting AI Agents to MCP section.

Background Information

What is MCP?

Model Context Protocol (MCP) is an open standard that provides a unified way for AI applications to interact with external tools and data sources. In the context of RTX Remix, MCP acts as a bridge between AI agents and the Toolkit’s functionality, exposing the REST API endpoints in a format that LLMs can understand and use through tool calling.

Key Benefits

Natural Language Control: Interact with the Toolkit using conversational commands

Automation: Create AI-powered workflows to automate repetitive tasks

Integration: Connect the Toolkit with AI platforms and custom agents

Standardization: Use a widely-adopted protocol supported by multiple AI frameworks

Finding the MCP Server Information

When the RTX Remix Toolkit starts, the MCP server automatically begins running with the following default configuration:

Protocol: Server-Sent Events (SSE)

Host: 127.0.0.1 or localhost

Port: 8000

Endpoint: http://127.0.0.1:8000/sse
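Before pointing a client at the server, it can help to confirm that something is listening on the default port. The following Python sketch uses only the standard library; the helper name `mcp_server_reachable` is hypothetical, and a successful TCP connection only shows that some process is listening on port 8000, not that it is the RTX Remix MCP server.

```python
import socket
from urllib.parse import urlparse

# Default SSE endpoint documented for the RTX Remix MCP server.
DEFAULT_SSE_URL = "http://127.0.0.1:8000/sse"


def mcp_server_reachable(url: str = DEFAULT_SSE_URL, timeout: float = 2.0) -> bool:
    """Return True if a TCP connection to the URL's host and port succeeds.

    Hypothetical helper: it proves only that something is listening on the
    port, not that the listener is the RTX Remix MCP server.
    """
    parsed = urlparse(url)
    host = parsed.hostname or "127.0.0.1"
    # Fall back to the scheme's default port if the URL does not specify one.
    port = parsed.port or (443 if parsed.scheme == "https" else 80)
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Connection refused, timed out, or host could not be resolved.
        return False


if __name__ == "__main__":
    print("MCP server port open:", mcp_server_reachable())
```

If this prints `False`, start (or restart) the RTX Remix Toolkit before configuring any client.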

Connecting AI Agents to MCP

Many frameworks allow AI agents to connect to the RTX Remix MCP Server. This guide walks through connecting an AI agent to the MCP Server with Langflow, followed by general steps for any MCP-compatible client.

Using Langflow

Langflow is a visual framework for building AI agents that supports MCP:

Important

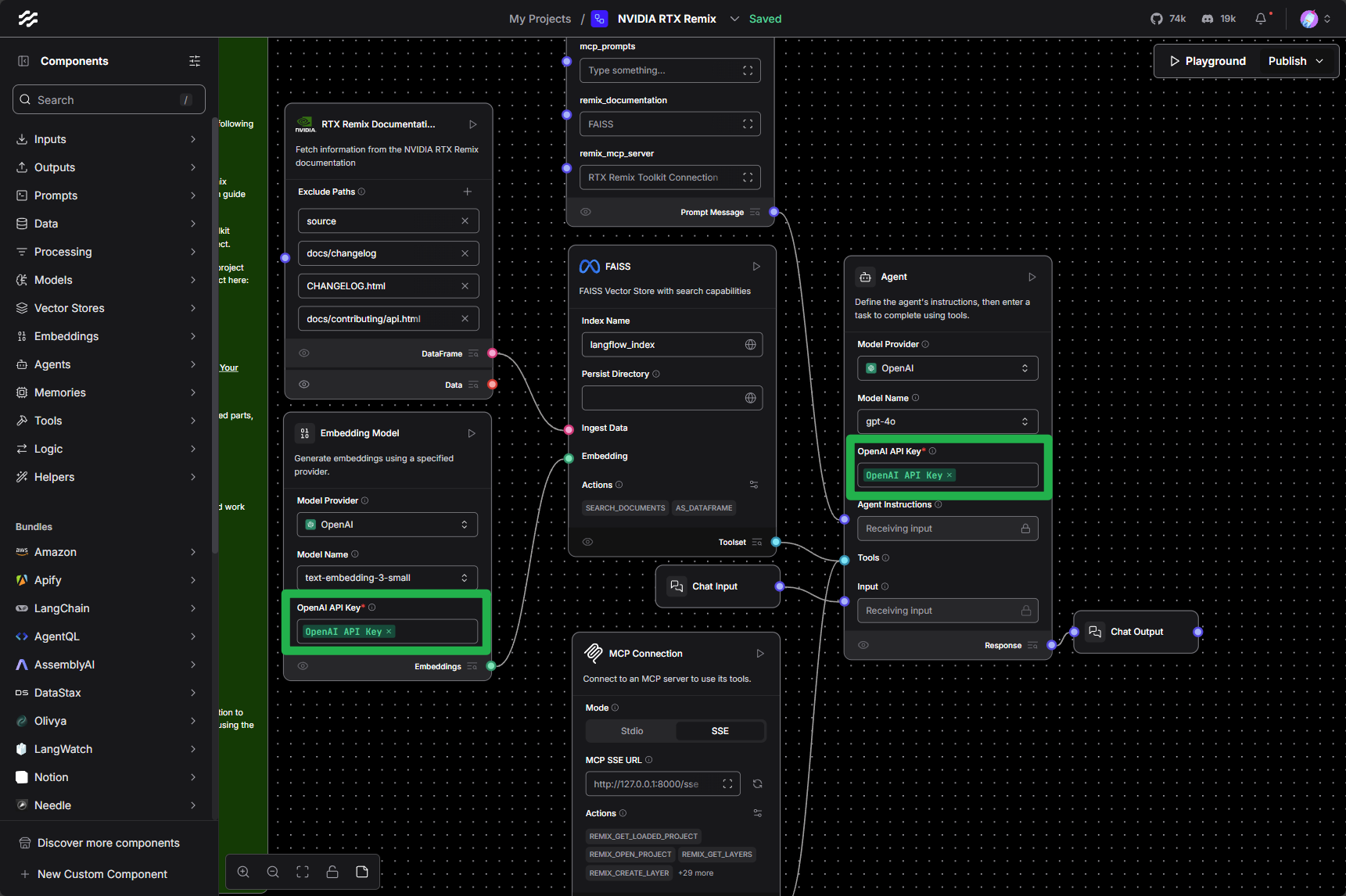

An OpenAI API key is required to use the NVIDIA RTX Remix Langflow template as-is; however, the template can be modified to use other LLM providers. See the Agent component documentation for more information on the Agent component.

Install Langflow by following the installation guide

Tip

The minimum required version of the Langflow Desktop application is 1.5.13. The minimum required version of the Langflow pip package is 1.5.0.post2.

Launch the RTX Remix Toolkit

Launch Langflow and create a new flow in Langflow

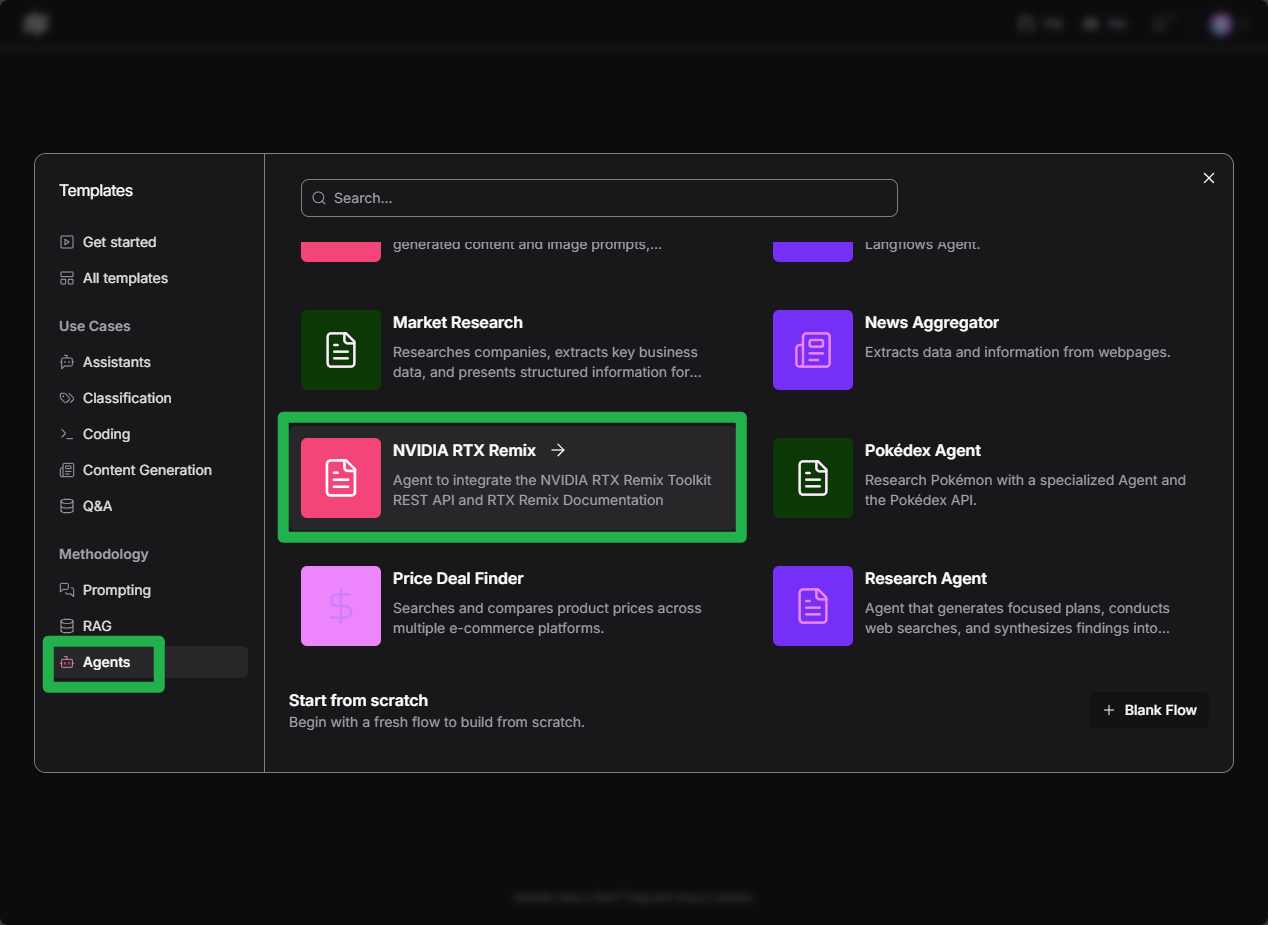

Select the NVIDIA RTX Remix template in the “Agents” section

Update the required API keys. Langflow’s environment variables can simplify this process:

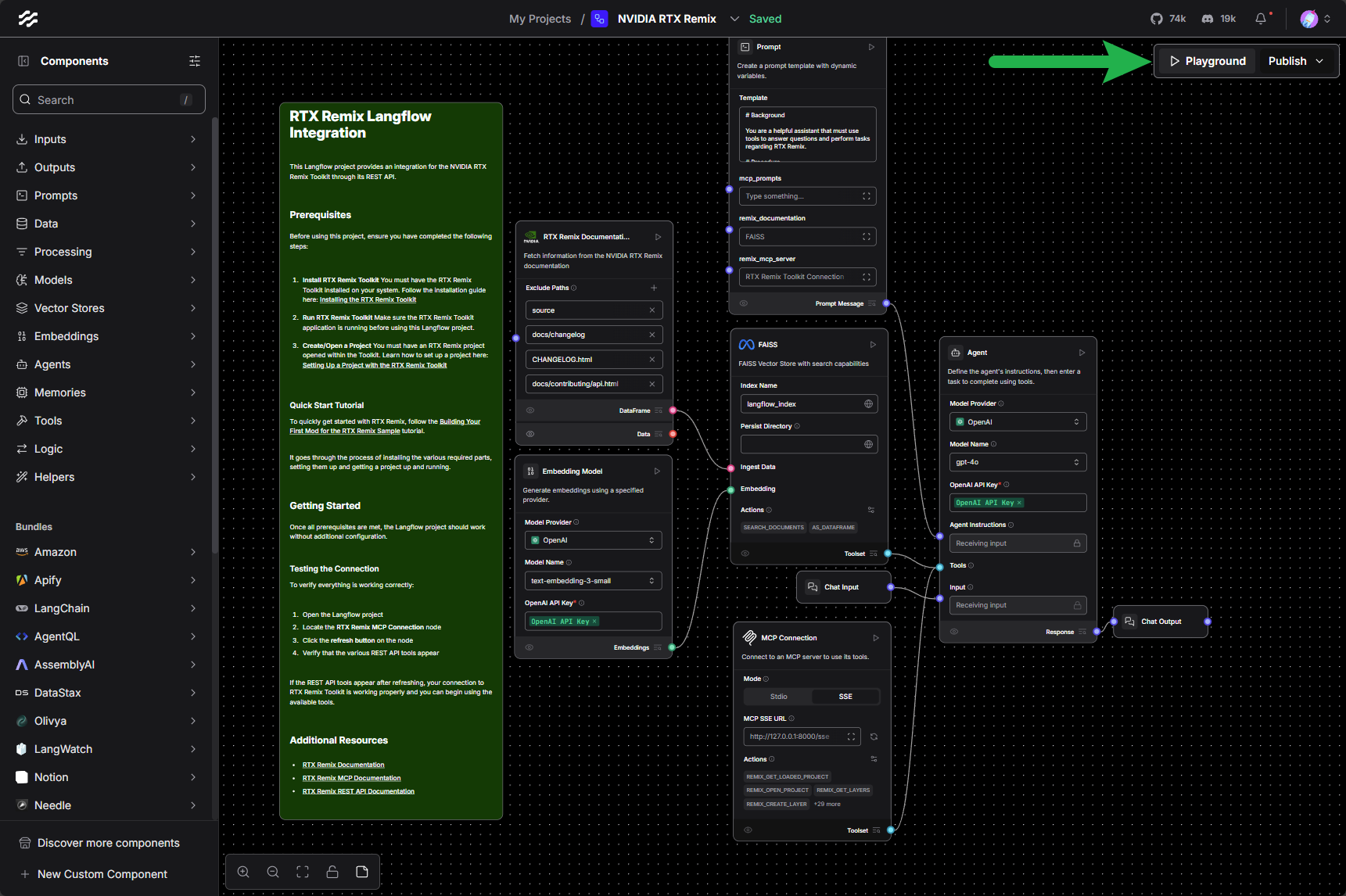

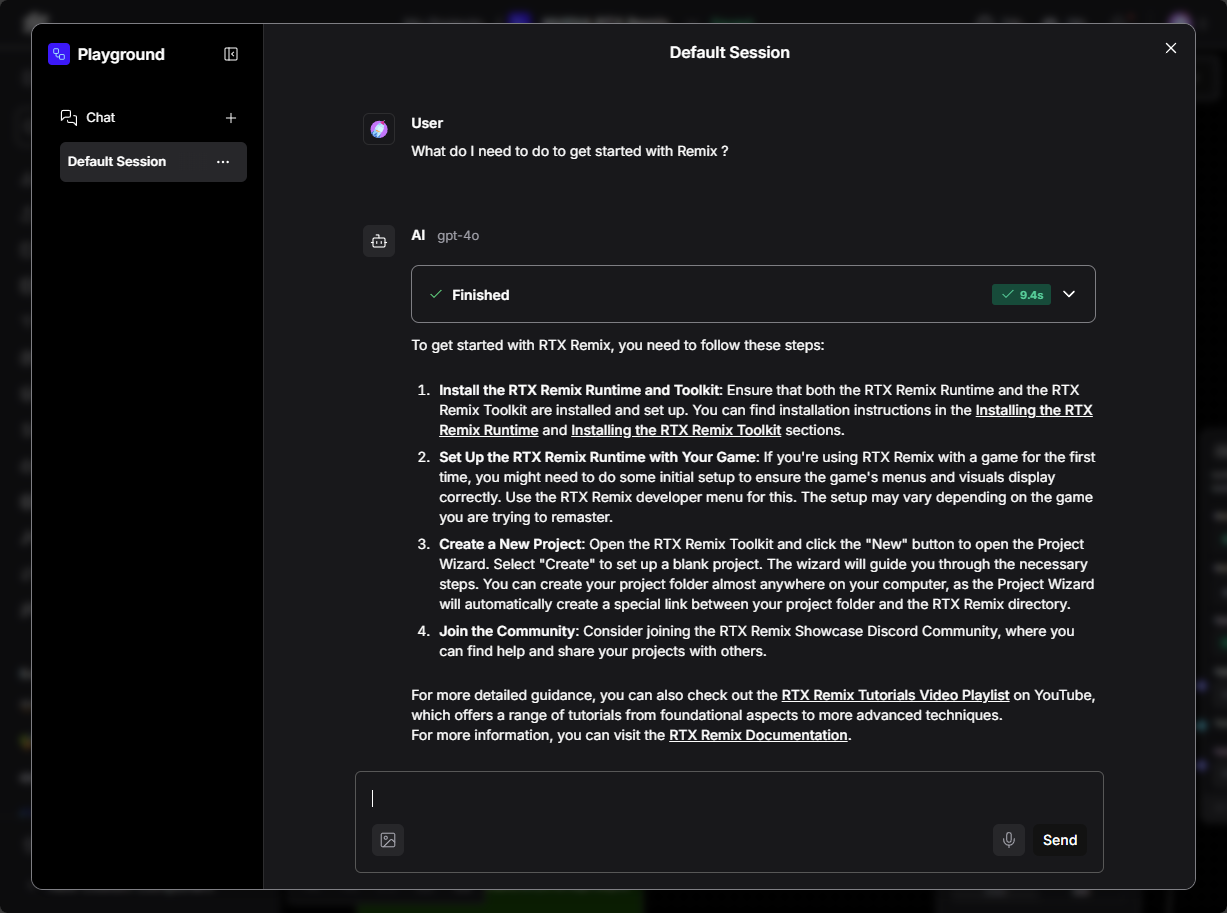

Test the flow by opening the “Playground”

Interact with the flow by entering a message in the “Input” field and clicking the “Send” button.

Tip

To troubleshoot flow issues, refer to the README node in the Langflow project for detailed information on template usage and solutions to common issues.

Using MCP-Compatible Clients

Many AI frameworks now support MCP. To connect them:

Configure the client with the SSE endpoint URL (http://127.0.0.1:8000/sse by default)

Ensure the RTX Remix Toolkit is running

The client then discovers the available tools automatically through MCP

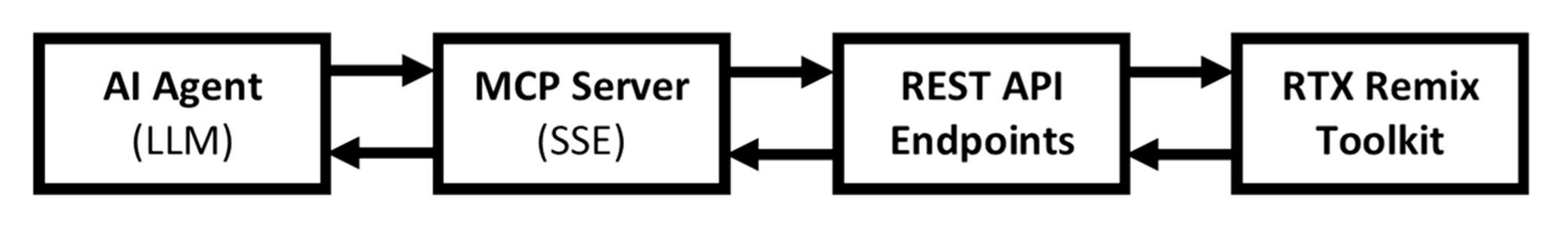
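Under the hood, tool discovery is a short JSON-RPC 2.0 exchange. The Python sketch below builds the messages an MCP client sends, in order: an `initialize` request, a `notifications/initialized` notification (sent only after the server has answered `initialize`), and a `tools/list` request. It assumes the `2024-11-05` protocol revision, which defined the HTTP+SSE transport; in that transport these messages are POSTed to the URL the server announces on the SSE stream rather than to `/sse` itself. The client name and version are placeholders.

```python
import itertools
import json
from typing import Any, Dict, List, Optional

# Assumed protocol revision (the one that defined the HTTP+SSE transport).
# The server states the version it actually supports in its initialize result.
PROTOCOL_VERSION = "2024-11-05"

# JSON-RPC request ids must be unique within a session.
_next_id = itertools.count(1)


def make_request(method: str, params: Optional[Dict[str, Any]] = None) -> Dict[str, Any]:
    """Build a JSON-RPC 2.0 request, which expects a response."""
    msg: Dict[str, Any] = {"jsonrpc": "2.0", "id": next(_next_id), "method": method}
    if params is not None:
        msg["params"] = params
    return msg


def make_notification(method: str) -> Dict[str, Any]:
    """Build a JSON-RPC 2.0 notification; it has no id and gets no response."""
    return {"jsonrpc": "2.0", "method": method}


def discovery_messages(client_name: str = "remix-sketch-client",
                       client_version: str = "0.1.0") -> List[Dict[str, Any]]:
    """Messages a client sends, in order, to start a session and list tools."""
    return [
        make_request("initialize", {
            "protocolVersion": PROTOCOL_VERSION,
            "capabilities": {},
            "clientInfo": {"name": client_name, "version": client_version},
        }),
        # Sent only after the initialize response has arrived.
        make_notification("notifications/initialized"),
        make_request("tools/list"),
    ]


if __name__ == "__main__":
    for message in discovery_messages():
        print(json.dumps(message))
```

MCP-compatible clients perform this exchange for you; the sketch only shows what "automatic discovery" amounts to on the wire.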

How MCP Works with RTX Remix

The RTX Remix MCP server operates as follows:

Automatic Startup: The MCP server starts automatically when launching the RTX Remix Toolkit

REST API Translation: It translates the Toolkit’s REST API endpoints into MCP-compatible tools

Server-Sent Events (SSE): Uses SSE streams for real-time communication with AI agents

Tool Definitions: Provides structured descriptions of available actions that LLMs can understand

MCP Prompts: Defines reusable prompt recipes that guide agents through complex workflows
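To illustrate the SSE transport, the following Python sketch parses a Server-Sent Events stream into events using the SSE field rules (`event:` and `data:` lines, comment lines starting with `:`, and a blank line ending each event). In the MCP HTTP+SSE transport, the server first sends an `endpoint` event whose data is the URL to POST JSON-RPC messages to, then delivers responses as `message` events. The sample stream, including its `session_id` value, is made up for illustration, and the `id:` and `retry:` fields are ignored.

```python
import json
from dataclasses import dataclass
from typing import Any, Dict, Iterable, Iterator, List, Optional, Tuple


@dataclass
class SseEvent:
    event: str  # event type; "message" when the stream does not set one
    data: str   # data lines joined with "\n"


def parse_sse(lines: Iterable[str]) -> Iterator[SseEvent]:
    """Yield one SseEvent per blank-line-terminated block of the stream."""
    event_type = "message"
    data_lines: List[str] = []
    for raw in lines:
        line = raw.rstrip("\r\n")
        if line == "":
            # A blank line dispatches the event, if any data was collected.
            if data_lines:
                yield SseEvent(event_type, "\n".join(data_lines))
            event_type, data_lines = "message", []
            continue
        if line.startswith(":"):
            continue  # comment line, often used as a keep-alive
        field, _, value = line.partition(":")
        if value.startswith(" "):
            value = value[1:]  # drop the single optional space after the colon
        if field == "event":
            event_type = value
        elif field == "data":
            data_lines.append(value)


def read_mcp_stream(lines: Iterable[str]) -> Tuple[Optional[str], List[Dict[str, Any]]]:
    """Return the announced POST endpoint and the JSON-RPC messages received."""
    endpoint: Optional[str] = None
    messages: List[Dict[str, Any]] = []
    for ev in parse_sse(lines):
        if ev.event == "endpoint":
            endpoint = ev.data
        elif ev.event == "message":
            messages.append(json.loads(ev.data))
    return endpoint, messages


# Made-up stream for illustration only.
SAMPLE_STREAM = [
    "event: endpoint\n",
    "data: /messages/?session_id=example123\n",
    "\n",
    ": keep-alive\n",
    "\n",
    "event: message\n",
    'data: {"jsonrpc": "2.0", "id": 1, "result": {"tools": []}}\n',
    "\n",
]

if __name__ == "__main__":
    print(read_mcp_stream(SAMPLE_STREAM))
```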

See also

See the REST API Documentation for more information on the RTX Remix Toolkit’s REST API.

Technical Architecture

Building Complex Workflows

MCP Prompts are predefined recipe templates that guide AI agents through multi-step workflows. These prompts provide structured instructions to the agent’s system context, specifying the exact sequence of operations and parameters needed to accomplish complex tasks.

How MCP Prompts Work

MCP Prompts act as intelligent workflow templates that:

Define step-by-step procedures for common tasks

Specify which tools to use and in what order

Include parameter guidance for each operation

Handle conditional logic and error scenarios
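At the protocol level, an agent fetches a prompt with a `prompts/get` request (available names come from `prompts/list`) and receives a list of messages it can place in its context. This Python sketch builds that request and flattens the text content of a result into one instruction string. The prompt name `replace_selected_asset` and the example result are hypothetical; the prompts the RTX Remix server actually offers can be listed with `prompts/list`.

```python
from typing import Any, Dict, Optional


def prompts_get_request(request_id: int, name: str,
                        arguments: Optional[Dict[str, str]] = None) -> Dict[str, Any]:
    """Build a JSON-RPC request that fetches one MCP prompt by name."""
    params: Dict[str, Any] = {"name": name}
    if arguments:
        # MCP prompt arguments are string-valued.
        params["arguments"] = arguments
    return {"jsonrpc": "2.0", "id": request_id, "method": "prompts/get", "params": params}


def prompt_to_instructions(result: Dict[str, Any]) -> str:
    """Join the text content of a prompts/get result into one instruction block."""
    parts = []
    for message in result.get("messages", []):
        content = message.get("content", {})
        if content.get("type") == "text":  # skip image or resource content
            parts.append(content["text"])
    return "\n\n".join(parts)


# Hypothetical result, shaped like an MCP prompts/get result.
EXAMPLE_RESULT = {
    "description": "Replace the asset selected in the viewport",
    "messages": [
        {"role": "user", "content": {"type": "text", "text": "1. Get the current selection."}},
        {"role": "user", "content": {"type": "text", "text": "2. List the ingested assets."}},
    ],
}

if __name__ == "__main__":
    print(prompts_get_request(3, "replace_selected_asset"))
    print(prompt_to_instructions(EXAMPLE_RESULT))
```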

Example: Asset Replacement Workflow

When an agent is asked to “replace the selected asset in the viewport,” the MCP Prompt guides it through:

Get Current Selection: Query the viewport to identify the currently selected asset

Retrieve Available Assets: List all ingested assets that could serve as replacements

Perform Replacement: Execute the replacement operation with the appropriate parameters

The prompt ensures the agent uses the correct API endpoints, passes the right arguments, and handles the response appropriately.
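The three steps can be sketched as a small Python function that takes a `call_tool` callable, so it can run against any MCP client or, as in the demo, a fake. The tool names (`get_selection`, `list_ingested_assets`, `replace_asset`), their argument names, and the response shapes are hypothetical placeholders rather than the RTX Remix tool names; in practice the agent reads the real names and schemas from `tools/list`. The `choose` callback stands in for the agent's or user's choice of replacement.

```python
from typing import Any, Callable, Dict, List

# call_tool(name, arguments) -> tool result; supplied by whatever MCP client is used.
ToolCaller = Callable[[str, Dict[str, Any]], Any]


def replace_selected_asset(call_tool: ToolCaller,
                           choose: Callable[[List[str]], str]) -> Any:
    """Run the selection -> candidates -> replacement sequence (hypothetical tools)."""
    # Step 1: ask the viewport what is currently selected.
    selection = call_tool("get_selection", {})
    if not selection:
        raise RuntimeError("Nothing is selected in the viewport")
    # Step 2: list ingested assets that could serve as replacements.
    candidates = call_tool("list_ingested_assets", {})
    if not candidates:
        raise RuntimeError("No ingested assets are available")
    # Step 3: replace the first selected item with the chosen asset.
    return call_tool("replace_asset", {"target": selection[0], "asset": choose(candidates)})


def demo_tool_order() -> List[str]:
    """Run the workflow against a fake client and return the tool-call order."""
    calls: List[str] = []
    fake_responses: Dict[str, Any] = {
        "get_selection": ["/RootNode/meshes/mesh_0001"],
        "list_ingested_assets": ["assets/ingested/crate.usd"],
        "replace_asset": {"status": "ok"},
    }

    def fake_call_tool(name: str, arguments: Dict[str, Any]) -> Any:
        calls.append(name)
        return fake_responses[name]

    replace_selected_asset(fake_call_tool, choose=lambda options: options[0])
    return calls


if __name__ == "__main__":
    print(demo_tool_order())
```

Injecting `call_tool` keeps the workflow logic independent of any particular MCP client library, which is also what makes it easy to exercise without a running Toolkit.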

Benefits of MCP Prompts

Consistency: Ensures agents follow best practices for common workflows

Efficiency: Reduces the need for lengthy natural language explanations

Reliability: Minimizes errors by providing explicit parameter specifications

Reusability: Allows sharing of workflow patterns across different AI agents

Troubleshooting

Testing the MCP Server

The MCP Inspector is the recommended tool for testing:

Make sure Node.js is installed. If it is not, follow the official Node.js installation instructions.

Start the RTX Remix Toolkit (this ensures the MCP server is running)

Launch MCP Inspector:

npx @modelcontextprotocol/inspector

Connect to the RTX Remix MCP server:

Click “Add Server”

Enter the SSE endpoint: http://127.0.0.1:8000/sse

Click “Connect”

Explore available tools, test commands, and view responses
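When a tool call misbehaves, it helps to distinguish a protocol-level JSON-RPC error (for example, an unknown method or invalid parameters) from a tool that ran but reported failure through `isError` in its result. The Python sketch below builds a `tools/call` request of the kind MCP Inspector sends and classifies a response either way; the tool name and sample responses are hypothetical.

```python
from typing import Any, Dict


def tools_call_request(request_id: int, name: str, arguments: Dict[str, Any]) -> Dict[str, Any]:
    """Build a JSON-RPC request that invokes one MCP tool."""
    return {
        "jsonrpc": "2.0",
        "id": request_id,
        "method": "tools/call",
        "params": {"name": name, "arguments": arguments},
    }


def summarize_response(response: Dict[str, Any]) -> str:
    """Classify a tools/call response as a protocol error, a tool error, or success."""
    if "error" in response:
        # JSON-RPC error object: the request itself was rejected.
        error = response["error"]
        return f"protocol error {error.get('code')}: {error.get('message')}"
    result = response.get("result", {})
    texts = [c.get("text", "") for c in result.get("content", []) if c.get("type") == "text"]
    # isError marks a tool that executed but reported a failure.
    status = "tool error" if result.get("isError") else "ok"
    return f"{status}: {' '.join(texts)}"


if __name__ == "__main__":
    print(tools_call_request(5, "get_selection", {}))
    print(summarize_response({"jsonrpc": "2.0", "id": 5,
                              "error": {"code": -32602, "message": "Invalid params"}}))
```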
