Audio2Face Tool

Use the Audio2Face Tool to drive facial animation from an audio source. The panel is made up of pipeline modules that each contribute to the audio-to-facial-animation process. Each instance of Audio2Face has its own “Core” panel containing the settings for that particular instance. By default, Audio2Face loads with the Full Face Core. See “multiple instances” below for more details.

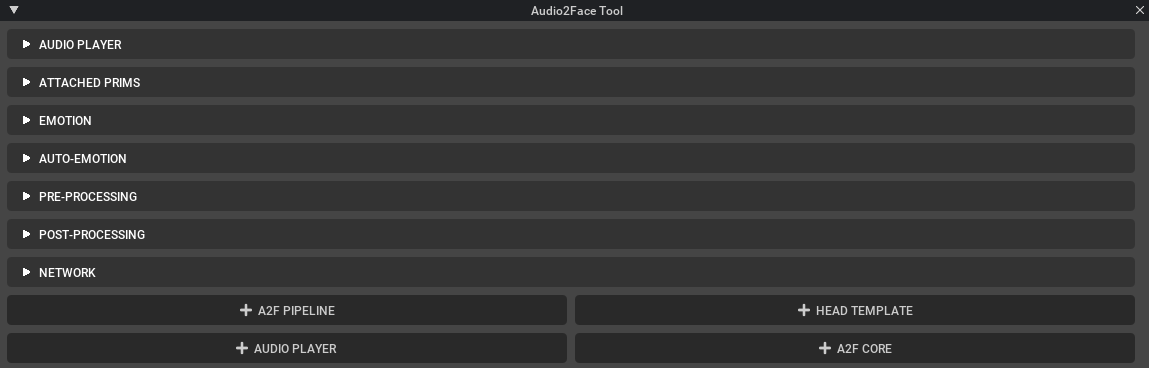

This panel is composed of the following widgets: