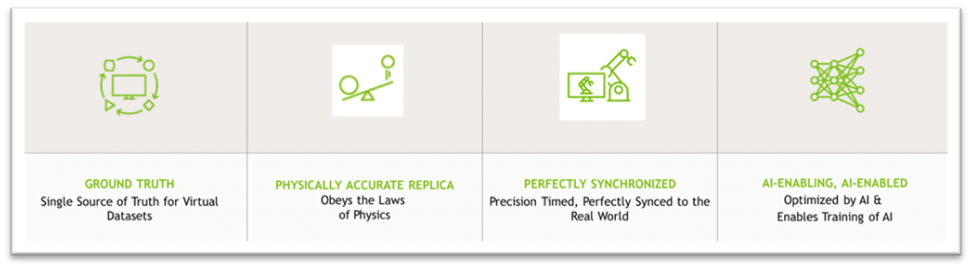

Attributes of a Live Digital Twin

Accurate and trustworthy virtual testing, experimentation, and optimization are possible only if a digital twin simulation includes specific attributes. A digital twin must be the single source of truth for all virtual datasets, obey the laws of physics, stay perfectly synchronized with the real world, be optimized by AI, and enable the training of AI.

Using the Omniverse platform to build your warehouse digital twin, you can satisfy these attributes as follows:

Ground Truth:

Built on open standards, Omniverse enables enterprises and developers to aggregate and connect 3D design and computer-aided design (CAD) applications to build and iterate upon a single source of truth for virtual datasets. Teams can also connect and extend to IoT, data systems, and industrial automation tools.

Physically Accurate Replica:

Omniverse digital twins are physically accurate with true-to-reality physics, materials, lighting, rendering, and behavior – powered by the Omniverse RTX Renderer. The Omniverse RTX Renderer is a physically based real-time ray-tracing renderer built on NVIDIA’s RTX technology, Pixar’s Universal Scene Description (USD), and NVIDIA’s Material Definition Language (MDL). It provides two render modes supporting fully dynamic lighting (without any light baking) with thousands of lights, millions of objects, and the flexible MDL material representation.

Physics in Omniverse is enabled by the NVIDIA PhysX SDK. You can add rigid-body dynamics to building blocks and topple them over, create ragdolls or simulate walking robots using articulations, build complex mechanisms using joints, or pour a jar of gummy bears into your scene using deformable-body simulation. Read more about the Physics Core here. For more advanced physics and simulation workflows that incorporate artificial intelligence, see the Modulus extension.

Perfectly Synchronized:

Omniverse digital twins are true real-time, living simulations that operate at precise timing, where the virtual representation is constantly synchronized to the physical world. This enables enterprises not only to diagnose a single moment in time but also to accurately simulate and predict infinite “what-if” scenarios.
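To make the idea of synchronization concrete, the following is a minimal sketch, in plain Python, of how a virtual record might track timestamped readings from a physical asset. It does not use any Omniverse API. The names `TwinState`, `Reading`, `apply_reading`, and `sync_lag_s`, as well as the `forklift-7` asset, are hypothetical and exist only for illustration.

```python
from dataclasses import dataclass


@dataclass
class TwinState:
    """Hypothetical virtual-side record for one physical asset."""
    asset_id: str
    position_m: float = 0.0
    last_update_s: float = float("-inf")


@dataclass
class Reading:
    """Hypothetical timestamped telemetry sample from the physical asset."""
    asset_id: str
    position_m: float
    timestamp_s: float


def apply_reading(state: TwinState, reading: Reading) -> bool:
    """Apply a reading only if it is newer than the twin's current state.

    Returns True when the twin was updated, False when the reading was
    stale or addressed to a different asset.
    """
    if reading.asset_id != state.asset_id:
        return False
    if reading.timestamp_s <= state.last_update_s:
        return False  # out-of-order or duplicate sample: keep the newer state
    state.position_m = reading.position_m
    state.last_update_s = reading.timestamp_s
    return True


def sync_lag_s(state: TwinState, now_s: float) -> float:
    """Seconds elapsed since the twin last matched a physical reading."""
    return now_s - state.last_update_s


# Illustrative data: the second sample arrives out of order and is ignored.
forklift = TwinState(asset_id="forklift-7")
samples = [
    Reading("forklift-7", 1.0, 10.0),
    Reading("forklift-7", 0.5, 9.0),
    Reading("forklift-7", 2.0, 11.0),
]
applied = [apply_reading(forklift, r) for r in samples]
lag = sync_lag_s(forklift, now_s=11.5)
```

Rejecting samples older than the last applied timestamp is one simple way to keep the virtual state from moving backward in time. A production system would also need clock alignment between devices, which this sketch assumes away.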

AI-Enabling, AI-Enabled:

Coupled with NVIDIA Isaac, Metropolis, cuOpt, Modulus, and more, enterprises can achieve an autonomous feedback loop between the real world and digital twin environments. This loop can constantly retrain and optimize perception AI for systems such as robots or conveyor belts, or constantly run predictive “what-if” simulations to re-optimize the digital twin itself.
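As a rough illustration of a predictive “what-if” sweep, the sketch below evaluates several candidate conveyor speeds against a toy throughput model and picks the best one. It is not based on Isaac, cuOpt, or any other NVIDIA API. The function names, the item spacing, and the jam threshold are all assumptions invented for this example.

```python
def simulate_throughput(
    belt_speed_mps: float,
    item_spacing_m: float = 0.5,   # assumed distance between items
    jam_speed_mps: float = 2.0,    # assumed speed above which jams occur
) -> float:
    """Toy model of items delivered per second by a conveyor.

    Throughput grows linearly with belt speed. Above the assumed jam
    threshold, half of the items are treated as lost.
    """
    rate = belt_speed_mps / item_spacing_m
    if belt_speed_mps > jam_speed_mps:
        rate *= 0.5
    return rate


def best_scenario(candidate_speeds_mps):
    """Return the candidate speed with the highest simulated throughput."""
    return max(candidate_speeds_mps, key=simulate_throughput)


# Sweep a handful of hypothetical "what-if" belt speeds.
speeds = [0.5, 1.0, 1.5, 2.0, 2.5]
best = best_scenario(speeds)
```

In a real feedback loop, the toy model would be replaced by the digital twin simulation itself, and the chosen setting would be pushed back to the physical system before the next round of measurements.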