Carbonite Audio Playback Interface

Overview

The carb::audio::IAudioPlayback interface provides access to low-level audio processing, device and output management, and all audio processing systems. The playback interface operates on sound data objects provided by the carb::audio::IAudioData interface. The playback interface does not directly interact with the carb::audio::IAudioGroup interface, but can accept the play descriptors generated from it.

Contexts

Audio playback starts with creating a carb::audio::Context object. This encapsulates all state and functionality of a single instance of audio playback. Multiple contexts can exist at the same time and will function independently. Almost all operations in the playback interface operate on either a context object or something derived from a context (e.g. a carb::audio::Voice). Creating a new context should only need to be done once on startup of an app. Once created, it can be adjusted as needed to meet the app’s needs.

There are two ways to create a new context: as a default context, or by providing a descriptor of how to create the context. If no descriptor is passed to the carb::audio::IAudioPlayback::createContext function, a default context will be created. This context will target the system’s default output device, will have a reasonable number of buses to play voices on, and will not have any context level callback functionality. If a descriptor is given, all features of context creation can be customised as needed for the app. The most common use for this would be to select an output device other than the system’s default device.

Multiple contexts can exist simultaneously. They could each target a different output device or more than one could target the same output device. For the most part, having multiple contexts target the same device for output should work. The one possible exception to this would be Linux hardware devices since these require exclusive access. All other devices (including Linux ‘dmix’ devices and all Linux PulseAudio devices) should be able to share their output between multiple contexts. There is, however, a limit on the number of contexts that can exist simultaneously. This is currently 16. Each context comes with its own set of resources and processing requirements, so it is best to keep the number of independent simultaneous contexts as low as possible.

Selecting a Device

The playback devices attached to the system can be enumerated through the carb::audio::IAudioPlayback interface. Doing so doesn’t require that a context be created first. Each device is assigned an index value starting at 0. Device 0 will always be the system’s default playback device. All other devices will be given increasing contiguous indices. There will never be a gap in the index list even if a device is removed from the system. The enumeration order will remain stable as long as no devices are added to or removed from the system; however, the ordering within the list is determined by the system itself and may not be sorted in any way (except for the default device being at index 0).

Each device has a set of capabilities and information associated with it. These are retrieved with the carb::audio::IAudioPlayback::getDeviceCaps function in the carb::audio::DeviceCaps struct. The device capabilities include the device’s name, unique ID, and its preferred data format (i.e. channel count, frame rate, and sample type). The common use case for this information is to expose it to the user to allow them to select a specific playback device. Another possible use would be to find the device that most closely matches a preferred channel layout (e.g. 5.1 surround sound instead of just stereo).

The system’s audio device list is highly volatile. It can change at any time completely outside the control of the host app. This occurs when a sound device is added to or removed from the system (or both). This can happen from user actions such as installing or updating drivers, connecting or disconnecting USB or Bluetooth devices, connecting or disconnecting a gamepad that has an embedded audio device, or even the batteries on a wireless audio device dying and the device being lost from the system. When enumerating devices, it is best not to cache the device capabilities or even the device count, but to always fully enumerate devices any time their information is needed.
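
As an illustration of matching a preferred channel layout, the following minimal sketch picks the device whose preferred channel count is closest to a requested one. The `DeviceInfo` struct and the `pickDevice` function are hypothetical stand-ins invented for this example and are not part of carb::audio. The sketch assumes the list was filled from a fresh enumeration immediately before the call, not from cached values.

```cpp
#include <cassert>
#include <cstddef>
#include <string>
#include <vector>

// Hypothetical stand-in for the per-device information an app might copy out
// of a fresh enumeration. This is NOT the carb::audio::DeviceCaps layout.
struct DeviceInfo
{
    std::string name;
    std::size_t channels; // preferred channel count reported for the device
};

// Returns the index of the device whose channel count is closest to
// `preferredChannels`. Ties keep the lowest index, so the system default
// device (index 0) wins whenever it matches equally well.
std::size_t pickDevice(const std::vector<DeviceInfo>& devices, std::size_t preferredChannels)
{
    assert(!devices.empty()); // the caller is expected to enumerate first

    std::size_t best = 0;
    std::size_t bestDistance = static_cast<std::size_t>(-1);
    for (std::size_t i = 0; i < devices.size(); ++i)
    {
        const std::size_t c = devices[i].channels;
        const std::size_t distance = c > preferredChannels ? c - preferredChannels : preferredChannels - c;
        if (distance < bestDistance)
        {
            best = i;
            bestDistance = distance;
        }
    }
    return best;
}
```

Because the device list can change at any moment, the returned index is only meaningful until the next change. Opening the chosen device can still fail and should be checked afterwards.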

Once a suitable device has been found or chosen by the user, it may be set as active either by using carb::audio::IAudioPlayback::createContext to create a new context object that uses the new device as an output, or by calling carb::audio::IAudioPlayback::setOutput to dynamically change the output of an existing context object. In both cases, it is possible that opening the requested device could still fail (e.g. it was removed from the system between enumerating and opening). Opening the device unfortunately must be done asynchronously for safety, so its success or failure is not reflected in the return value of either carb::audio::IAudioPlayback::createContext or carb::audio::IAudioPlayback::setOutput.

In order to verify whether the device was successfully opened, the context’s capabilities object can be retrieved with carb::audio::IAudioPlayback::getContextCaps. This will show the information for the currently selected device and output. If the carb::audio::DeviceCaps::flags member of the carb::audio::ContextCaps::selectedDevice object is set to carb::audio::fDeviceFlagNotOpen, the device was not successfully opened and a new output device will need to be selected eventually. The context is still fully valid and will process its data as expected even if it is not able to output to a device.

Streamers

As an alternative or addition to the output devices, a context’s output can be sent to one or more streamer objects. These take a continuous stream of data and perform a host app specified operation on it. Streamer objects must be implemented, created, and managed by the host app. There is no requirement for how they must process their data. The most common usage of a streamer would be to dump the stream of processed audio to a file as it is produced. However, the streamer may be used for other purposes such as sending the data over a network for playback elsewhere, providing access to a proprietary output device that is not known to the system, or even sending the output to a voice to use as its input.

Multiple streamer objects may be attached to the output simultaneously. There is no hard limit to the number of streamers that can be attached. However, there is a processing limit that the host app must consider when attaching multiple streamers. If any of the streamers are targeting output devices or an output device is also being targeted by the context, all of the streamers must process their data fast enough that the device(s) are not starved of data. This generally means that all streamers must process their data in under 5ms total. Failing to do so will introduce a glitch into the device output. If no output devices are being targeted, the streamers are free to take as long as they need to process their data.

All of the outputs, including all streamers and playback devices, are together responsible for managing the processing rate of the context. The return values from their write functions determine when the context’s next cycle is run. However, all outputs must work together to ensure that the context continues producing data at an appropriate rate. Effectively, the most severe return code from all outputs will determine when the context runs its next cycle. If one of the outputs is close to starving and returns a ‘critical’ code, the context will run a new cycle immediately regardless of what any other outputs return.
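
The "most severe return code wins" rule can be sketched as a simple maximum over the results of one cycle. The `WriteUrgency` enum, its value names, and `combineUrgency` are hypothetical and exist only for this illustration; they are not the return codes defined by carb::audio.

```cpp
#include <algorithm>
#include <cassert>
#include <vector>

// Hypothetical urgency levels an output might report after a write, ordered
// from least to most severe. These are NOT the carb::audio enum values.
enum class WriteUrgency
{
    eNormal = 0,   // the output has plenty of buffered data
    eLow = 1,      // the output would like more data soon
    eCritical = 2, // the output is close to starving
};

// Combines the results reported by every output for one cycle. The most
// severe result decides how soon the next cycle should run.
WriteUrgency combineUrgency(const std::vector<WriteUrgency>& results)
{
    assert(!results.empty()); // a context with no outputs has nothing to combine
    return *std::max_element(results.begin(), results.end());
}
```

With this reduction, a single output reporting the critical level is enough to request an immediate cycle, regardless of what the other outputs reported.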

Another common use for a streamer is in a baking context. This kind of context does not output to a playback device, but instead only outputs to one or more streamers. The context will run its cycles as fast as it can (as instructed by the streamer) and the context’s running state can be explicitly managed by the host app. The context’s engine may be started as needed by the host app and will stop on its own when the last voice finishes playing. This type of context can be used to ‘pre-bake’ expensive effects into sounds to use in a scene.

Sound Data Objects

As described in the IAudioData interface, all sound data objects are managed independently of the carb::audio::IAudioPlayback interface. This means that a single sound data object may be freely shared between multiple playback contexts. Since sound data objects are reference counted, they will not be destroyed until the last context finishes playing them. Aside from managing their own references to them, the context objects do not have any management oversight on sound data objects.

A sound data object defines its own ‘instance count’ value. This instance count is enforced by the playback context object. If a request to play a sound determines that the sound is already at or above its instance limit, it will either fail to play or be immediately put into ‘simulation’ mode as a virtual voice. This behaviour depends on whether the carb::audio::fPlayFlagMaxInstancesSimulate flag is used in the play descriptor. This instance count behaviour is useful to limit the number of fire-and-forget instances of a sound being played simultaneously. Adding one more instance of the sound won’t necessarily enhance the auditory scene, but would cost extra processing time.
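
The decision described above can be summarised in a small sketch. The `PlayResult` enum and `resolveInstanceLimit` function are hypothetical names for this illustration, and the `simulateWhenFull` parameter stands in for whether carb::audio::fPlayFlagMaxInstancesSimulate was given in the play descriptor. The sketch also assumes a non-zero limit; how the real interface treats other values is not covered here.

```cpp
#include <cassert>
#include <cstddef>

// Hypothetical outcome of a play request; not a carb::audio type.
enum class PlayResult
{
    ePlayed,    // the sound was below its instance limit and plays normally
    eSimulated, // the limit was reached and the voice starts as a virtual voice
    eFailed,    // the limit was reached and the play request is rejected
};

// Decides what happens to a new play request for a sound that currently has
// `playingInstances` instances and allows at most `maxInstances`.
PlayResult resolveInstanceLimit(std::size_t playingInstances, std::size_t maxInstances, bool simulateWhenFull)
{
    assert(maxInstances > 0); // assumption of this sketch: the limit is non-zero

    if (playingInstances < maxInstances)
        return PlayResult::ePlayed;

    // At or above the limit: the outcome depends on the simulate flag.
    return simulateWhenFull ? PlayResult::eSimulated : PlayResult::eFailed;
}
```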

Voices

A voice represents a playing instance of a sound data object. A voice handle can be obtained from the return value of carb::audio::IAudioPlayback::playSound. The voice handle can then be used to operate on the playing instance of the sound as it plays. The operations include updating emitter parameters, changing volume, changing frequency ratio, etc. These operations will only be valid while the voice is still playing. After the voice finishes, any attempt to change its parameters will simply be ignored.

A voice handle is a throw-away object. It does not need to be destroyed or released in any way. If it is not needed, for example in a fire-and-forget sound, the return value of the carb::audio::IAudioPlayback::playSound call can simply be discarded. Voice handles will be reused internally as needed. It is possible that a cached voice handle may be reused and become valid again at some point. However, even if 4096 1-millisecond sounds were being played constantly, it would still take ~4.6 hours for that to occur. In real use situations, voice handle reuse would be more on the scale of months to years.

There is a hard limit of 16,777,216 voices that can be playing simultaneously in a sound scene. Once this limit is reached, attempts to play another sound will fail. However, processing that many voices simultaneously would be a daunting task for even the most powerful modern computers. Each context object has a limited number of voices that it can process and play simultaneously as well. This limit is defined by the maximum number of buses that the context supports. This bus count is set either when creating the context or by calling carb::audio::IAudioPlayback::setBusCount.

The context will try to prioritize the playing of the most audible or important voices on the buses. All other voices will be simulated. If there are fewer voices than there are buses, all audible voices will be played. If there are more voices than buses, the most important voices will be brought onto buses and the others will be simulated. The set of voices that are assigned to buses may change as the scene progresses, especially as spatial audio parameters change (e.g. the listener or emitters change position).
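
A minimal sketch of this kind of assignment is shown below. It ranks voices by a single score and gives buses to the top entries. The `VoiceState` struct, its `priority` score, and `assignBuses` are hypothetical; the actual ranking used by the context is not documented here and may weigh audibility and importance differently.

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <vector>

// Hypothetical per-voice bookkeeping; not a carb::audio type.
struct VoiceState
{
    int id;
    float priority; // assumed audibility/importance score, higher wins
    bool onBus;     // true = played on a bus, false = simulated
};

// Sorts the voices from most to least important, then marks the first
// `busCount` of them as playing on a bus and the rest as simulated.
void assignBuses(std::vector<VoiceState>& voices, std::size_t busCount)
{
    assert(busCount > 0); // assumption of this sketch: at least one bus exists

    std::stable_sort(voices.begin(), voices.end(),
                     [](const VoiceState& a, const VoiceState& b) { return a.priority > b.priority; });

    for (std::size_t i = 0; i < voices.size(); ++i)
        voices[i].onBus = i < busCount;
}
```

Re-running the assignment whenever the scores change models how the set of voices on buses can shift as the listener or emitters move.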

A voice created from one context cannot be confused with a voice from another context. The functions in carb::audio::IAudioPlayback that take a voice as a parameter will always affect the output of only the context that originally created the voice.

Speaker Layouts

When creating a context (carb::audio::IAudioPlayback::createContext) or changing the output (carb::audio::IAudioPlayback::setOutput), a new speaker layout may be chosen. This takes the form of a speaker mask. The mask is a set of bits that indicate which predefined channels are present in the layout. The order of the channels in each frame follows the SMPTE standard order. Some speaker masks for the SMPTE defined standard layouts are also defined in the carb::audio::SpeakerMode flags. Beyond 16 channels the speaker masks move into the realm of custom speaker layouts. In these cases, it is the host app’s responsibility to specify where each speaker is located.

If one of the standard speaker layouts doesn’t suit the needs of the host app, a custom speaker layout can still be created. The user relative direction of each speaker in the layout can be set with a call to carb::audio::IAudioPlayback::setSpeakerDirections. This allows an explicit direction to be specified for each speaker. Speakers are always treated as being located on the unit sphere with the user at the origin. The directions to each of the speakers are used to position them in the virtual world around the listener in order to more accurately simulate spatial audio.

The carb::audio::IAudioPlayback interface supports multi-layered speaker layouts as well. Many of the older SMPTE standard speaker layouts such as 5.1, 7.1, etc. only had speakers that existed on the XY plane around the user (i.e. the plane parallel to the floor). Some newer standard speaker layouts, such as 7.1.4, provide multiple planes of speakers. These formats have a set of speakers on the XY plane (8 in the case of the 7.1.4 layout), and more speakers on another plane higher up on the unit sphere. Custom speaker layouts can also make use of speakers in higher or lower planes by providing a non-zero top/bottom position in the speaker directions descriptor.

Spatial Audio

Every voice can be either a spatial voice or a non-spatial voice. This mode is selected in the play descriptor when carb::audio::IAudioPlayback::playSound is called. Once the mode has been set, it is possible to switch it by updating the voice’s playback mode, but the results may be undefined. However, a spatial voice can be shifted to behave as a non-spatial voice by adjusting its ‘non-spatial mix level’ parameter. This parameter can be used to transition between a spatial and non-spatial voice and back as needed.

All spatial voices require that their emitter parameters be updated as needed. If the emitter moves within the virtual world, its position and orientation must be updated as well. Similarly, if the listener moves within the virtual world, the context must have its listener parameters updated. Without these parameter changes, spatial audio changes in the virtual world cannot properly be tracked and it will appear as though the emitter is not moving relative to the listener.

Each spatial voice has its own attenuation range associated with it. This is the full range over which it can be heard. If the listener is outside of its range, sound from that voice will not be heard. While the listener is within the voice’s attenuation range, its sound will be heard but the volume will be adjusted according to the distance between the two. By default, a linear square rolloff model is used. This matches the physical wavefront energy loss model. Different rolloff models can be used as well as a custom rolloff model where a custom piece-wise curve is provided by the host app.

When the listener is not within the attenuation range of a voice, there is a possibility that the voice could become simulated. When in simulation mode, no audio data is processed for the voice; only its simulated play position is tracked based on elapsed time. When the voice becomes real again (i.e. the listener enters its range), it will resume at the position that it should be at given the simulation of its position. Note that the host app is free to specify that some of its voices may use the playback mode that prevents their play position from being simulated. In these cases, the voice’s sound will simply restart when the listener comes back into its range. This is the default case for sounds that are infinitely looping (e.g. a repeating machine noise, chirping bird, etc.). It generally doesn’t benefit the scene to have the extra processing expense of tracking their position when it doesn’t generally matter to the scene’s integrity.
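
Tracking a simulated play position from elapsed time can be sketched as follows. The `simulatedFrame` function and its parameters are hypothetical and only illustrate the arithmetic. The sketch assumes playback at a constant frame rate with no frequency ratio changes while the voice is simulated, and treats a looping sound as repeating over its full length.

```cpp
#include <cassert>
#include <cstdint>

// Estimates the frame at which a simulated voice should resume.
//   startFrame     - play position when the voice became simulated
//   elapsedSeconds - time spent in simulation mode
//   frameRate      - frames per second of the sound
//   lengthFrames   - total length of the sound in frames
//   looping        - whether the position wraps around at the end
std::uint64_t simulatedFrame(std::uint64_t startFrame,
                             double elapsedSeconds,
                             std::uint32_t frameRate,
                             std::uint64_t lengthFrames,
                             bool looping)
{
    assert(lengthFrames > 0 && elapsedSeconds >= 0.0);

    const std::uint64_t advanced = static_cast<std::uint64_t>(elapsedSeconds * frameRate);
    const std::uint64_t position = startFrame + advanced;

    if (looping)
        return position % lengthFrames; // wrap around the sound

    // A non-looping sound that ran past its end has finished playing.
    return position < lengthFrames ? position : lengthFrames;
}
```

For example, a 48 kHz sound that became simulated at frame 1000 and stayed simulated for 2 seconds would resume at frame 97000 under these assumptions.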

Event Points

Each sound data object can include one or more optional event points. An event point is a frame in the sound’s data where some arbitrary event is expected to occur. The event point object itself contains a frame index, a name or label, an optional block of text, and information about an optional region in the sound. Some common uses for event points would be to provide closed captioning text for some dialogue, or to synchronize world events to cues in the sound track. The optional block of text in the event point can be used to contain the closed captioning text for example. Some file formats such as RIFF allow this text to be embedded in the file itself and can be automatically parsed into event points on load.

When the carb::audio::IAudioPlayback interface is playing a sound that has event points enabled, each time it processes a frame that contains an event point, a callback will be triggered on the voice (provided a callback is given). The host app can then do whatever it needs to do to handle the event point.

When the callback is performed, it will be in the context of the audio processing engine thread. The callback must execute as quickly as possible; otherwise it will interrupt the processing engine. The best practice is to flag that the event point occurred and handle its response later in another thread.
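
One way to flag an event point from the engine thread and handle it elsewhere is a single atomic "mailbox", sketched below. The `EventPointMailbox` class is hypothetical and is not part of carb::audio; the actual callback signature is not shown here. This sketch keeps only the most recent event point, so an app that must see every event would need a queue instead.

```cpp
#include <atomic>
#include <cassert>
#include <cstdint>

// Hypothetical hand-off between the engine thread and another thread.
class EventPointMailbox
{
public:
    // Sentinel meaning "no event point is pending".
    static constexpr std::uint64_t kNoEvent = UINT64_MAX;

    // Intended to be called from inside the event point callback. It only
    // performs one atomic store: no locks, allocation, or I/O.
    void post(std::uint64_t frame)
    {
        assert(frame != kNoEvent); // the sentinel value is reserved
        m_frame.store(frame, std::memory_order_release);
    }

    // Intended to be called from another thread, for example once per main
    // loop iteration. Returns the most recently posted frame and clears it,
    // or returns kNoEvent if nothing was posted since the last call.
    std::uint64_t take()
    {
        return m_frame.exchange(kNoEvent, std::memory_order_acquire);
    }

private:
    std::atomic<std::uint64_t> m_frame{ kNoEvent };
};
```

The callback would call `post()` and return immediately, while the main loop calls `take()` and performs the slower response work, such as displaying caption text.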

Each event point object can also contain a user data object. This can be used to attach an app specific object to each event point to provide extra information. This extra information will be delivered with the event point callback when it is performed and be used to access host app specific information directly. The user data object can be given an optional destructor function to ensure it is cleaned up when it is either replaced or the sound data object is destroyed.

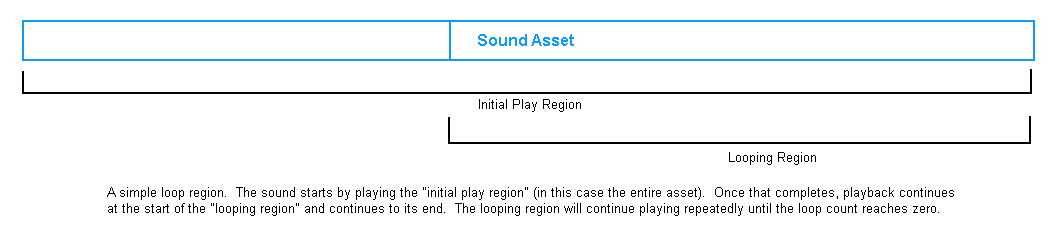

Loops

A loop point is just a special case of an event point. The loop point can either be part of the sound data object itself or be specified explicitly. A loop point is set either when first playing the sound with carb::audio::IAudioPlayback::playSound, or by calling carb::audio::IAudioPlayback::setLoopPoint.

The only difference between a loop point and an event point is that the loop point will also have an optional length value specifying the region to play and a loop count. However, any event point can still be used as a loop point: a zero length means that the remainder of the sound will be played for the loop region, and a zero loop count means that the loop region will be played only once.

The frame stored in the loop point indicates the point to jump to when the voice’s original play region completes. After that, playback will continue beginning at the loop point’s frame and continuing through its specified length. A loop point can also contain a loop count to allow it to repeat a specific number of times. The loop count is the number of times the loop region should repeat itself. For example, a loop count of zero means the region will play once and not repeat, while a loop count of 1 means it will play twice in total.
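
The loop count and zero-length rules can be checked with a little arithmetic. In the sketch below, the `LoopRegion` struct and both functions are hypothetical, not the carb::audio::LoopPointDesc layout. It assumes the initial play region is played once before the loop region starts, and it does not model any special loop count values (such as an infinite loop) that the real interface may define.

```cpp
#include <cassert>
#include <cstdint>

// Hypothetical description of a loop region; not a carb::audio type.
struct LoopRegion
{
    std::uint64_t startFrame; // frame to jump to when the initial region ends
    std::uint64_t length;     // length in frames, 0 = play to the end of the sound
    std::uint32_t loopCount;  // number of repeats after the first pass
};

// Number of frames in one pass through the loop region.
std::uint64_t loopPassLength(const LoopRegion& loop, std::uint64_t soundLength)
{
    assert(loop.startFrame < soundLength);
    return loop.length != 0 ? loop.length : soundLength - loop.startFrame;
}

// Total frames heard: the initial play region once, followed by
// (loopCount + 1) passes through the loop region.
std::uint64_t totalFramesPlayed(std::uint64_t initialLength, const LoopRegion& loop, std::uint64_t soundLength)
{
    const std::uint64_t passes = static_cast<std::uint64_t>(loop.loopCount) + 1;
    return initialLength + loopPassLength(loop, soundLength) * passes;
}
```

For a 100000 frame sound with a 20000 frame initial region, a loop starting at frame 20000 with zero length and a loop count of 1 plays the remaining 80000 frames twice, for 180000 frames in total.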

A loop region can be anywhere in the asset. It does not necessarily need to overlap the initial play region. The loop region does not even need to be contiguous with the initial play region in the asset. A common use for a simple looping region would be to play a repeating sound that has an initial ‘start up’ portion with it. An example of this might be an engine sound where the initial play region is the engine being started, then the looping portion of it is the engine rumbling sound.

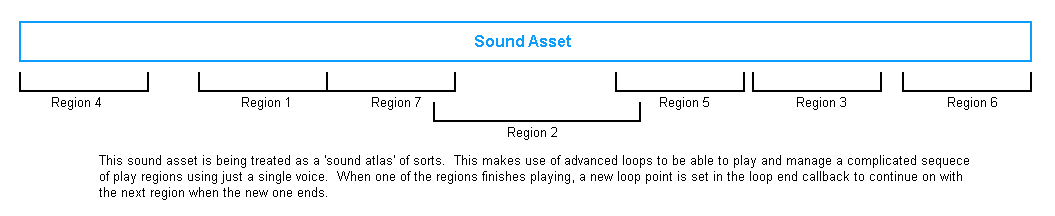

Advanced loops can be performed by changing the loop point in the carb::audio::VoiceCallbackType::eLoopPoint callback event. The next time the loop point region completes, it will continue with the new loop region of the sound. This can effectively be used to map out a ‘playlist’ in a large sound. Some file formats such as RIFF allow this playlist information to be stored inside them. When these files are loaded, the playlist order will be stored in the carb::audio::EventPoint::playIndex member of the carb::audio::EventPoint object. The sound’s event points that are part of the playlist can be enumerated using carb::audio::IAudioData::getEventPointByPlayIndex. Most apps will not require the use of advanced looping or playlist features.

A loop point is created either when playing a sound with carb::audio::IAudioPlayback::playSound, or by setting a new one on a voice explicitly with carb::audio::IAudioPlayback::setLoopPoint. In both cases, a carb::audio::LoopPointDesc descriptor is used. This allows the loop point information to be specified in one of a few ways:

- specified as an index in the sound data object’s event point table. This would be the event point that is returned by passing the same index to carb::audio::IAudioData::getEventPointByIndex. This access mode is only used when no loop point object is specified in the descriptor.

- by retrieving an event point object from the sound data object and setting it in the descriptor.

- by manually creating an event point object with the loop region information and setting it in the descriptor. In this case the loop point’s information will be shallow copied into the voice’s parameter block. If the constructed loop point has text associated with it, it is the caller’s responsibility to ensure that text remains valid until the loop point is hit.

A loop point can be set at any time on a voice, but is generally safest inside a loop end callback. If a new loop point is set at any other time, there may be a race between the new loop point being set and the current play region completing. In such a case, it is possible that the previous play or loop region could repeat one extra time before the new loop region is played.

Typical Usage

Typical usage of the carb::audio::IAudioPlayback interface would take the following steps:

- create a new context with carb::audio::IAudioPlayback::createContext. This context object will be used to manage all state for audio playback.

- load or create some sound data objects with the carb::audio::IAudioData interface. These objects will need to be released by the host app when they are no longer needed.

- add a call to carb::audio::IAudioPlayback::update to the app’s main loop. This performs several operations including updating voices that are being simulated, recycling freed voice handles, updating voice and bus parameters, performing queued callbacks, etc. Some operations will still work without calling this, but the results will generally be undefined.

- play sounds as needed with one or more calls to carb::audio::IAudioPlayback::playSound. This will create voice handles to track the playing state of each sound.

- destroy the context when all playback is complete.

- clean up all sound data objects that were loaded.