Spatial Streaming for Omniverse Digital Twins Workflow

In this guide, you will learn how to use Omniverse to author OpenUSD stages that can be streamed to a client application running on the Apple Vision Pro or iPad Pro. You will also learn how to build these front-end clients, which run natively on the Vision Pro or iPad Pro, with Swift and SwiftUI.

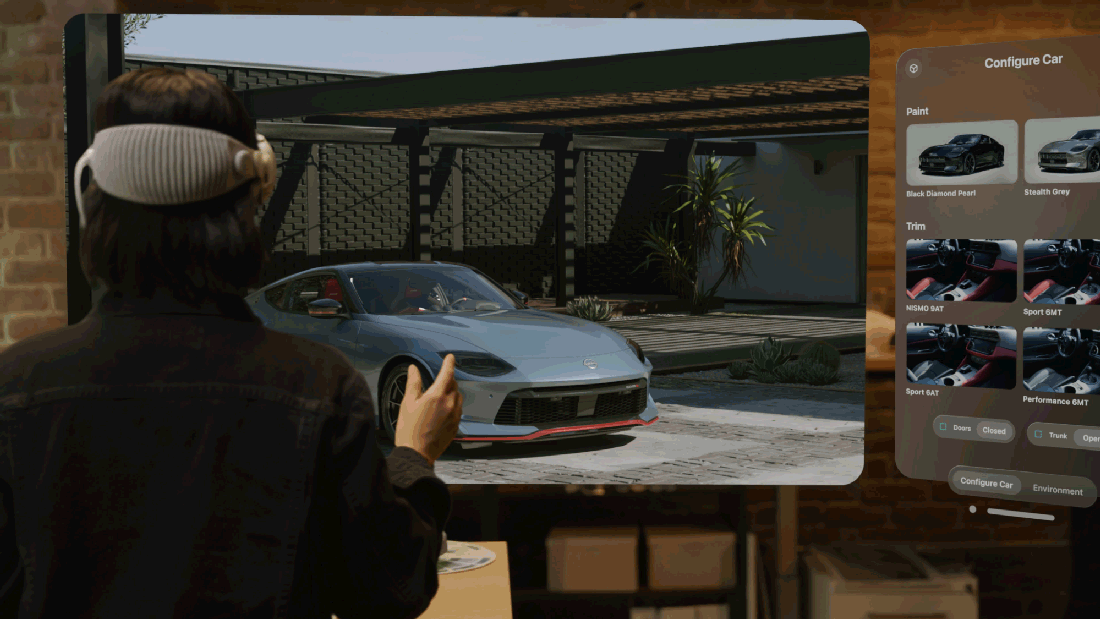

Used together, Omniverse and a native client let you create state-of-the-art hybrid applications that take advantage of the unique device and platform capabilities of the Apple Vision Pro and iPad Pro while allowing users to interact with an accurate simulation of a digital twin.

Note

If you are a developer coming from the CloudXR Framework 1.0 release, note that some of the methods for setting up, sending, and receiving client events have been refactored. Please see the Client/Server Overview and Add New Functionality section for more details.

We will demonstrate how to deploy your OpenUSD content to NVIDIA’s Graphics Delivery Network (GDN), enabling visionOS users to experience your digital twin without requiring a powerful workstation.

Creating hybrid applications for Apple Vision Pro involves implementing multiple SDKs and establishing communication between platforms. Omniverse handles rendering and data manipulation, while the Vision Pro sends inputs (head pose, JSON events) to the Omniverse server. These inputs trigger scene logic created using ActionGraph and Python, allowing real-time updates to the stage, such as changing prim variants or user locations.
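To make the event flow above concrete, here is a minimal sketch of how a server-side Python handler might parse a JSON event from the client and apply a variant change. It is illustrative only: the event schema (`type`, `prim`, `variantSet`, `variant`), the `handle_client_event` function, and the prim path are assumptions made for this example, not the framework's actual message format or API. A plain dictionary stands in for the USD stage so the sketch runs without Omniverse installed.

```python
import json

# Stand-in for stage state: {prim path: {variant set name: selected variant}}.
# In a real Kit application this would be the USD stage, not a dict.
stage_variants = {"/World/Purse": {"leather": "black"}}


def handle_client_event(message: str) -> bool:
    """Parse one JSON event from the client; return True if it was applied.

    The schema used here is hypothetical and chosen only for illustration.
    """
    try:
        event = json.loads(message)
    except json.JSONDecodeError:
        return False  # ignore malformed input rather than crash the handler

    if not isinstance(event, dict) or event.get("type") != "setVariant":
        return False

    prim_path = event.get("prim")
    variant_set = event.get("variantSet")
    variant = event.get("variant")
    if not all(isinstance(v, str) for v in (prim_path, variant_set, variant)):
        return False

    # Only update variant sets that already exist on a known prim.
    known_sets = stage_variants.get(prim_path)
    if known_sets is None or variant_set not in known_sets:
        return False

    known_sets[variant_set] = variant
    return True
```

For example, passing `'{"type": "setVariant", "prim": "/World/Purse", "variantSet": "leather", "variant": "brown"}'` to `handle_client_event` updates the stand-in state for the purse. In an actual Kit application, the dictionary update would be replaced by a call on the USD stage, and the handler would be wired to whatever event mechanism the server exposes.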

The client side uses native visionOS gestures and SwiftUI for interaction. Custom UI elements (like leather swatch thumbnails) trigger commands that the Omniverse server processes with low latency.

Development can be done locally using a workstation, Mac, and Vision Pro. For production deployment, package your USD data into a zip file and publish it to GDN, where a specific XR machine configuration is available for streaming to Vision Pro users.
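As a rough illustration of the packaging step, the sketch below zips a directory of USD content with Python's standard `zipfile` module while preserving relative paths. The function name `package_usd_directory` and the directory layout are assumptions made for this example; this section does not specify the archive layout that GDN expects, so treat this as a generic starting point rather than the required procedure.

```python
import zipfile
from pathlib import Path


def package_usd_directory(source_dir: str, zip_path: str) -> list[str]:
    """Zip every file under source_dir, keeping relative paths.

    Returns the archived names in sorted order. The helper and its
    layout are hypothetical; adapt them to your publishing requirements.
    """
    source = Path(source_dir)
    target = Path(zip_path).resolve()
    archived = []
    with zipfile.ZipFile(zip_path, "w", compression=zipfile.ZIP_DEFLATED) as archive:
        for path in sorted(source.rglob("*")):
            # Skip directories, and skip the archive itself if it lives in source_dir.
            if path.is_file() and path.resolve() != target:
                name = path.relative_to(source).as_posix()
                archive.write(path, arcname=name)
                archived.append(name)
    return archived
```

Storing paths relative to the content root keeps references between USD layers and their textures intact after the archive is unpacked, provided those references were authored as relative paths.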

This example is made up of several consecutive steps:

1. Build an Omniverse Kit application that opens and streams your USD content, and verify that the Kit application is ready to stream to the headset.
2. Build the Purse Configurator visionOS application and run it in the visionOS simulator in Xcode.
3. Establish a connection between the visionOS application and the Omniverse Kit application.
4. Learn how the server and client communicate, and add a new feature to the experience.
5. Rebuild and test the new feature on the device.