Viewport API#

The ViewportAPI object is used to access and control aspects of the renderer, which will then push images into the backing texture.

It is accessed via the ViewportWidget.viewport_api property which returns a Python weakref.proxy object.

A weakref is used so that a Renderer and Texture aren’t kept alive merely because client code is holding a reference to one of these objects. That is, the lifetime of a Viewport is controlled by the creator of the Viewport and cannot be extended beyond the usage they expect.

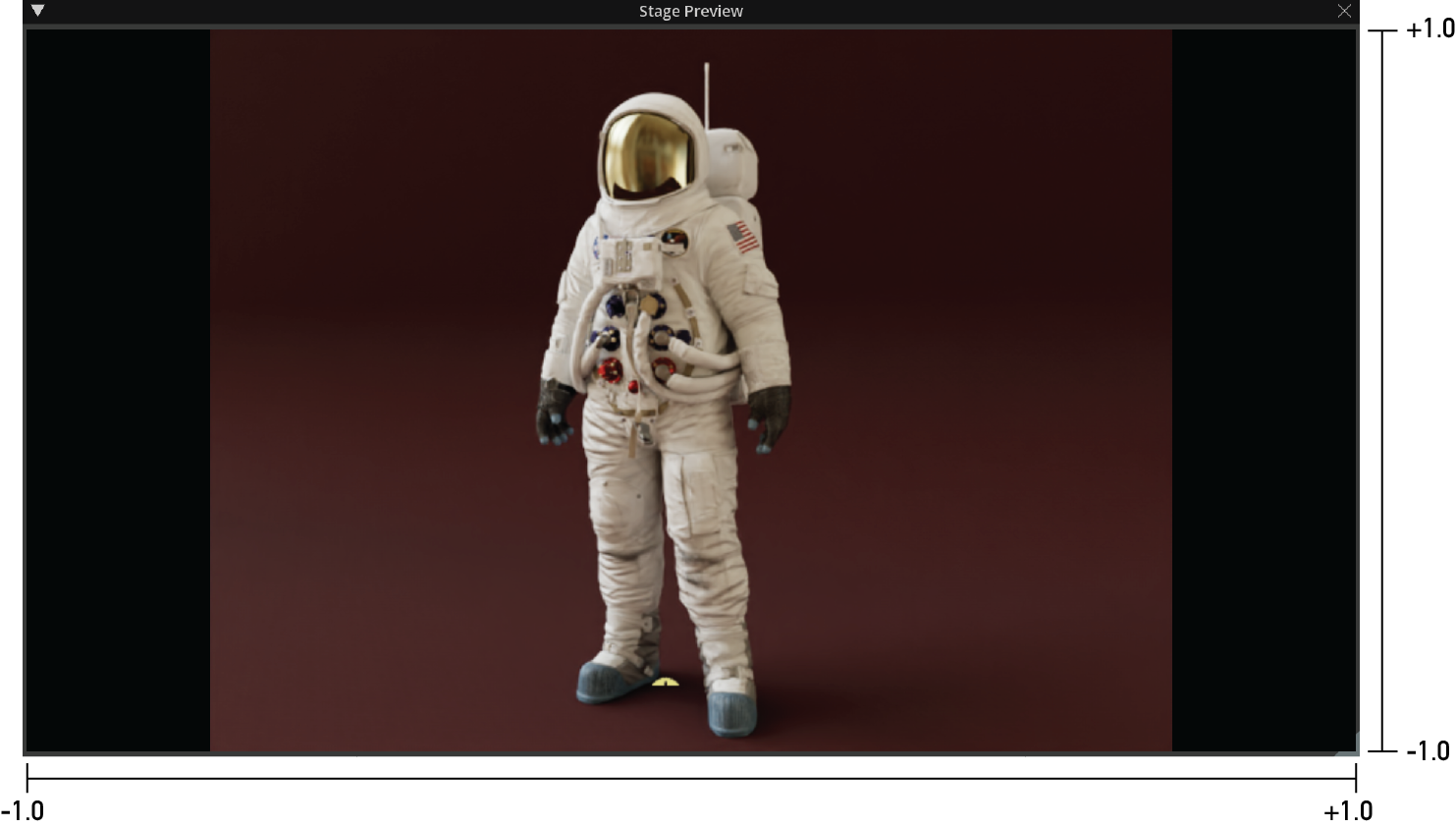

With our simple omni.kit.viewport.stage_preview example, we’ll now run through some cases of directly controlling the render,

similar to what the Content context-menu does.

from omni.kit.viewport.stage_preview.window import StagePreviewWindow

# Viewport widget can be setup to fill the frame with 'fill_frame', or to a constant resolution (x, y).

resolution = 'fill_frame' or (640, 480) # Will use 'fill_frame'

# Give the Window a unique name, the window-size, and forward the resolution to the Viewport itself

preview_window = StagePreviewWindow('My Stage Preview', usd_context_name='MyStagePreviewWindowContext',

window_width=640, window_height=480, resolution=resolution)

# Open a USD file in the UsdContext that the preview_window is using

preview_window.open_stage('http://omniverse-content-production.s3-us-west-2.amazonaws.com/Samples/Astronaut/Astronaut.usd')

# Save an easy-to-use reference to the ViewportAPI object

viewport_api = preview_window.preview_viewport.viewport_api

Camera#

The camera being used to render the image is controlled with the camera_path property.

It will return and is settable with an Sdf.Path.

The property will also accept a string, but it is slightly more efficient to set with an Sdf.Path if you have one available.

print(viewport_api.camera_path)

# => '/Root/LongView'

# Change the camera to view through the implicit Front camera

viewport_api.camera_path = '/OmniverseKit_Front'

# Change the camera to view through the implicit Perspective camera

viewport_api.camera_path = '/OmniverseKit_Persp'

Filling the canvas#

Control whether the texture-resolution should be adjusted based on the parent object’s ui-size with the fill_frame property.

It will return and is settable with a bool.

print(viewport_api.fill_frame)

# => True

# Disable the automatic resolution

viewport_api.fill_frame = False

# And give it an explicit resolution

viewport_api.resolution = (640, 480)

Resolution#

Resolution of the underlying ViewportTexture can be queried and set via the resolution property.

It will return and is settable with a tuple representing the (x, y) resolution of the rendered image.

print(viewport_api.resolution)

# => (640, 480)

# Change the render output resolution to a 512 x 512 texture

viewport_api.resolution = (512, 512)

SceneView#

For simplicity, the ViewportAPI can push both view and projection matrices into an omni.ui.scene.SceneView.model.

Using these methods is not required; they are provided as a convenient way to auto-manage the relationship between the two (particularly for stage-independent camera, resolution, and widget-size changes), while also leaving room for future expansion, where a SceneView may be locked to the last delivered rendered frame rather than the current implementation, which pushes changes into the Renderer and the SceneView simultaneously.

add_scene_view#

Add an omni.ui.scene.SceneView to the list of models that the Viewport will notify with changes to view and projection matrices.

The Viewport will only retain a weak-reference to the provided SceneView to avoid extending the lifetime of the SceneView itself.

A remove_scene_view method is available if you need to dynamically change the list of models to be notified.

import omni.ui.scene

scene_view = omni.ui.scene.SceneView()

# Pass the real object, as a weak-reference will be retained

viewport_api.add_scene_view(scene_view)

remove_scene_view#

Remove the omni.ui.scene.SceneView from the list of models that the Viewport will notify of changes to view and projection matrices.

Because the Viewport only retains a weak-reference to the SceneView, calling this explicitly isn’t mandatory, but can be

used to dynamically change the list of SceneView objects whose model should be updated.

viewport_api.remove_scene_view(scene_view)
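
The weak-reference bookkeeping described for add_scene_view and remove_scene_view can be pictured with a small standard-library sketch. Everything here (`FakeSceneView`, `FakeViewport`, `push_matrices`) is a hypothetical stand-in written for illustration; how the real Viewport stores and notifies its SceneViews is not specified in this document.

```python
import gc
import weakref


class FakeSceneView:
    """Hypothetical stand-in for omni.ui.scene.SceneView."""

    def __init__(self):
        self.view = None
        self.projection = None


class FakeViewport:
    """Hypothetical stand-in that notifies registered scene views."""

    def __init__(self):
        # Only weak references are stored, so registration never extends a lifetime
        self._scene_views = weakref.WeakSet()

    def add_scene_view(self, scene_view):
        self._scene_views.add(scene_view)

    def remove_scene_view(self, scene_view):
        self._scene_views.discard(scene_view)

    def push_matrices(self, view, projection):
        """Update every scene view that is still alive; return how many were updated."""
        count = 0
        for scene_view in list(self._scene_views):
            scene_view.view, scene_view.projection = view, projection
            count += 1
        return count


viewport = FakeViewport()
first, second = FakeSceneView(), FakeSceneView()
viewport.add_scene_view(first)
viewport.add_scene_view(second)
notified_both = viewport.push_matrices('view', 'projection')

viewport.remove_scene_view(first)  # explicit removal
notified_after_remove = viewport.push_matrices('view', 'projection')

del second    # implicit removal: the only strong reference goes away
gc.collect()
notified_after_delete = viewport.push_matrices('view', 'projection')
```

In this sketch, explicit removal and simply dropping the last strong reference both take a SceneView out of the notification list, which is why calling remove_scene_view is optional.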

Additional Accessors#

The ViewportAPI provides a few additional read-only accessors for convenience:

usd_context_name#

viewport_api.usd_context_name => str

# Returns the name of the UsdContext the Viewport is bound to.

usd_context#

viewport_api.usd_context => omni.usd.UsdContext

# Returns the actual UsdContext the Viewport is bound to, essentially `omni.usd.get_context(self.usd_context_name)`.

stage#

viewport_api.stage => Usd.Stage

# Returns the `Usd.Stage` the ViewportAPI is bound to, essentially: `omni.usd.get_context(self.usd_context_name).get_stage()`.

projection#

viewport_api.projection => Gf.Matrix4d

# Returns the Gf.Matrix4d of the projection. This may differ from the projection of the `Usd.Camera` based on how the Viewport is placed in the parent widget.

transform#

viewport_api.transform => Gf.Matrix4d

# Return the Gf.Matrix4d of the transform for the `Usd.Camera` in world-space.

view#

viewport_api.view => Gf.Matrix4d

# Return the Gf.Matrix4d of the inverse-transform for the `Usd.Camera` in world-space.

time#

viewport_api.time => Usd.TimeCode

# Return the Usd.TimeCode that the Viewport is using.

Space Mapping#

The ViewportAPI natively uses NDC coordinates to interop with the payloads from omni.ui.scene gestures.

To understand where these coordinates are in 3D-space for the ViewportTexture, two properties can be used to get a Gf.Matrix4d to convert between NDC-space and World-space.

origin = (0, 0, 0)

origin_screen = viewport_api.world_to_ndc.Transform(origin)

print(f'Origin is at {origin_screen}, in screen-space')

origin = viewport_api.ndc_to_world.Transform(origin_screen)

print(f'Converted back to world-space, origin is {origin}')
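
For readers who want to see what such a matrix round-trip involves, the following is a minimal pure-Python sketch of transforming a point by a 4x4 matrix. It assumes the row-vector convention (the point is multiplied on the left of the matrix) followed by a divide by the resulting w component, which is our understanding of how Gf.Matrix4d.Transform behaves; treat that as an assumption. The `transform_point` helper and the example matrices are hypothetical and stand in for world_to_ndc and ndc_to_world.

```python
def transform_point(matrix, point):
    """Transform a 3D point by a 4x4 matrix (row-vector convention),
    then divide by the resulting w component (the perspective divide)."""
    x, y, z = point
    row = (x, y, z, 1.0)
    out = [sum(row[i] * matrix[i][j] for i in range(4)) for j in range(4)]
    w = out[3]
    if w == 0.0:
        raise ValueError('point maps to infinity (w == 0)')
    return (out[0] / w, out[1] / w, out[2] / w)


# With row vectors, the translation lives in the last row of the matrix
translate = [
    [1.0, 0.0, 0.0, 0.0],
    [0.0, 1.0, 0.0, 0.0],
    [0.0, 0.0, 1.0, 0.0],
    [1.0, 2.0, 3.0, 1.0],
]
inverse_translate = [
    [1.0, 0.0, 0.0, 0.0],
    [0.0, 1.0, 0.0, 0.0],
    [0.0, 0.0, 1.0, 0.0],
    [-1.0, -2.0, -3.0, 1.0],
]

origin = (0.0, 0.0, 0.0)
moved = transform_point(translate, origin)             # forward mapping
restored = transform_point(inverse_translate, moved)   # and back again
print(moved, restored)
```

The two example matrices are exact inverses of each other, so the point returns to where it started, mirroring the world-to-NDC-and-back example above.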

world_to_ndc#

viewport_api.world_to_ndc => Gf.Matrix4d

# Returns a Gf.Matrix4d to move from World/Scene space into NDC space

ndc_to_world#

viewport_api.ndc_to_world => Gf.Matrix4d

# Returns a Gf.Matrix4d to move from NDC space into World/Scene space

World queries#

While being able to move between these spaces is useful, for the most part we’ll be more interested in using this to inspect the scene that is being rendered. That means we’ll need to move from an NDC coordinate into the pixel-space of our rendered image.

A quick breakdown of the three spaces in use:

The native NDC space that works with omni.ui.scene gestures can be thought of as a cartesian space centered on the rendered image.

We can easily move to a 0.0 - 1.0 uv space on the rendered image.

And finally expand that 0.0 - 1.0 uv space into pixel space.
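
The three steps above can be sketched in a few lines of plain Python. This is only an illustration under stated assumptions: NDC is taken to span -1.0 to 1.0 on both axes with +y pointing up, the uv origin is taken to be the top-left of the image, and the texture is assumed to fill the widget exactly. The helper names are hypothetical; the ViewportAPI’s own mapping may differ, for example in how it handles a texture that does not fill its parent widget.

```python
def ndc_to_uv(ndc_x, ndc_y):
    """Map NDC (assumed -1..1, +y up) to uv (0..1, origin assumed top-left)."""
    u = (ndc_x + 1.0) * 0.5
    v = (1.0 - ndc_y) * 0.5  # flip y so that v grows downward like image rows
    return u, v


def uv_to_pixel(u, v, resolution):
    """Expand uv into integer pixel coordinates, clamped to the image."""
    width, height = resolution
    px = max(0, min(int(u * width), width - 1))
    py = max(0, min(int(v * height), height - 1))
    return px, py


def sketch_ndc_to_texture_pixel(ndc, resolution):
    """Return ((px, py), in_bounds) for an NDC coordinate."""
    u, v = ndc_to_uv(*ndc)
    in_bounds = 0.0 <= u <= 1.0 and 0.0 <= v <= 1.0
    return uv_to_pixel(u, v, resolution), in_bounds


center = sketch_ndc_to_texture_pixel((0.0, 0.0), (640, 480))
top_left = sketch_ndc_to_texture_pixel((-1.0, 1.0), (640, 480))
outside = sketch_ndc_to_texture_pixel((1.5, 0.0), (640, 480))
print(center, top_left, outside)
```

The boolean mirrors the idea that we can tell immediately whether a coordinate falls outside the rendered image, before asking the renderer anything.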


Now that we know how to map between the ui and texture space, we can use it to convert between screen and 3D-space.

More importantly, we can use it to actually query the backing ViewportTexture about objects and locations in the scene.

request_query#

viewport_api.request_query(pixel_coordinate: (x, y), query_completed: callable) => None

# Query a pixel co-ordinate with a callback to be executed when the query completes

# Get the mouse position from within an omni.ui.scene gesture

mouse_ndc = self.sender.gesture_payload.mouse

# Convert the ndc-mouse to pixel-space in our ViewportTexture

mouse, in_viewport = viewport_api.map_ndc_to_texture_pixel(mouse_ndc)

# We know immediately if the mapping is outside the ViewportTexture

if not in_viewport:

    return

# Inside the ViewportTexture, create a callback and query the 3D world

def query_completed(path, pos, *args):

    if not path:

        print('No object')

    else:

        print(f"Object '{path}' hit at {pos}")

viewport_api.request_query(mouse, query_completed)

Asynchronous waiting#

Some properties and API calls of the Viewport won’t take effect immediately after control-flow has returned to the caller. For example, setting the camera-path or resolution won’t immediately deliver frames from that Camera at the new resolution. There are two methods available to asynchronously wait for the changes to make their way through the pipeline.

wait_for_render_settings_change#

Asynchronously wait until a render-settings change has been absorbed by the backend. This can be useful after changing the camera, resolution, or render-product.

my_custom_product = '/Render/CustomRenderProduct'

viewport_api.render_product_path = my_custom_product

settings_changed = await viewport_api.wait_for_render_settings_change()

print('settings_changed', settings_changed)

wait_for_rendered_frames#

Asynchronously wait until a number of frames have been delivered. Default behavior is to wait for delivery of 1 frame, but the function accepts an optional argument for an additional number of frames to wait on.

await viewport_api.wait_for_rendered_frames(10)

print('Waited for 10 frames')
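
Because both methods must be awaited, they need to be called from inside a coroutine. The sketch below shows that pattern with a hypothetical `FakeViewportAPI` stand-in, so that it can run outside of Kit. The return values, the `additional_frames` parameter name, and the "one frame plus the additional count" behavior are assumptions taken from the descriptions above, not a statement of the real implementation.

```python
import asyncio


class FakeViewportAPI:
    """Hypothetical stand-in that mimics only the awaitable calls described above."""

    def __init__(self):
        self.resolution = (1280, 720)
        self.frames_delivered = 0

    async def wait_for_render_settings_change(self):
        await asyncio.sleep(0)  # pretend the backend absorbed the change
        return True             # assumed return value for this sketch

    async def wait_for_rendered_frames(self, additional_frames=0):
        # Assumed behavior: wait for one frame plus any additional frames
        for _ in range(1 + additional_frames):
            await asyncio.sleep(0)
            self.frames_delivered += 1
        return True


async def change_resolution_and_wait(viewport_api, resolution, frames=0):
    """Set a new resolution, then wait until frames at that resolution arrive."""
    viewport_api.resolution = resolution
    settings_changed = await viewport_api.wait_for_render_settings_change()
    await viewport_api.wait_for_rendered_frames(frames)
    return settings_changed


fake_viewport = FakeViewportAPI()
result = asyncio.run(change_resolution_and_wait(fake_viewport, (512, 512), frames=2))
print(result, fake_viewport.frames_delivered)
```

Here asyncio.run is used only so the sketch is standalone. Inside an application that already runs its own event loop, the coroutine would more likely be scheduled with something like asyncio.ensure_future instead.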

Capturing Viewport frames#

To access the state of the Viewport’s next frame and any AOVs it may be rendering, you can schedule a capture with the schedule_capture method.

There are a variety of default capturing delegates in the omni.kit.widget.viewport.capture module that can handle writing to disk or accessing the AOV’s buffer.

Because capture is asynchronous, it will likely not have occurred when control-flow has returned to the caller of schedule_capture.

To wait until it has completed, the returned object has a wait_for_result method that can be used.

schedule_capture#

viewport_api.schedule_capture(capture_delegate: Capture) -> Capture

from omni.kit.widget.viewport.capture import FileCapture

capture = viewport_api.schedule_capture(FileCapture(image_path))

captured_aovs = await capture.wait_for_result()

if captured_aovs:

    print(f'AOV "{captured_aovs[0]}" was written to "{image_path}"')

else:

    print(f'No image was written to "{image_path}"')