PYTHON API

Core Functions

- omni.replicator.core.get_global_seed()

Return the global seed value.

- Returns

(int) Seed value.

- omni.replicator.core.set_global_seed(seed: int)

Set a global seed.

- Parameters

seed – Seed to use as initialization for the pseudo-random number generator. Seed is expected to be a non-negative integer.
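
The effect of a fixed seed can be illustrated without Replicator. The sketch below uses Python's standard random module as a stand-in for the pseudo-random number generator; the helper name sample_positions is hypothetical and is not part of omni.replicator.core. It only shows the general property that the same non-negative integer seed reproduces the same sequence.

```python
import random


def sample_positions(seed: int, count: int = 3):
    """Draw `count` pseudo-random XYZ positions from a generator seeded with `seed`.

    Stand-in for illustration only: this uses Python's `random` module,
    not Replicator's generator.
    """
    if not isinstance(seed, int) or seed < 0:
        raise ValueError("seed is expected to be a non-negative integer")
    rng = random.Random(seed)
    return [tuple(rng.uniform(-100, 100) for _ in range(3)) for _ in range(count)]


# The same seed reproduces the same sequence; a different seed does not.
run_a = sample_positions(42)
run_b = sample_positions(42)
run_c = sample_positions(43)
print(run_a == run_b)
print(run_a == run_c)
```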

- omni.replicator.core.new_layer(name: str = None)

Create a new authoring layer context. Use new_layer to keep Replicator changes contained in a single layer. If a layer of the same name already exists, the layer will be cleared before new changes are applied.

- Parameters

name – Name of the layer to be created. If omitted, the name “Replicator” is used.

Example

    >>> import omni.replicator.core as rep
    >>> with rep.new_layer():
    ...     rep.create.cone(count=100, position=rep.distribution.uniform((-100,-100,-100),(100,100,100)))

Create

create methods are helpers to put objects onto the USD stage.

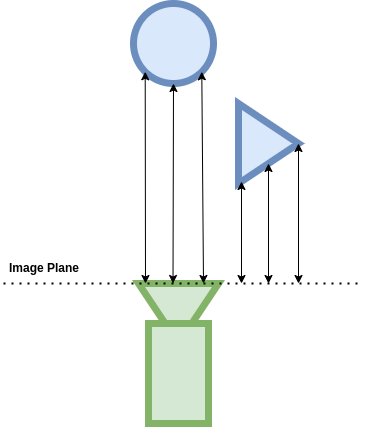

- omni.replicator.core.create.render_product(camera: Union[ReplicatorItem, str, List[str], Path, List[Path]], resolution: Tuple[int, int], force_new: bool = False, name: Optional[Union[str, List[str]]] = None) Union[str, List]

Create a render product. A RenderProduct describes images or other file-like artifacts produced by a render, such as rgb (LdrColor), normals, depth, etc. If a render product already exists with the same resolution and camera attached, it is returned. If no matching render product is found or if force_new is set to True, a new render product is created.

Note: When using Viewport 2.0, viewports are not generated to draw the render product on screen.

Note: Render products can utilize a large amount of VRAM. Render products no longer in use should be destroyed.

- Parameters

camera – The camera to attach to the render product. If a list of cameras is provided, a list of render products is created.

resolution – (width, height) resolution of the render product

force_new – If True, force creation of a new render product. If False, existing render products will be re-used if currently assigned to the same camera and of the same resolution. Is overridden to True if a name is provided.

name – Optionally specify the name(s) of the render product(s). Name must produce a valid USD path. If no name is provided, defaults to Replicator. The render product will be created at the following path within the Session Layer: /Render/OmniverseKit/HydraTextures/<name>. If multiple cameras are provided or if a render product of the specified name already exists, a _<num> suffix is added starting at _01. If specifying unique names for multiple cameras, name can be supplied as a list of strings of the same length as camera.

Example

    >>> import omni.replicator.core as rep
    >>> render_product = rep.create.render_product(rep.create.camera(), resolution=(1024, 1024), name="MyRenderProduct")
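
The naming rule described for the name parameter can be sketched in plain Python. This illustrates the documented behaviour and is not Replicator's implementation: the function render_product_paths, the way existing names are tracked, and the two-digit zero padding beyond _01 are assumptions. The case where name is a list of strings is not covered.

```python
from typing import Iterable, List, Optional

# Path quoted in the `name` parameter description.
BASE_PATH = "/Render/OmniverseKit/HydraTextures"


def render_product_paths(
    num_cameras: int,
    name: Optional[str] = None,
    existing: Iterable[str] = (),
) -> List[str]:
    """Return hypothetical render product paths for `num_cameras` cameras."""
    base = name if name is not None else "Replicator"
    taken = set(existing)
    paths = []
    counter = 1
    for _ in range(num_cameras):
        candidate = base
        # A suffix is needed when several cameras share one name
        # or when the plain name is already in use.
        if num_cameras > 1 or candidate in taken:
            candidate = f"{base}_{counter:02d}"
            while candidate in taken:
                counter += 1
                candidate = f"{base}_{counter:02d}"
        taken.add(candidate)
        paths.append(f"{BASE_PATH}/{candidate}")
    return paths


print(render_product_paths(1, name="MyRenderProduct"))
print(render_product_paths(2))
```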

- omni.replicator.core.create.register(fn: Callable[[...], Union[ReplicatorItem, Node]], override: bool = True, fn_name: Optional[str] = None) None

Register a new function under omni.replicator.core.create. Extend the default capabilities of omni.replicator.core.create by registering new functionality. New functions must return a ReplicatorItem or an OmniGraph node.

- Parameters

fn – A function that returns a ReplicatorItem or an OmniGraph node.

override – If True, will override existing functions of the same name. If False, an error is raised.

fn_name – Optional, specify the registration name. If not specified, the function name is used. fn_name must only contain letters (a-z), numbers (0-9), or underscores (_), and cannot start with a number or contain any spaces.

Example

    >>> import omni.replicator.core as rep
    >>> def light_cluster(num_lights: int = 10):
    ...     lights = rep.create.light(
    ...         light_type="sphere",
    ...         count=num_lights,
    ...         position=rep.distribution.uniform((-500, -500, -500), (500, 500, 500)),
    ...         intensity=rep.distribution.uniform(10000, 20000),
    ...         temperature=rep.distribution.uniform(1000, 10000),
    ...     )
    ...     return lights
    >>> rep.create.register(light_cluster)
    >>> lights = rep.create.light_cluster(50)
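
The fn_name constraint can be checked up front with a regular expression. The following is a minimal sketch, not the check Replicator itself performs; the helper is_valid_fn_name is hypothetical, and accepting upper-case letters is an assumption since the description only mentions a-z.

```python
import re

# Letters, digits and underscores only; must not start with a digit.
# Accepting upper-case letters is an assumption of this sketch.
_FN_NAME_PATTERN = re.compile(r"[A-Za-z_][A-Za-z0-9_]*")


def is_valid_fn_name(fn_name: str) -> bool:
    """Return True if `fn_name` satisfies the naming rule described for `fn_name`."""
    return _FN_NAME_PATTERN.fullmatch(fn_name) is not None


for candidate in ("light_cluster", "2lights", "light cluster", "light-cluster"):
    print(candidate, is_valid_fn_name(candidate))
```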

Lights

- omni.replicator.core.create.light(position: Union[ReplicatorItem, float, Tuple[float]] = None, scale: Union[ReplicatorItem, float, Tuple[float]] = None, rotation: Union[ReplicatorItem, float, Tuple[float]] = None, look_at: Union[ReplicatorItem, str, Path, usdrt.Sdf.Path, Tuple[float, float, float], List[Union[str, Path, usdrt.Sdf.Path]]] = None, look_at_up_axis: Union[ReplicatorItem, Tuple[float]] = None, light_type: str = 'Distant', color: Union[ReplicatorItem, Tuple[float, float, float]] = (1.0, 1.0, 1.0), intensity: Union[ReplicatorItem, float] = 1000.0, exposure: Union[ReplicatorItem, float] = None, temperature: Union[ReplicatorItem, float] = 6500, texture: Union[ReplicatorItem, str] = None, count: int = 1, name: str = None, parent: Union[ReplicatorItem, str, Path, Prim] = None) ReplicatorItem

Create a light

- Parameters

position – XYZ coordinates in world space. If a single value is provided, all axes will be set to that value. Ignored for dome and distant light types.

scale – Scaling factors for XYZ axes. If a single value is provided, all axes will be set to that value. Ignored for dome and distant light types.

rotation – Euler angles in degrees in XYZ order. If a single value is provided, all axes will be set to that value.

look_at – Look-at target, specified either as a ReplicatorItem, a prim path, or world coordinates. If multiple prims are set, the target point will be the mean of their positions.

look_at_up_axis – Look-at up axis of the created prim.

light_type – Light type. Select from [“cylinder”, “disk”, “distant”, “dome”, “rect”, “sphere”]

color – Light color in (R,G,B). Float values from [0.0-1.0]

intensity – Light intensity. Scales the power of the light linearly.

exposure – Scales the power of the light exponentially as a power of 2. The result is multiplied with intensity.

temperature – Color temperature in Kelvin indicating the white point. Lower values are warmer, higher values are cooler. Valid range [1000-10000].

texture – Image texture to use for dome light such as an HDR (High Dynamic Range) intended for IBL (Image Based Lighting). Ignored for other light types.

count – Number of objects to create.

name – Name of the light.

parent – Optional parent prim path. The object will be created as a child of this prim.

Examples

    >>> import omni.replicator.core as rep
    >>> distance_light = rep.create.light(
    ...     rotation=rep.distribution.uniform((0,-180,-180), (0,180,180)),
    ...     intensity=rep.distribution.normal(10000, 1000),
    ...     temperature=rep.distribution.normal(6500, 1000),
    ...     light_type="distant")
    >>> dome_light = rep.create.light(
    ...     rotation=rep.distribution.uniform((0,-180,-180), (0,180,180)),
    ...     texture=rep.distribution.choice(rep.example.TEXTURES),
    ...     light_type="dome")
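
The intensity and exposure descriptions combine into a single scale factor: intensity multiplied by 2 raised to the exposure. The sketch below works through that arithmetic. The helper effective_light_scale is hypothetical, and treating an unset exposure as zero stops is an assumption.

```python
from typing import Optional


def effective_light_scale(intensity: float = 1000.0, exposure: Optional[float] = None) -> float:
    """Combine linear intensity with exposure expressed in stops (powers of 2)."""
    # Assumption: an unset exposure behaves like 0 stops (no change).
    stops = 0.0 if exposure is None else exposure
    return intensity * (2.0 ** stops)


print(effective_light_scale(1000.0))        # 1000.0
print(effective_light_scale(1000.0, 1.0))   # 2000.0
print(effective_light_scale(1000.0, -2.0))  # 250.0
```

Each additional stop of exposure doubles the result, so exposure is convenient for coarse changes while intensity handles fine linear adjustments.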

Misc

- omni.replicator.core.create.group(items: List[Union[ReplicatorItem, str, Path]], semantics: List[Tuple[str, str]] = None, name=None) ReplicatorItem

Group assets into a common node. Grouping assets makes it easier and faster to apply randomizations to multiple assets simultaneously.

- Parameters

items – Assets to be grouped together.

semantics – List of semantic type-label pairs.

name (optional) – A name for the given group node

Example

    >>> import omni.replicator.core as rep
    >>> cones = [rep.create.cone() for _ in range(100)]
    >>> group = rep.create.group(cones, semantics=[("class", "cone")])

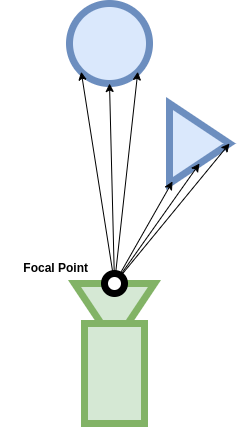

Cameras

- omni.replicator.core.create.camera(position: Union[ReplicatorItem, float, Tuple[float]] = None, rotation: Union[ReplicatorItem, float, Tuple[float]] = None, look_at: Union[ReplicatorItem, str, Path, usdrt.Sdf.Path, Tuple[float, float, float], List[Union[str, Path, usdrt.Sdf.Path]]] = None, look_at_up_axis: Union[ReplicatorItem, Tuple[float]] = None, focal_length: Union[ReplicatorItem, float] = 24.0, focus_distance: Union[ReplicatorItem, float] = 400.0, f_stop: Union[ReplicatorItem, float] = 0.0, horizontal_aperture: Union[ReplicatorItem, float] = 20.955, horizontal_aperture_offset: Union[ReplicatorItem, float] = 0.0, vertical_aperture_offset: Union[ReplicatorItem, float] = 0.0, clipping_range: Union[ReplicatorItem, Tuple[float, float]] = (1.0, 1000000.0), projection_type: Union[ReplicatorItem, str] = 'pinhole', fisheye_nominal_width: Union[ReplicatorItem, float] = 1936.0, fisheye_nominal_height: Union[ReplicatorItem, float] = 1216.0, fisheye_optical_centre_x: Union[ReplicatorItem, float] = 970.94244, fisheye_optical_centre_y: Union[ReplicatorItem, float] = 600.37482, fisheye_max_fov: Union[ReplicatorItem, float] = 200.0, fisheye_polynomial_a: Union[ReplicatorItem, float] = 0.0, fisheye_polynomial_b: Union[ReplicatorItem, float] = 0.00245, fisheye_polynomial_c: Union[ReplicatorItem, float] = 0.0, fisheye_polynomial_d: Union[ReplicatorItem, float] = 0.0, fisheye_polynomial_e: Union[ReplicatorItem, float] = 0.0, fisheye_polynomial_f: Union[ReplicatorItem, float] = 0.0, fisheye_p0: Union[ReplicatorItem, float] = -0.00037, fisheye_p1: Union[ReplicatorItem, float] = -0.00074, fisheye_s0: Union[ReplicatorItem, float] = -0.00058, fisheye_s1: Union[ReplicatorItem, float] = -0.00022, fisheye_s2: Union[ReplicatorItem, float] = 0.00019, fisheye_s3: Union[ReplicatorItem, float] = -0.0002, cross_camera_reference_name: str = None, count: int = 1, parent: Union[ReplicatorItem, str, Path, Prim] = None, name: str = None) ReplicatorItem

Create a camera

- Parameters

position – XYZ coordinates in world space. If a single value is provided, all axes will be set to that value.

rotation – Euler angles in degrees in XYZ order. If a single value is provided, all axes will be set to that value.

look_at – Look-at target, specified either as a ReplicatorItem, a prim path, or world coordinates. If multiple prims are set, the target point will be the mean of their positions.

look_at_up_axis – Look-at up axis of the created prim.

focal_length – Physical focal length of the camera in units equal to 0.1 * world units.

focus_distance – Distance from the camera to the focus plane in world units.

f_stop – Lens aperture. Default 0.0 turns off focusing.

horizontal_aperture – Horizontal aperture in units equal to 0.1 * world units. Default simulates a 35mm spherical projection aperture.

horizontal_aperture_offset – Horizontal aperture offset in units equal to 0.1 * world units.

vertical_aperture_offset – Vertical aperture offset in units equal to 0.1 * world units.

clipping_range – (Near, Far) clipping distances of the camera in world units.

projection_type – Camera projection model. Select from [“pinhole”, “fisheye_polynomial”, “fisheyeOrthographic”, “fisheyeEquidistant”, “fisheyeEquisolid”, “fisheyeSpherical”, “fisheyeKannalaBrandtK3”, “fisheyeRadTanThinPrism”].

fisheye_nominal_width – Nominal width of fisheye lens model.

fisheye_nominal_height – Nominal height of fisheye lens model.

fisheye_optical_centre_x – Horizontal optical centre position of fisheye lens model.

fisheye_optical_centre_y – Vertical optical centre position of fisheye lens model.

fisheye_max_fov – Maximum field of view of fisheye lens model.

fisheye_polynomial_a – First polynomial coefficient of fisheye camera.

fisheye_polynomial_b – Second polynomial coefficient of fisheye camera.

fisheye_polynomial_c – Third polynomial coefficient of fisheye camera.

fisheye_polynomial_d – Fourth polynomial coefficient of fisheye camera.

fisheye_polynomial_e – Fifth polynomial coefficient of fisheye camera.

fisheye_polynomial_f – Sixth polynomial coefficient of fisheye camera.

fisheye_p0 – Distortion coefficient to calculate tangential distortion for rad tan thin prism camera.

fisheye_p1 – Distortion coefficient to calculate tangential distortion for rad tan thin prism camera.

fisheye_s0 – Distortion coefficient to calculate thin prism distortion for rad tan thin prism camera.

fisheye_s1 – Distortion coefficient to calculate thin prism distortion for rad tan thin prism camera.

fisheye_s2 – Distortion coefficient to calculate thin prism distortion for rad tan thin prism camera.

fisheye_s3 – Distortion coefficient to calculate thin prism distortion for rad tan thin prism camera.

count – Number of objects to create.

parent – Optional parent prim path. The camera will be created as a child of this prim.

name – Name of the camera

Example

    >>> import omni.replicator.core as rep
    >>> # Create camera
    >>> camera = rep.create.camera(
    ...     position=rep.distribution.uniform((0,0,0), (100, 100, 100)),
    ...     rotation=(45, 45, 0),
    ...     focus_distance=rep.distribution.normal(400.0, 100),
    ...     f_stop=1.8,
    ... )
    >>> # Attach camera to render product
    >>> render_product = rep.create.render_product(camera, resolution=(1024, 1024))
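
For the pinhole projection type, focal_length and horizontal_aperture together determine the horizontal field of view through the standard pinhole relation FOV = 2 * atan(aperture / (2 * focal_length)). Both parameters are given in the same units, so the ratio is unitless. The sketch below is a worked calculation only; the helper horizontal_fov_degrees is hypothetical and the relation does not apply to the fisheye projection types.

```python
import math


def horizontal_fov_degrees(focal_length: float = 24.0, horizontal_aperture: float = 20.955) -> float:
    """Horizontal field of view of an ideal pinhole camera, in degrees."""
    if focal_length <= 0:
        raise ValueError("focal_length must be positive")
    return math.degrees(2.0 * math.atan(horizontal_aperture / (2.0 * focal_length)))


# Defaults taken from the create.camera signature.
print(round(horizontal_fov_degrees(), 2))
# A longer focal length narrows the field of view.
print(round(horizontal_fov_degrees(focal_length=50.0), 2))
```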

- omni.replicator.core.create.stereo_camera(stereo_baseline: Union[ReplicatorItem, float], position: Optional[Union[ReplicatorItem, float, Tuple[float]]] = None, rotation: Optional[Union[ReplicatorItem, float, Tuple[float]]] = None, look_at: Optional[Union[ReplicatorItem, str, Path, usdrt.Sdf.Path, Tuple[float, float, float], List[Union[str, Path, usdrt.Sdf.Path]]]] = None, look_at_up_axis: Optional[Union[ReplicatorItem, Tuple[float]]] = None, focal_length: Union[ReplicatorItem, float] = 24.0, focus_distance: Union[ReplicatorItem, float] = 400.0, f_stop: Union[ReplicatorItem, float] = 0.0, horizontal_aperture: Union[ReplicatorItem, float] = 20.955, horizontal_aperture_offset: Union[ReplicatorItem, float] = 0.0, vertical_aperture_offset: Union[ReplicatorItem, float] = 0.0, clipping_range: Union[ReplicatorItem, Tuple[float, float]] = (1.0, 1000000.0), projection_type: Union[ReplicatorItem, str] = 'pinhole', fisheye_nominal_width: Union[ReplicatorItem, float] = 1936.0, fisheye_nominal_height: Union[ReplicatorItem, float] = 1216.0, fisheye_optical_centre_x: Union[ReplicatorItem, float] = 970.94244, fisheye_optical_centre_y: Union[ReplicatorItem, float] = 600.37482, fisheye_max_fov: Union[ReplicatorItem, float] = 200.0, fisheye_polynomial_a: Union[ReplicatorItem, float] = 0.0, fisheye_polynomial_b: Union[ReplicatorItem, float] = 0.00245, fisheye_polynomial_c: Union[ReplicatorItem, float] = 0.0, fisheye_polynomial_d: Union[ReplicatorItem, float] = 0.0, fisheye_polynomial_e: Union[ReplicatorItem, float] = 0.0, fisheye_polynomial_f: Union[ReplicatorItem, float] = 0.0, fisheye_p0: Union[ReplicatorItem, float] = -0.00037, fisheye_p1: Union[ReplicatorItem, float] = -0.00074, fisheye_s0: Union[ReplicatorItem, float] = -0.00058, fisheye_s1: Union[ReplicatorItem, float] = -0.00022, fisheye_s2: Union[ReplicatorItem, float] = 0.00019, fisheye_s3: Union[ReplicatorItem, float] = -0.0002, count: int = 1, name: Optional[str] = None, parent: Optional[Union[ReplicatorItem, str, Path, Prim]] = None) ReplicatorItem

Create a stereo camera pair.

- Parameters

stereo_baseline – Distance between stereo camera pairs.

position – XYZ coordinates in world space. If a single value is provided, all axes will be set to that value.

rotation – Euler angles in degrees in XYZ order. If a single value is provided, all axes will be set to that value.

look_at – Look-at target, specified either as a ReplicatorItem, a prim path, or as coordinates. If multiple prims are set, the target point will be the mean of their positions.

look_at_up_axis – Look-at up axis of the created prim.

focal_length – Physical focal length of the camera in units equal to 0.1 * world units.

focus_distance – Distance from the camera to the focus plane in world units.

f_stop – Lens aperture. Default 0.0 turns off focusing.

horizontal_aperture – Horizontal aperture in units equal to 0.1 * world units. Default simulates a 35mm spherical projection aperture.

horizontal_aperture_offset – Horizontal aperture offset in units equal to 0.1 * world units.

vertical_aperture_offset – Vertical aperture offset in units equal to 0.1 * world units.

clipping_range – (Near, Far) clipping distances of the camera in world units.

projection_type – Camera projection model. Select from [“pinhole”, “fisheye_polynomial”, “fisheyeOrthographic”, “fisheyeEquidistant”, “fisheyeEquisolid”, “fisheyeSpherical”, “fisheyeKannalaBrandtK3”, “fisheyeRadTanThinPrism”].

fisheye_nominal_width – Nominal width of fisheye lens model.

fisheye_nominal_height – Nominal height of fisheye lens model.

fisheye_optical_centre_x – Horizontal optical centre position of fisheye lens model.

fisheye_optical_centre_y – Vertical optical centre position of fisheye lens model.

fisheye_max_fov – Maximum field of view of fisheye lens model.

fisheye_polynomial_a – First component of fisheye polynomial (only valid for fisheye_polynomial projection type).

fisheye_polynomial_b – Second component of fisheye polynomial (only valid for fisheye_polynomial projection type).

fisheye_polynomial_c – Third component of fisheye polynomial (only valid for fisheye_polynomial projection type).

fisheye_polynomial_d – Fourth component of fisheye polynomial (only valid for fisheye_polynomial projection type).

fisheye_polynomial_e – Fifth component of fisheye polynomial (only valid for fisheye_polynomial projection type).

count – Number of objects to create.

name – Name of the cameras. _L and _R will be appended for Left and Right cameras, respectively.

parent – Optional parent prim path. The cameras will be created as children of this prim.

Example

    >>> import omni.replicator.core as rep
    >>> # Create stereo camera
    >>> stereo_camera_pair = rep.create.stereo_camera(
    ...     stereo_baseline=10,
    ...     position=(10, 10, 10),
    ...     rotation=(45, 45, 0),
    ...     focus_distance=rep.distribution.normal(400.0, 100),
    ...     f_stop=1.8,
    ... )
    >>> # Attach camera to render product
    >>> render_product = rep.create.render_product(stereo_camera_pair, resolution=(1024, 1024))
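
To make stereo_baseline and the _L / _R naming concrete, the sketch below lays out an unrotated pair in plain Python. It is an illustration under stated assumptions, not Replicator's implementation: the helper stereo_pair_layout is hypothetical, and both the symmetric split around the given position and the use of the X axis for the offset are assumptions. Rotation is ignored.

```python
from typing import Dict, Tuple

Vec3 = Tuple[float, float, float]


def stereo_pair_layout(
    name: str,
    stereo_baseline: float,
    position: Vec3 = (0.0, 0.0, 0.0),
) -> Dict[str, Vec3]:
    """Return hypothetical camera names and positions for an unrotated stereo pair."""
    half = stereo_baseline / 2.0
    x, y, z = position
    return {
        f"{name}_L": (x - half, y, z),  # left camera, shifted by half the baseline
        f"{name}_R": (x + half, y, z),  # right camera, shifted the other way
    }


print(stereo_pair_layout("Stereo", stereo_baseline=10, position=(10, 10, 10)))
```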

Materials

- omni.replicator.core.create.material_omnipbr(diffuse: Tuple[float] = None, diffuse_texture: str = None, roughness: float = None, roughness_texture: str = None, metallic: float = None, metallic_texture: str = None, specular: float = None, emissive_color: Tuple[float] = None, emissive_texture: str = None, emissive_intensity: float = 0.0, project_uvw: bool = False, semantics: List[Tuple[str, str]] = None, count: int = 1) ReplicatorItem

Create an OmniPBR Material

- Parameters

diffuse – Diffuse/albedo color in RGB colorspace

diffuse_texture – Path to diffuse texture

roughness – Material roughness in the range [0, 1]

roughness_texture – Path to roughness texture

metallic – Material metallic value in the range [0, 1]. Typically, metallic is assigned either 0.0 or 1.0

metallic_texture – Path to metallic texture

specular – Intensity of specular reflections in the range [0, 1]

emissive_color – Color of emissive light emanating from material in RGB colorspace

emissive_texture – Path to emissive texture

emissive_intensity – Emissive intensity of the material. Setting to 0.0 (default) disables emission.

project_uvw – When True, UV coordinates will be generated by projecting them from a coordinate system.

semantics – Assign semantics to material

count – Number of objects to create.

Example

    >>> import omni.replicator.core as rep
    >>> mat1 = rep.create.material_omnipbr(
    ...     diffuse=rep.distribution.uniform((0, 0, 0), (1, 1, 1)),
    ...     roughness=rep.distribution.uniform(0, 1),
    ...     metallic=rep.distribution.choice([0, 1]),
    ...     emissive_color=rep.distribution.uniform((0, 0, 0.5), (0, 0, 1)),
    ...     emissive_intensity=rep.distribution.uniform(0, 1000),
    ... )
    >>> mat2 = rep.create.material_omnipbr(
    ...     diffuse_texture=rep.distribution.choice(rep.example.TEXTURES),
    ...     roughness_texture=rep.distribution.choice(rep.example.TEXTURES),
    ...     metallic_texture=rep.distribution.choice(rep.example.TEXTURES),
    ...     emissive_texture=rep.distribution.choice(rep.example.TEXTURES),
    ...     emissive_intensity=rep.distribution.uniform(0, 1000),
    ... )
    >>> cone = rep.create.cone(material=mat1)
    >>> torus = rep.create.torus(material=mat2)

- omni.replicator.core.create.projection_material(proxy_prim: Union[ReplicatorItem, str, Path], semantics: List[Tuple[str, str]] = None, material: Union[ReplicatorItem, str, Path] = None, offset_scale: float = 0.01, input_prims: Union[ReplicatorItem, List[str]] = None, name: Optional[str] = None) ReplicatorItem

Project a texture onto a target prim.

ProjectPBRMaterial is used to facilitate these projections. The proxy prim is a prim used to control the position, rotation and scale of the projection. There can only be one proxy/projection pair, so a proxy prim can only modify a single projection. The projection will happen in the direction of the negative x-axis. This node only sets up the projection material; the rep.modify.projection_material node should be used to update the projection itself.

- Parameters

proxy_prim – The prims which will be used to manipulate the projection.

material – Projection material to apply to the projection. If not provided, use ‘ProjectPBRMaterial’.

semantics – Semantics to apply to the defect.

offset_scale – Scale factor when extruding target_prim points.

input_prims – The prim which will be projected on to. If using with syntax, this argument can be omitted.

name (optional) – A name for the given projection node.

Example

    >>> import omni.replicator.core as rep
    >>> torus = rep.create.torus()
    >>> cube = rep.create.cube(position=(50, 100, 0), rotation=(0, 0, 90), scale=(0.2, 0.2, 0.2))
    >>> sem = [('class', 'shape')]
    >>> with torus:
    ...     rep.create.projection_material(cube, sem)
    omni.replicator.core.create.projection_material

- omni.replicator.core.create.mdl_from_json(material_def: Dict = None, material_def_path: str = None) ReplicatorItem

Create an MDL ShaderGraph material defined in a JSON dictionary.

- Parameters

material_def – A dictionary object defining the MDL material graph.

material_def_path – A path to a JSON file to decode and generate an MDL material graph from.

Example

    >>> import omni.replicator.core as rep
    >>> gen_mat = rep.create.mdl_from_json(material_def=rep.example.MDL_JSON_EXAMPLE)
    >>> cube = rep.create.cube(material=gen_mat)

Shapes

- omni.replicator.core.create.cone(position: Union[ReplicatorItem, float, Tuple[float]] = None, scale: Union[ReplicatorItem, float, Tuple[float]] = None, pivot: Union[ReplicatorItem, Tuple[float]] = None, rotation: Union[ReplicatorItem, float, Tuple[float]] = None, look_at: Union[ReplicatorItem, str, Path, usdrt.Sdf.Path, Tuple[float, float, float], List[Union[str, Path, usdrt.Sdf.Path]]] = None, look_at_up_axis: Union[ReplicatorItem, Tuple[float]] = None, semantics: List[Tuple[str, str]] = None, material: Union[ReplicatorItem, Prim] = None, visible: bool = True, as_mesh: bool = True, count: int = 1, name: str = None, parent: Union[ReplicatorItem, str, Path, Prim] = None) ReplicatorItem

Create a cone

- Parameters

position – XYZ coordinates in world space. If a single value is provided, all axes will be set to that value.

scale – Scaling factors for XYZ axes. If a single value is provided, all axes will be set to that value.

pivot – Pivot that sets the center point of translate and rotate operation. Pivot values are normalized between [-1, 1] for each axis based on the prim’s axis aligned extents.

rotation – Euler angles in degrees in XYZ order. If a single value is provided, all axes will be set to that value.

look_at – Look-at target, specified either as a ReplicatorItem, a prim path, or world coordinates. If multiple prims are set, the target point will be the mean of their positions.

look_at_up_axis – Look-at up axis of the created prim.

semantics – List of semantic type-label pairs.

material – Material to attach to the cone.

visible – If False, the prim will be invisible. This is often useful when creating prims to use as bounds with other randomizers.

as_mesh – If False, create a Usd.Cone prim. If True, create a mesh.

count – Number of objects to create.

name – Name of the object.

parent – Optional parent prim path. The object will be created as a child of this prim.

Example

    >>> import omni.replicator.core as rep
    >>> cone = rep.create.cone(
    ...     position=rep.distribution.uniform((0,0,0), (100, 100, 100)),
    ...     scale=2,
    ...     rotation=(45, 45, 0),
    ...     semantics=[("class", "cone")],
    ... )
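
The normalized pivot used by the shape helpers can be pictured as a point inside the prim's axis-aligned bounding box. The sketch below assumes that -1 maps to the minimum extent, 1 to the maximum extent, and 0 to the center on each axis; that mapping and the helper pivot_to_point are assumptions made for illustration and are not confirmed by the description above.

```python
from typing import Tuple

Vec3 = Tuple[float, float, float]


def pivot_to_point(pivot: Vec3, extent_min: Vec3, extent_max: Vec3) -> Vec3:
    """Map a normalized pivot in [-1, 1] per axis to a point inside the bounding box."""
    point = []
    for p, lo, hi in zip(pivot, extent_min, extent_max):
        if not -1.0 <= p <= 1.0:
            raise ValueError("pivot components must lie in [-1, 1]")
        center = (lo + hi) / 2.0
        half_size = (hi - lo) / 2.0
        point.append(center + p * half_size)
    return tuple(point)


# Pivot at the center of the bottom face of a box spanning (-50, -50, -50) to (50, 50, 50).
print(pivot_to_point((0, -1, 0), (-50, -50, -50), (50, 50, 50)))  # (0.0, -50.0, 0.0)
```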

- omni.replicator.core.create.cube(position: Union[ReplicatorItem, float, Tuple[float]] = None, scale: Union[ReplicatorItem, float, Tuple[float]] = None, pivot: Union[ReplicatorItem, Tuple[float]] = None, rotation: Union[ReplicatorItem, float, Tuple[float]] = None, look_at: Union[ReplicatorItem, str, Path, usdrt.Sdf.Path, Tuple[float, float, float], List[Union[str, Path, usdrt.Sdf.Path]]] = None, look_at_up_axis: Union[ReplicatorItem, Tuple[float]] = None, semantics: List[Tuple[str, str]] = None, material: Union[ReplicatorItem, Prim] = None, visible: bool = True, as_mesh: bool = True, count: int = 1, name: str = None, parent: Union[ReplicatorItem, str, Path, Prim] = None) ReplicatorItem

Create a cube

- Parameters

position – XYZ coordinates in world space. If a single value is provided, all axes will be set to that value.

scale – Scaling factors for XYZ axes. If a single value is provided, all axes will be set to that value.

pivot – Pivot that sets the center point of translate and rotate operation. Pivot values are normalized between [-1, 1] for each axis based on the prim’s axis aligned extents.

rotation – Euler angles in degrees in XYZ order. If a single value is provided, all axes will be set to that value.

look_at – Look-at target, specified either as a ReplicatorItem, a prim path, or world coordinates. If multiple prims are set, the target point will be the mean of their positions.

look_at_up_axis – Look-at up axis of the created prim.

semantics – List of semantic type-label pairs.

material – Material to attach to the cube.

visible – If False, the prim will be invisible. This is often useful when creating prims to use as bounds with other randomizers.

as_mesh – If False, create a Usd.Cube prim. If True, create a mesh.

count – Number of objects to create.

name – Name of the object

parent – Optional parent prim path. The object will be created as a child of this prim.

Example

    >>> import omni.replicator.core as rep
    >>> cube = rep.create.cube(
    ...     position=rep.distribution.uniform((0,0,0), (100, 100, 100)),
    ...     scale=2,
    ...     rotation=(45, 45, 0),
    ...     semantics=[("class", "cube")],
    ... )
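
The look_at description states that when multiple prims are set, the target point is the mean of their positions. The arithmetic can be sketched as follows. The helper look_at_target is hypothetical, and how the prim positions are read from the stage is outside the scope of this sketch.

```python
from typing import Sequence, Tuple

Vec3 = Tuple[float, float, float]


def look_at_target(positions: Sequence[Vec3]) -> Vec3:
    """Return the per-axis mean of several world-space positions."""
    if not positions:
        raise ValueError("at least one position is required")
    n = len(positions)
    return tuple(sum(p[axis] for p in positions) / n for axis in range(3))


# Three prim positions; the look-at target is their centroid.
print(look_at_target([(0, 0, 0), (100, 0, 0), (50, 90, 30)]))  # (50.0, 30.0, 10.0)
```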

- omni.replicator.core.create.cylinder(position: Union[ReplicatorItem, float, Tuple[float]] = None, scale: Union[ReplicatorItem, float, Tuple[float]] = None, pivot: Union[ReplicatorItem, Tuple[float]] = None, rotation: Union[ReplicatorItem, float, Tuple[float]] = None, look_at: Union[ReplicatorItem, str, Path, usdrt.Sdf.Path, Tuple[float, float, float], List[Union[str, Path, usdrt.Sdf.Path]]] = None, look_at_up_axis: Union[ReplicatorItem, Tuple[float]] = None, semantics: List[Tuple[str, str]] = None, material: Union[ReplicatorItem, Prim] = None, visible: bool = True, as_mesh: bool = True, count: int = 1, name: str = None, parent: Union[ReplicatorItem, str, Path, Prim] = None) ReplicatorItem

Create a cylinder

- Parameters

position – XYZ coordinates in world space. If a single value is provided, all axes will be set to that value.

scale – Scaling factors for XYZ axes. If a single value is provided, all axes will be set to that value.

pivot – Pivot that sets the center point of translate and rotate operation. Pivot values are normalized between [-1, 1] for each axis based on the prim’s axis aligned extents.

rotation – Euler angles in degrees in XYZ order. If a single value is provided, all axes will be set to that value.

look_at – Look-at target, specified either as a ReplicatorItem, a prim path, or world coordinates. If multiple prims are set, the target point will be the mean of their positions.

look_at_up_axis – Look-at up axis of the created prim.

semantics – List of semantic type-label pairs.

material – Material to attach to the cylinder.

visible – If False, the prim will be invisible. This is often useful when creating prims to use as bounds with other randomizers.

as_mesh – If False, create a Usd.Cylinder prim. If True, create a mesh.

count – Number of objects to create.

name – Name of the object

parent – Optional parent prim path. The object will be created as a child of this prim.

Example

    >>> import omni.replicator.core as rep
    >>> cylinder = rep.create.cylinder(
    ...     position=rep.distribution.uniform((0,0,0), (100, 100, 100)),
    ...     scale=2,
    ...     rotation=(45, 45, 0),
    ...     semantics=[("class", "cylinder")],
    ... )

- omni.replicator.core.create.disk(position: Union[ReplicatorItem, float, Tuple[float]] = None, scale: Union[ReplicatorItem, float, Tuple[float]] = None, pivot: Union[ReplicatorItem, Tuple[float]] = None, rotation: Union[ReplicatorItem, float, Tuple[float]] = None, look_at: Union[ReplicatorItem, str, Path, usdrt.Sdf.Path, Tuple[float, float, float], List[Union[str, Path, usdrt.Sdf.Path]]] = None, look_at_up_axis: Union[ReplicatorItem, Tuple[float]] = None, semantics: List[Tuple[str, str]] = None, material: Union[ReplicatorItem, Prim] = None, visible: bool = True, count: int = 1, name: str = None, parent: Union[ReplicatorItem, str, Path, Prim] = None) ReplicatorItem

Create a disk

- Parameters

position – XYZ coordinates in world space. If a single value is provided, all axes will be set to that value.

scale – Scaling factors for XYZ axes. If a single value is provided, all axes will be set to that value.

pivot – Pivot that sets the center point of translate and rotate operation. Pivot values are normalized between [-1, 1] for each axis based on the prim’s axis aligned extents.

rotation – Euler angles in degrees in XYZ order. If a single value is provided, all axes will be set to that value.

look_at – Look-at target, specified either as a ReplicatorItem, a prim path, or world coordinates. If multiple prims are set, the target point will be the mean of their positions.

look_at_up_axis – Look-at up axis of the created prim.

semantics – List of semantic type-label pairs.

material – Material to attach to the disk.

visible – If False, the prim will be invisible. This is often useful when creating prims to use as bounds with other randomizers.

count – Number of objects to create.

name – Name of the object.

parent – Optional parent prim path. The object will be created as a child of this prim.

Example

    >>> import omni.replicator.core as rep
    >>> disk = rep.create.disk(
    ...     position=rep.distribution.uniform((0,0,0), (100, 100, 100)),
    ...     scale=2,
    ...     rotation=(45, 45, 0),
    ...     semantics=[("class", "disk")],
    ... )

- omni.replicator.core.create.plane(position: Union[ReplicatorItem, float, Tuple[float]] = None, scale: Union[ReplicatorItem, float, Tuple[float]] = None, pivot: Union[ReplicatorItem, Tuple[float]] = None, rotation: Union[ReplicatorItem, float, Tuple[float]] = None, look_at: Union[ReplicatorItem, str, Path, usdrt.Sdf.Path, Tuple[float, float, float], List[Union[str, Path, usdrt.Sdf.Path]]] = None, look_at_up_axis: Union[ReplicatorItem, Tuple[float]] = None, semantics: List[Tuple[str, str]] = None, material: Union[ReplicatorItem, Prim] = None, visible: bool = True, count: int = 1, name: str = None, parent: Union[ReplicatorItem, str, Path, Prim] = None) ReplicatorItem

Create a plane

- Parameters

position – XYZ coordinates in world space. If a single value is provided, all axes will be set to that value.

scale – Scaling factors for XYZ axes. If a single value is provided, all axes will be set to that value.

pivot – Pivot that sets the center point of translate and rotate operation. Pivot values are normalized between

[-1, 1]for each axis based on the prim’s axis aligned extents.rotation – Euler angles in degrees in XYZ order. If a single value is provided, all axes will be set to that value.

look_at – Look-at target, specified either as a

ReplicatorItem, a prim path, or world coordinates. If multiple prims are set, the target point will be the mean of their positions.look_at_up_axis – Look-at up axis of the created prim.

semantics – List of semantic type-label pairs.

material – Material to attach to the plane.

visible – If False, the prim will be invisible. This is often useful when creating prims to use as bounds with other randomizers.

count – Number of objects to create.

name – Name of the object.

parent – Optional parent prim path. The object will be created as a child of this prim.

Example

>>> import omni.replicator.core as rep
>>> plane = rep.create.plane(
...     position=rep.distribution.uniform((0,0,0), (100, 100, 100)),
...     scale=2,
...     rotation=(45, 45, 0),
...     semantics=[("class", "plane")],
... )
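
The pivot parameter described above can be read as a mapping from normalized coordinates to a point inside the prim's axis-aligned bounding box. The sketch below is one possible interpretation, assuming that -1 corresponds to the minimum extent and 1 to the maximum extent on each axis. The helper pivot_to_point and its arguments are hypothetical and not part of Replicator.

```python
from typing import Tuple

Vec3 = Tuple[float, float, float]


def pivot_to_point(pivot: Vec3, bbox_min: Vec3, bbox_max: Vec3) -> Vec3:
    """Map a normalized pivot in [-1, 1] per axis to a point in the bounding box.

    Assumption: -1 maps to the minimum extent, 0 to the center, 1 to the maximum.
    """
    for component in pivot:
        if not -1.0 <= component <= 1.0:
            raise ValueError("pivot components must lie in [-1, 1]")
    return tuple(
        lo + (component + 1.0) / 2.0 * (hi - lo)
        for component, lo, hi in zip(pivot, bbox_min, bbox_max)
    )


# A pivot of (0, -1, 0) on a 2 x 4 x 2 box centered at the origin
# lands on the center of the minimum-Y face.
print(pivot_to_point((0.0, -1.0, 0.0), (-1.0, -2.0, -1.0), (1.0, 2.0, 1.0)))
# (0.0, -2.0, 0.0)
```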

- omni.replicator.core.create.sphere(position: Union[ReplicatorItem, float, Tuple[float]] = None, scale: Union[ReplicatorItem, float, Tuple[float]] = None, pivot: Union[ReplicatorItem, Tuple[float]] = None, rotation: Union[ReplicatorItem, float, Tuple[float]] = None, look_at: Union[ReplicatorItem, str, Path, usdrt.Sdf.Path, Tuple[float, float, float], List[Union[str, Path, usdrt.Sdf.Path]]] = None, look_at_up_axis: Union[ReplicatorItem, Tuple[float]] = None, semantics: List[Tuple[str, str]] = None, material: Union[ReplicatorItem, Prim] = None, visible: bool = True, as_mesh: bool = True, count: int = 1, name: str = None, parent: Union[ReplicatorItem, str, Path, Prim] = None) ReplicatorItem

Create a sphere

- Parameters

position – XYZ coordinates in world space. If a single value is provided, all axes will be set to that value.

scale – Scaling factors for XYZ axes. If a single value is provided, all axes will be set to that value.

pivot – Pivot that sets the center point of translate and rotate operation. Pivot values are normalized between [-1, 1] for each axis based on the prim’s axis aligned extents.

rotation – Euler angles in degrees in XYZ order. If a single value is provided, all axes will be set to that value.

look_at – Look-at target, specified either as a ReplicatorItem, a prim path, or world coordinates. If multiple prims are set, the target point will be the mean of their positions.

look_at_up_axis – Look-at up axis of the created prim.

semantics – List of semantic type-label pairs.

material – Material to attach to the sphere.

visible – If False, the prim will be invisible. This is often useful when creating prims to use as bounds with other randomizers.

as_mesh – If False, create a Usd.Sphere prim. If True, create a mesh.

count – Number of objects to create.

name – Name of the object.

parent – Optional parent prim path. The object will be created as a child of this prim.

Example

>>> import omni.replicator.core as rep
>>> sphere = rep.create.sphere(
...     position=rep.distribution.uniform((0,0,0), (100, 100, 100)),
...     scale=2,
...     rotation=(45, 45, 0),
...     semantics=[("class", "sphere")],
... )

- omni.replicator.core.create.torus(position: Union[ReplicatorItem, float, Tuple[float]] = None, scale: Union[ReplicatorItem, float, Tuple[float]] = None, pivot: Union[ReplicatorItem, Tuple[float]] = None, rotation: Union[ReplicatorItem, float, Tuple[float]] = None, look_at: Union[ReplicatorItem, str, Path, usdrt.Sdf.Path, Tuple[float, float, float], List[Union[str, Path, usdrt.Sdf.Path]]] = None, look_at_up_axis: Union[ReplicatorItem, Tuple[float]] = None, semantics: List[Tuple[str, str]] = None, material: Union[ReplicatorItem, Prim] = None, visible: bool = True, count: int = 1, name: str = None, parent: Union[ReplicatorItem, str, Path, Prim] = None) ReplicatorItem

Create a torus

- Parameters

position – XYZ coordinates in world space. If a single value is provided, all axes will be set to that value.

scale – Scaling factors for XYZ axes. If a single value is provided, all axes will be set to that value.

pivot – Pivot that sets the center point of translate and rotate operation. Pivot values are normalized between [-1, 1] for each axis based on the prim’s axis aligned extents.

rotation – Euler angles in degrees in XYZ order. If a single value is provided, all axes will be set to that value.

look_at – Look-at target, specified either as a ReplicatorItem, a prim path, or world coordinates. If multiple prims are set, the target point will be the mean of their positions.

look_at_up_axis – Look-at up axis of the created prim.

semantics – List of semantic type-label pairs.

material – Material to attach to the torus.

visible – If False, the prim will be invisible. This is often useful when creating prims to use as bounds with other randomizers.

count – Number of objects to create.

name – Name of the object.

parent – Optional parent prim path. The object will be created as a child of this prim.

Example

>>> import omni.replicator.core as rep
>>> torus = rep.create.torus(
...     position=rep.distribution.uniform((0,0,0), (100, 100, 100)),
...     scale=2,
...     rotation=(45, 45, 0),
...     semantics=[("class", "torus")],
... )

- omni.replicator.core.create.xform(position: Union[ReplicatorItem, float, Tuple[float]] = None, scale: Union[ReplicatorItem, float, Tuple[float]] = None, rotation: Union[ReplicatorItem, float, Tuple[float]] = None, look_at: Union[ReplicatorItem, str, Path, usdrt.Sdf.Path, Tuple[float, float, float], List[Union[str, Path, usdrt.Sdf.Path]]] = None, look_at_up_axis: Union[ReplicatorItem, Tuple[float]] = None, semantics: List[Tuple[str, str]] = None, visible: bool = True, count: int = 1, name: str = None, parent: Union[ReplicatorItem, str, Path, Prim] = None) ReplicatorItem

Create an Xform

- Parameters

position – XYZ coordinates in world space. If a single value is provided, all axes will be set to that value.

scale – Scaling factors for XYZ axes. If a single value is provided, all axes will be set to that value.

rotation – Euler angles in degrees in XYZ order. If a single value is provided, all axes will be set to that value.

look_at – Look-at target, specified either as a ReplicatorItem, a prim path, or world coordinates. If multiple prims are set, the target point will be the mean of their positions.

look_at_up_axis – Look-at up axis of the created prim.

semantics – List of semantic type-label pairs.

visible – If False, the prim will be invisible. This is often useful when creating prims to use as bounds with other randomizers.

count – Number of objects to create.

name – Name of the object.

parent – Optional parent prim path. The xform will be created as a child of this prim.

Example

>>> import omni.replicator.core as rep
>>> xform = rep.create.xform(
...     position=rep.distribution.uniform((0,0,0), (100, 100, 100)),
...     semantics=[("class", "thing")],
... )
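
The look_at description states that when multiple prims are given, the target point is the mean of their positions. A minimal sketch of that computation in plain Python follows. The function look_at_target is a hypothetical stand-in and operates on already-resolved world positions rather than prim paths.

```python
from typing import Sequence, Tuple

Vec3 = Tuple[float, float, float]


def look_at_target(positions: Sequence[Vec3]) -> Vec3:
    """Return the component-wise mean of the given world-space positions."""
    if not positions:
        raise ValueError("at least one position is required")
    count = len(positions)
    return tuple(sum(p[axis] for p in positions) / count for axis in range(3))


targets = [(0.0, 0.0, 0.0), (10.0, 0.0, 0.0), (20.0, 30.0, 0.0)]
print(look_at_target(targets))  # (10.0, 10.0, 0.0)
```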

USD

- omni.replicator.core.create.from_dir(dir_path: str, recursive: bool = False, path_filter: Optional[str] = None, semantics: Optional[List[Tuple[str, str]]] = None) ReplicatorItem

Create a group of assets from the USD files found in dir_path

- Parameters

dir_path – The root path to search from.

recursive – If True, search through sub-folders.

path_filter – A Regular Expression (RegEx) string to filter paths with.

semantics – List of semantic type-label pairs.

Example

>>> import omni.replicator.core as rep
>>> asset_path = rep.example.ASSETS_DIR
>>> asset = rep.create.from_dir(asset_path, path_filter="rocket")
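
To illustrate how dir_path, recursive, and path_filter interact, the following sketch collects USD files from a folder using only the standard library. It is not Replicator's implementation: find_usd_files is a hypothetical helper, the extension list is borrowed from the from_usd description, and applying the filter with re.search to the full path is an assumption.

```python
import os
import re
from typing import List, Optional

# Assumption: these extensions mirror the ones listed for from_usd.
USD_EXTENSIONS = (".usd", ".usda", ".usdc")


def find_usd_files(
    dir_path: str,
    recursive: bool = False,
    path_filter: Optional[str] = None,
) -> List[str]:
    """Collect USD file paths under dir_path, optionally filtered by a regex."""
    pattern = re.compile(path_filter) if path_filter else None
    matches = []
    for root, dirs, files in os.walk(dir_path):
        if not recursive:
            dirs.clear()  # stop os.walk from descending into sub-folders
        for file_name in files:
            if not file_name.lower().endswith(USD_EXTENSIONS):
                continue
            full_path = os.path.join(root, file_name)
            # Assumption: the filter may match anywhere in the path.
            if pattern and not pattern.search(full_path):
                continue
            matches.append(full_path)
    return sorted(matches)
```

With recursive left at False only the top-level folder is scanned, which matches the default in the signature above.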

- omni.replicator.core.create.from_usd(usd: str, semantics: List[Tuple[str, str]] = None, count: int = 1) ReplicatorItem

Reference a USD into the current USD stage.

- Parameters

usd – Path to a usd file (\*.usd, \*.usdc, \*.usda)

semantics – List of semantic type-label pairs.

Example

>>> import omni.replicator.core as rep
>>> usd_path = rep.example.ASSETS[0]
>>> asset = rep.create.from_usd(usd_path, semantics=[("class", "example")])

Get

get methods are helpers to get objects from the USD stage, either by path or by semantic label.

get.prims is very broad with its regex matching on the USD stage, so individual helper methods are provided to narrow the search field to different USD types (mesh, light, etc.).

- omni.replicator.core.get.camera(path_pattern: str = None, path_pattern_exclusion: str = None, semantics: Union[List[Tuple[str, str]], Tuple[str, str]] = None, semantics_exclusion: Union[List[Tuple[str, str]], Tuple[str, str]] = None, cache_result: bool = True, name: Optional[str] = None, path_match: str = None) ReplicatorItem

Get Usd ‘camera’ types based on specified constraints.

- Parameters

path_pattern – The RegEx (Regular Expression) path pattern to match.

path_pattern_exclusion – The RegEx (Regular Expression) path pattern to ignore.

semantics – Semantic type-value pairs of semantics to include

semantics_exclusion – Semantic type-value pairs of semantics to ignore

cache_result – Run get prims a single time, then return the cached result

name (optional) – A name for the graph node.

path_match – Python string matching. Faster than regex matching.
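
The semantics and semantics_exclusion parameters shared by the get helpers can be pictured as an include/exclude filter over type-value pairs. The sketch below models that idea on a plain dictionary. The function filter_by_semantics and the rule it applies (keep a prim with at least one included pair and no excluded pair) are assumptions for illustration, not Replicator's documented behavior.

```python
from typing import Dict, List, Optional, Tuple

Semantic = Tuple[str, str]


def filter_by_semantics(
    prims: Dict[str, List[Semantic]],
    semantics: Optional[List[Semantic]] = None,
    semantics_exclusion: Optional[List[Semantic]] = None,
) -> List[str]:
    """Return prim paths that match the include list and avoid the exclusion list.

    Assumption: a prim is kept if it carries at least one included pair
    (when an include list is given) and none of the excluded pairs.
    """
    include = set(semantics or [])
    exclude = set(semantics_exclusion or [])
    selected = []
    for path, labels in prims.items():
        label_set = set(labels)
        if include and not (label_set & include):
            continue
        if label_set & exclude:
            continue
        selected.append(path)
    return selected


# Hypothetical stage contents: prim path -> semantic type-value pairs.
stage = {
    "/World/Cam_A": [("class", "camera"), ("role", "main")],
    "/World/Cam_B": [("class", "camera"), ("role", "debug")],
    "/World/Cube": [("class", "cube")],
}
print(filter_by_semantics(stage, [("class", "camera")], [("role", "debug")]))
# ['/World/Cam_A']
```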

- omni.replicator.core.get.curve(path_pattern: str = None, path_pattern_exclusion: str = None, semantics: Union[List[Tuple[str, str]], Tuple[str, str]] = None, semantics_exclusion: Union[List[Tuple[str, str]], Tuple[str, str]] = None, cache_result: bool = True, name: Optional[str] = None, path_match: str = None) ReplicatorItem

Get Usd ‘curve’ types based on specified constraints.

- Parameters

path_pattern – The RegEx (Regular Expression) path pattern to match.

path_pattern_exclusion – The RegEx (Regular Expression) path pattern to ignore.

semantics – Semantic type-value pairs of semantics to include

semantics_exclusion – Semantic type-value pairs of semantics to ignore

cache_result – Run get prims a single time, then return the cached result

name (optional) – A name for the graph node.

path_match – Python string matching. Faster than regex matching.

- omni.replicator.core.get.geomsubset(path_pattern: str = None, path_pattern_exclusion: str = None, semantics: Union[List[Tuple[str, str]], Tuple[str, str]] = None, semantics_exclusion: Union[List[Tuple[str, str]], Tuple[str, str]] = None, cache_result: bool = True, name: Optional[str] = None, path_match: str = None) ReplicatorItem

Get Usd ‘geomsubset’ types based on specified constraints.

- Parameters

path_pattern – The RegEx (Regular Expression) path pattern to match.

path_pattern_exclusion – The RegEx (Regular Expression) path pattern to ignore.

semantics – Semantic type-value pairs of semantics to include

semantics_exclusion – Semantic type-value pairs of semantics to ignore

cache_result – Run get prims a single time, then return the cached result

name (optional) – A name for the graph node.

path_match – Python string matching. Faster than regex matching.

- omni.replicator.core.get.graph(path_pattern: str = None, path_pattern_exclusion: str = None, semantics: Union[List[Tuple[str, str]], Tuple[str, str]] = None, semantics_exclusion: Union[List[Tuple[str, str]], Tuple[str, str]] = None, cache_result: bool = True, name: Optional[str] = None, path_match: str = None) ReplicatorItem

Get all ‘graph’ types based on specified constraints.

- Parameters

path_pattern – The RegEx (Regular Expression) path pattern to match.

path_pattern_exclusion – The RegEx (Regular Expression) path pattern to ignore.

semantics – Semantic type-value pairs of semantics to include

semantics_exclusion – Semantic type-value pairs of semantics to ignore

cache_result – Run get prims a single time, then return the cached result

name (optional) – A name for the graph node.

path_match – Python string matching. Faster than regex matching.

- omni.replicator.core.get.light(path_pattern: str = None, path_pattern_exclusion: str = None, semantics: Union[List[Tuple[str, str]], Tuple[str, str]] = None, semantics_exclusion: Union[List[Tuple[str, str]], Tuple[str, str]] = None, cache_result: bool = True, name: Optional[str] = None, path_match: str = None) ReplicatorItem

Get Usd ‘light’ types based on specified constraints.

Matches types RectLight, SphereLight, CylinderLight, DiskLight, DistantLight

- Parameters

path_pattern – The RegEx (Regular Expression) path pattern to match.

path_pattern_exclusion – The RegEx (Regular Expression) path pattern to ignore.

semantics – Semantic type-value pairs of semantics to include

semantics_exclusion – Semantic type-value pairs of semantics to ignore

cache_result – Run get prims a single time, then return the cached result

name (optional) – A name for the graph node.

path_match – Python string matching. Faster than regex matching.

- omni.replicator.core.get.listener(path_pattern: str = None, path_pattern_exclusion: str = None, semantics: Union[List[Tuple[str, str]], Tuple[str, str]] = None, semantics_exclusion: Union[List[Tuple[str, str]], Tuple[str, str]] = None, cache_result: bool = True, name: Optional[str] = None, path_match: str = None) ReplicatorItem

Get Usd listener types based on specified constraints.

- Parameters

path_pattern – The RegEx (Regular Expression) path pattern to match.

path_pattern_exclusion – The RegEx (Regular Expression) path pattern to ignore.

semantics – Semantic type-value pairs of semantics to include

semantics_exclusion – Semantic type-value pairs of semantics to ignore

cache_result – Run get prims a single time, then return the cached result

name (optional) – A name for the graph node.

path_match – Python string matching. Faster than regex matching.

- omni.replicator.core.get.material(path_pattern: str = None, path_pattern_exclusion: str = None, semantics: Union[List[Tuple[str, str]], Tuple[str, str]] = None, semantics_exclusion: Union[List[Tuple[str, str]], Tuple[str, str]] = None, cache_result: bool = True, name: Optional[str] = None, path_match: str = None) ReplicatorItem

Get Usd material types based on specified constraints.

- Parameters

path_pattern – The RegEx (Regular Expression) path pattern to match.

path_pattern_exclusion – The RegEx (Regular Expression) path pattern to ignore.

semantics – Semantic type-value pairs of semantics to include

semantics_exclusion – Semantic type-value pairs of semantics to ignore

cache_result – Run get prims a single time, then return the cached result

name (optional) – A name for the graph node.

path_match – Python string matching. Faster than regex matching.

- omni.replicator.core.get.mesh(path_pattern: str = None, path_pattern_exclusion: str = None, semantics: Union[List[Tuple[str, str]], Tuple[str, str]] = None, semantics_exclusion: Union[List[Tuple[str, str]], Tuple[str, str]] = None, cache_result: bool = True, name: Optional[str] = None, path_match: str = None) ReplicatorItem

Get Usd mesh types based on specified constraints.

- Parameters

path_pattern – The RegEx (Regular Expression) path pattern to match.

path_pattern_exclusion – The RegEx (Regular Expression) path pattern to ignore.

semantics – Semantic type-value pairs of semantics to include

semantics_exclusion – Semantic type-value pairs of semantics to ignore

cache_result – Run get prims a single time, then return the cached result

name (optional) – A name for the graph node.

path_match – Python string matching. Faster than regex matching.

- omni.replicator.core.get.physics(path_pattern: str = None, path_pattern_exclusion: str = None, semantics: Union[List[Tuple[str, str]], Tuple[str, str]] = None, semantics_exclusion: Union[List[Tuple[str, str]], Tuple[str, str]] = None, cache_result: bool = True, name: Optional[str] = None, path_match: str = None) ReplicatorItem

Get physics/physicsscene types based on specified constraints.

- Parameters

path_pattern – The RegEx (Regular Expression) path pattern to match.

path_pattern_exclusion – The RegEx (Regular Expression) path pattern to ignore.

semantics – Semantic type-value pairs of semantics to include

semantics_exclusion – Semantic type-value pairs of semantics to ignore

cache_result – Run get prims a single time, then return the cached result

name (optional) – A name for the graph node.

path_match – Python string matching. Faster than regex matching.

- omni.replicator.core.get.prim_at_path(path: Union[str, List[str], ReplicatorItem], name: Optional[str] = None) ReplicatorItem

Get the prim at the exact path

- Parameters

path – USD path to the desired prim.

name (optional) – A name for the graph node.

- omni.replicator.core.get.prims(path_pattern: str = None, path_match: str = None, path_pattern_exclusion: str = None, prim_types: Union[str, List[str]] = None, prim_types_exclusion: Union[str, List[str]] = None, semantics: Union[List[Tuple[str, str]], Tuple[str, str]] = None, semantics_exclusion: Union[List[Tuple[str, str]], Tuple[str, str]] = None, cache_result: bool = True, ignore_case: bool = True, name: Optional[str] = None) ReplicatorItem

Get prims based on specified constraints.

Search the stage for stage paths with matches to the specified constraints.

- Parameters

path_pattern – The RegEx (Regular Expression) path pattern to match.

path_match – Python string matching. Faster than regex matching.

path_pattern_exclusion – The RegEx (Regular Expression) path pattern to ignore.

prim_types – List of prim types to include

prim_types_exclusion – List of prim types to ignore

semantics – Semantic type-value pairs of semantics to include

semantics_exclusion – Semantic type-value pairs of semantics to ignore

cache_result – Run get prims a single time, then return the cached result

ignore_case – Case-insensitive regex matching

name (optional) – A name for the graph node.
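
The path parameters of get.prims combine a regex include pattern, a plain string match, and a regex exclusion pattern. The sketch below shows how such filters could be applied to a list of prim paths with the standard re module. match_paths is a hypothetical helper. Treating path_match as a case-sensitive substring test and applying the patterns with re.search are assumptions.

```python
import re
from typing import List, Optional


def match_paths(
    paths: List[str],
    path_pattern: Optional[str] = None,
    path_match: Optional[str] = None,
    path_pattern_exclusion: Optional[str] = None,
    ignore_case: bool = True,
) -> List[str]:
    """Filter prim paths by regex include, substring match, and regex exclusion."""
    flags = re.IGNORECASE if ignore_case else 0
    include = re.compile(path_pattern, flags) if path_pattern else None
    exclude = re.compile(path_pattern_exclusion, flags) if path_pattern_exclusion else None
    result = []
    for path in paths:
        if include and not include.search(path):
            continue
        # Assumption: path_match is a plain, case-sensitive substring test.
        if path_match and path_match not in path:
            continue
        if exclude and exclude.search(path):
            continue
        result.append(path)
    return result


paths = ["/World/Cube_01", "/World/cube_02", "/World/Sphere_01", "/World/Looks/CubeMat"]
print(match_paths(paths, path_pattern=r"cube_\d+$"))
# ['/World/Cube_01', '/World/cube_02']
print(match_paths(paths, path_match="Cube", path_pattern_exclusion="Looks"))
# ['/World/Cube_01']
```

A substring test avoids compiling and running a regular expression for every path, which is consistent with the note that path_match is faster than regex matching.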

- omni.replicator.core.get.renderproduct(path_pattern: str = None, path_pattern_exclusion: str = None, semantics: Union[List[Tuple[str, str]], Tuple[str, str]] = None, semantics_exclusion: Union[List[Tuple[str, str]], Tuple[str, str]] = None, cache_result: bool = True, name: Optional[str] = None, path_match: str = None) ReplicatorItem

Get Usd renderproduct types based on specified constraints.

- Parameters

path_pattern – The RegEx (Regular Expression) path pattern to match.

path_pattern_exclusion – The RegEx (Regular Expression) path pattern to ignore.

semantics – Semantic type-value pairs of semantics to include

semantics_exclusion – Semantic type-value pairs of semantics to ignore

cache_result – Run get prims a single time, then return the cached result

name (optional) – A name for the graph node.

path_match – Python string matching. Faster than regex matching.

- omni.replicator.core.get.rendervar(path_pattern: str = None, path_pattern_exclusion: str = None, semantics: Union[List[Tuple[str, str]], Tuple[str, str]] = None, semantics_exclusion: Union[List[Tuple[str, str]], Tuple[str, str]] = None, cache_result: bool = True, name: Optional[str] = None, path_match: str = None) ReplicatorItem

Get Usd rendervar types based on specified constraints.

- Parameters

path_pattern – The RegEx (Regular Expression) path pattern to match.

path_pattern_exclusion – The RegEx (Regular Expression) path pattern to ignore.

semantics – Semantic type-value pairs of semantics to include

semantics_exclusion – Semantic type-value pairs of semantics to ignore

cache_result – Run get prims a single time, then return the cached result

name (optional) – A name for the graph node.

path_match – Python string matching. Faster than regex matching.

- omni.replicator.core.get.scope(path_pattern: str = None, path_pattern_exclusion: str = None, semantics: Union[List[Tuple[str, str]], Tuple[str, str]] = None, semantics_exclusion: Union[List[Tuple[str, str]], Tuple[str, str]] = None, cache_result: bool = True, name: Optional[str] = None, path_match: str = None) ReplicatorItem

Get Usd ‘scope’ types based on specified constraints.

- Parameters

path_pattern – The RegEx (Regular Expression) path pattern to match.

path_pattern_exclusion – The RegEx (Regular Expression) path pattern to ignore.

semantics – Semantic type-value pairs of semantics to include

semantics_exclusion – Semantic type-value pairs of semantics to ignore

cache_result – Run get prims a single time, then return the cached result

name (optional) – A name for the graph node.

path_match – Python string matching. Faster than regex matching.

- omni.replicator.core.get.shader(path_pattern: str = None, path_pattern_exclusion: str = None, semantics: Union[List[Tuple[str, str]], Tuple[str, str]] = None, semantics_exclusion: Union[List[Tuple[str, str]], Tuple[str, str]] = None, cache_result: bool = True, name: Optional[str] = None, path_match: str = None) ReplicatorItem

Get Usd ‘shader’ types based on specified constraints.

- Parameters

path_pattern – The RegEx (Regular Expression) path pattern to match.

path_pattern_exclusion – The RegEx (Regular Expression) path pattern to ignore.

semantics – Semantic type-value pairs of semantics to include

semantics_exclusion – Semantic type-value pairs of semantics to ignore

cache_result – Run get prims a single time, then return the cached result

name (optional) – A name for the graph node.

path_match – Python string matching. Faster than regex matching.

- omni.replicator.core.get.shape(path_pattern: str = None, path_pattern_exclusion: str = None, semantics: Union[List[Tuple[str, str]], Tuple[str, str]] = None, semantics_exclusion: Union[List[Tuple[str, str]], Tuple[str, str]] = None, cache_result: bool = True, name: Optional[str] = None, path_match: str = None) ReplicatorItem

Get Usd ‘shape’ types based on specified constraints.

Includes Capsule, Cone, Cube, Cylinder, Plane, Sphere

- Parameters

path_pattern – The RegEx (Regular Expression) path pattern to match.

path_pattern_exclusion – The RegEx (Regular Expression) path pattern to ignore.

semantics – Semantic type-value pairs of semantics to include

semantics_exclusion – Semantic type-value pairs of semantics to ignore

cache_result – Run get prims a single time, then return the cached result

name (optional) – A name for the graph node.

path_match – Python string matching. Faster than regex matching.

- omni.replicator.core.get.skelanimation(path_pattern: str = None, path_pattern_exclusion: str = None, semantics: Union[List[Tuple[str, str]], Tuple[str, str]] = None, semantics_exclusion: Union[List[Tuple[str, str]], Tuple[str, str]] = None, cache_result: bool = True, name: Optional[str] = None, path_match: str = None) ReplicatorItem

Get Usd ‘skelanimation’ types based on specified constraints.

- Parameters

path_pattern – The RegEx (Regular Expression) path pattern to match.

path_pattern_exclusion – The RegEx (Regular Expression) path pattern to ignore.

semantics – Semantic type-value pairs of semantics to include

semantics_exclusion – Semantic type-value pairs of semantics to ignore

cache_result – Run get prims a single time, then return the cached result

name (optional) – A name for the graph node.

path_match – Python string matching. Faster than regex matching.

- omni.replicator.core.get.skeleton(path_pattern: str = None, path_pattern_exclusion: str = None, semantics: Union[List[Tuple[str, str]], Tuple[str, str]] = None, semantics_exclusion: Union[List[Tuple[str, str]], Tuple[str, str]] = None, cache_result: bool = True, name: Optional[str] = None, path_match: str = None) ReplicatorItem

Get Usd ‘skeleton’ types based on specified constraints.

- Parameters

path_pattern – The RegEx (Regular Expression) path pattern to match.

path_pattern_exclusion – The RegEx (Regular Expression) path pattern to ignore.

semantics – Semantic type-value pairs of semantics to include

semantics_exclusion – Semantic type-value pairs of semantics to ignore

cache_result – Run get prims a single time, then return the cached result

name (optional) – A name for the graph node.

path_match – Python string matching. Faster than regex matching.

- omni.replicator.core.get.sound(path_pattern: str = None, path_pattern_exclusion: str = None, semantics: Union[List[Tuple[str, str]], Tuple[str, str]] = None, semantics_exclusion: Union[List[Tuple[str, str]], Tuple[str, str]] = None, cache_result: bool = True, name: Optional[str] = None, path_match: str = None) ReplicatorItem

Get Usd ‘sound’ types based on specified constraints.

- Parameters

path_pattern – The RegEx (Regular Expression) path pattern to match.

path_pattern_exclusion – The RegEx (Regular Expression) path pattern to ignore.

semantics – Semantic type-value pairs of semantics to include

semantics_exclusion – Semantic type-value pairs of semantics to ignore

cache_result – Run get prims a single time, then return the cached result

name (optional) – A name for the graph node.

path_match – Python string matching. Faster than regex matching.

- omni.replicator.core.get.xform(path_pattern: str = None, path_pattern_exclusion: str = None, semantics: Union[List[Tuple[str, str]], Tuple[str, str]] = None, semantics_exclusion: Union[List[Tuple[str, str]], Tuple[str, str]] = None, cache_result: bool = True, name: Optional[str] = None, path_match: str = None) ReplicatorItem

Get Usd ‘xform’ types based on specified constraints.

- Parameters

path_pattern – The RegEx (Regular Expression) path pattern to match.

path_pattern_exclusion – The RegEx (Regular Expression) path pattern to ignore.

semantics – Semantic type-value pairs of semantics to include

semantics_exclusion – Semantic type-value pairs of semantics to ignore

cache_result – Run get prims a single time, then return the cached result

name (optional) – A name for the graph node.

path_match – Python string matching. Faster than regex matching.

- omni.replicator.core.get.register(fn: Callable[[...], Union[ReplicatorItem, Node]], override: bool = True, fn_name: Optional[str] = None) None

Register a new function under omni.replicator.core.get. Extend the default capabilities of omni.replicator.core.get by registering new functionality. New functions must return a ReplicatorItem or an OmniGraph node.

- Parameters

fn – A function that returns a ReplicatorItem or an OmniGraph node.

override – If True, will override existing functions of the same name. If False, an error is raised.

fn_name – Optional, specify the registration name. If not specified, the function name is used. fn_name must only contain alphanumeric letters (a-z), numbers (0-9), or underscores (_), and cannot start with a number or contain any spaces.

Distribution

distribution methods are helpers that set a range of values to simulate complex behavior.

- omni.replicator.core.distribution.choice(choices: List[str], weights: List[float] = None, num_samples: Union[ReplicatorItem, int] = 1, seed: Optional[int] = -1, with_replacements: bool = True, name: Optional[str] = None) ReplicatorItem

Provides sampling from a list of values

- Parameters

choices – Values in the distribution to choose from.

weights – Matching list of weights for each choice.

num_samples – The number of times to sample.

seed (optional) – A seed to use for the sampling.

with_replacements – If True, allow re-sampling the same element. If False, each element can only be sampled once. Note that in this case, the number of elements to choose from must be larger than the number of samples. Default is True.

name (optional) – A name for the given distribution. Named distributions will have their values available to the Writer.
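
The difference between sampling with and without replacement can be sketched with the standard random module. The helper sample_choices below is hypothetical and only models the parameters described above. It does not create a Replicator distribution node, and it uses None rather than -1 as the unseeded default.

```python
import random
from typing import List, Optional, Sequence, TypeVar

T = TypeVar("T")


def sample_choices(
    choices: Sequence[T],
    weights: Optional[Sequence[float]] = None,
    num_samples: int = 1,
    with_replacements: bool = True,
    seed: Optional[int] = None,
) -> List[T]:
    """Draw num_samples items from choices, optionally weighted."""
    rng = random.Random(seed)
    if with_replacements:
        # The same element may be returned more than once.
        return rng.choices(choices, weights=weights, k=num_samples)
    if num_samples > len(choices):
        raise ValueError("cannot draw more unique samples than there are choices")
    pool = list(choices)
    pool_weights = list(weights) if weights is not None else [1.0] * len(pool)
    picked = []
    for _ in range(num_samples):
        # Pick one index by weight, then remove it so it cannot be drawn again.
        index = rng.choices(range(len(pool)), weights=pool_weights, k=1)[0]
        picked.append(pool.pop(index))
        pool_weights.pop(index)
    return picked


colors = ["red", "green", "blue"]
unique = sample_choices(colors, num_samples=3, with_replacements=False, seed=0)
print(sorted(unique))  # ['blue', 'green', 'red']
```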

- omni.replicator.core.distribution.combine(distributions: List[Union[ReplicatorItem, Tuple[ReplicatorItem]]], name: Optional[str] = None) ReplicatorItem

Combine input from different distributions.

- Parameters

distributions – List of Replicator distribution nodes or numbers.

name (optional) – A name for the given distribution. Named distributions will have their values available to the Writer.

- omni.replicator.core.distribution.log_uniform(lower: Tuple, upper: Tuple, num_samples: int = 1, seed: Optional[int] = None, name: Optional[str] = None) ReplicatorItem

Provides sampling with a log uniform distribution

- Parameters

lower – Lower end of the distribution.

upper – Upper end of the distribution.

num_samples – The number of times to sample.

seed (optional) – A seed to use for the sampling.

name (optional) – A name for the given distribution. Named distributions will have their values available to the Writer.
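
A log-uniform distribution is one whose logarithm is uniformly distributed, so samples spread evenly across orders of magnitude. The sketch below draws such samples per component with the standard library. sample_log_uniform is a hypothetical helper, and the requirement that bounds be strictly positive is a property of this sketch rather than a documented constraint of the Replicator function.

```python
import math
import random
from typing import List, Optional, Tuple


def sample_log_uniform(
    lower: Tuple[float, ...],
    upper: Tuple[float, ...],
    num_samples: int = 1,
    seed: Optional[int] = None,
) -> List[Tuple[float, ...]]:
    """Draw samples whose logarithm is uniform between log(lower) and log(upper)."""
    if any(lo <= 0 or hi < lo for lo, hi in zip(lower, upper)):
        raise ValueError("bounds must satisfy 0 < lower <= upper")
    rng = random.Random(seed)
    return [
        tuple(
            math.exp(rng.uniform(math.log(lo), math.log(hi)))
            for lo, hi in zip(lower, upper)
        )
        for _ in range(num_samples)
    ]


# Each component is sampled independently within its own bounds.
samples = sample_log_uniform((1.0, 0.1), (1000.0, 10.0), num_samples=50, seed=1)
print(len(samples))  # 50
```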

- omni.replicator.core.distribution.normal(mean: Tuple, std: Tuple, num_samples: int = 1, seed: Optional[int] = None, name: Optional[str] = None) ReplicatorItem

Provides sampling with a normal distribution

- Parameters

mean – Average value for the distribution.

std – Standard deviation value for the distribution.

num_samples – The number of times to sample.

seed (optional) – A seed to use for the sampling.

name (optional) – A name for the given distribution. Named distributions will have their values available to the Writer.

- omni.replicator.core.distribution.sequence(items: Union[List, ReplicatorItem], ordered: Optional[bool] = True, seed: Optional[int] = -1, name: Optional[str] = None) ReplicatorItem

Provides sampling sequentially

- Parameters

items – Ordered list of items to sample sequentially.

ordered – Whether to return item in order.

seed (optional) – A seed to use for the sampling.

name (optional) – A name for the given distribution. Named distributions will have their values available to the Writer.

Example

>>> import omni.replicator.core as rep
>>> cube = rep.create.cube(count=1)
>>> with cube:
...     rep.modify.pose(position=rep.distribution.sequence([(0.0, 0.0, 200.0), (0.0, 200.0, 0.0), (200.0, 0.0, 0.0)]))
omni.replicator.core.distribution.sequence
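
For intuition, ordered sequential sampling can be modeled as an iterator that hands out one item per frame. The sketch below covers only the ordered=True case and assumes the sequence wraps around once the last item is reached, which the description above does not state. ordered_sequence is a hypothetical name.

```python
from itertools import cycle, islice
from typing import Iterator, Sequence, Tuple

Vec3 = Tuple[float, float, float]


def ordered_sequence(items: Sequence[Vec3]) -> Iterator[Vec3]:
    """Yield items in order, wrapping around at the end (assumed behavior)."""
    return cycle(items)


positions = [(0.0, 0.0, 200.0), (0.0, 200.0, 0.0), (200.0, 0.0, 0.0)]
# Take the values that five consecutive frames would receive.
frames = list(islice(ordered_sequence(positions), 5))
print(frames[3])  # (0.0, 0.0, 200.0)
```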

- omni.replicator.core.distribution.uniform(lower: Tuple, upper: Tuple, num_samples: int = 1, seed: Optional[int] = None, name: Optional[str] = None) ReplicatorItem

Provides sampling with a uniform distribution

- Parameters

lower – Lower end of the distribution.

upper – Upper end of the distribution.

num_samples – The number of times to sample.

seed (optional) – A seed to use for the sampling.

name (optional) – A name for the given distribution. Named distributions will have their values available to the Writer.
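
The roles of lower, upper, num_samples, and seed can be illustrated with a plain Python equivalent that samples each component independently. sample_uniform is a hypothetical helper, not the Replicator node, and it only demonstrates that a fixed seed reproduces the same samples.

```python
import random
from typing import List, Optional, Tuple


def sample_uniform(
    lower: Tuple[float, ...],
    upper: Tuple[float, ...],
    num_samples: int = 1,
    seed: Optional[int] = None,
) -> List[Tuple[float, ...]]:
    """Draw num_samples tuples, each component uniform between its bounds."""
    rng = random.Random(seed)
    return [
        tuple(rng.uniform(lo, hi) for lo, hi in zip(lower, upper))
        for _ in range(num_samples)
    ]


first = sample_uniform((-100, -100, -100), (100, 100, 100), num_samples=4, seed=42)
second = sample_uniform((-100, -100, -100), (100, 100, 100), num_samples=4, seed=42)
print(first == second)  # True
```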

- omni.replicator.core.distribution.register(fn: Callable[[...], Union[ReplicatorItem, Node]], override: bool = True, fn_name: Optional[str] = None) None

Register a new function under omni.replicator.core.distribution. Extend the default capabilities of omni.replicator.core.distribution by registering new functionality. New functions must return a ReplicatorItem or an OmniGraph node.

- Parameters

fn – A function that returns a ReplicatorItem or an OmniGraph node.

override – If True, will override existing functions of the same name. If False, an error is raised.

fn_name – Optional, specify the registration name. If not specified, the function name is used. fn_name must only contain alphanumeric letters (a-z), numbers (0-9), or underscores (_), and cannot start with a number or contain any spaces.

Modify

modify methods are helpers to change objects on the USD stage.

- omni.replicator.core.modify.animation(values: Union[ReplicatorItem, List[str], List[Path], List[usdrt.Sdf.Path]], reset_timeline: bool = False, input_prims: Union[ReplicatorItem, List[str]] = None) ReplicatorItem

Modify the bound animation on a skeleton. This does not do any retargeting.

- Parameters

values – The animation to set to the skeleton. If a list of values is provided, one will be chosen at random.

reset_timeline – Reset the timeline after changing the animation.

input_prims – The skeleton to modify. If using with syntax, this argument can be omitted.

Example

>>> import omni.replicator.core as rep
>>> from pxr import Sdf
>>> person = rep.get.skeleton('/World/Worker/Worker')
>>> new_anim = Sdf.Path('/World/other_anim')
>>> with person:
...     rep.modify.animation([new_anim])
omni.replicator.core.modify.animation

- omni.replicator.core.modify.attribute(name: str, value: Union[Any, ReplicatorItem], attribute_type: str = None, input_prims: Union[ReplicatorItem, List[str]] = None) ReplicatorItem

Modify the attribute of the prims specified in input_prims.

- Parameters

name – The name of the attribute to modify.

value – The value to set the attribute to.

attribute_type – The data type of the attribute. This parameter is required if the attribute specified does not already exist and must be created.

input_prims – The prims to be modified. If using with syntax, this argument can be omitted.

Example

>>> import omni.replicator.core as rep >>> sphere = rep.create.sphere(as_mesh=False) >>> with sphere: ... rep.modify.attribute("radius", rep.distribution.uniform(1, 5)) omni.replicator.core.modify.attribute

- omni.replicator.core.modify.material(value: Union[ReplicatorItem, List[str]] = None, input_prims: Union[ReplicatorItem, List[str]] = None, name: Optional[str] = None) ReplicatorItem

Modify the material bound to the prims specified in input_prims.

- Parameters

value – The material to bind to the prims. If multiple materials are provided, a random one will be chosen.

input_prims – The prims to be modified. If using with syntax, this argument can be omitted.

name (optional) – A name for the graph node.

Example

>>> import omni.replicator.core as rep
>>> mat = rep.create.material_omnipbr()
>>> sphere = rep.create.sphere(as_mesh=False)
>>> with sphere:
...     rep.modify.material(["/Replicator/Looks/OmniPBR"])
omni.replicator.core.modify.material

- omni.replicator.core.modify.pose(position: Union[ReplicatorItem, float, Tuple[float]] = None, position_x: Union[ReplicatorItem, float] = None, position_y: Union[ReplicatorItem, float] = None, position_z: Union[ReplicatorItem, float] = None, rotation: Union[ReplicatorItem, float, Tuple[float]] = None, rotation_x: Union[ReplicatorItem, float] = None, rotation_y: Union[ReplicatorItem, float] = None, rotation_z: Union[ReplicatorItem, float] = None, rotation_order: str = 'XYZ', scale: Union[ReplicatorItem, float, Tuple[float]] = None, size: Union[ReplicatorItem, float, Tuple[float]] = None, pivot: Union[ReplicatorItem, Tuple[float]] = None, look_at: Union[ReplicatorItem, str, Path, usdrt.Sdf.Path, Tuple[float, float, float], List[Union[str, Path, usdrt.Sdf.Path]]] = None, look_at_up_axis: Union[str, Tuple[float, float, float]] = None, input_prims: Union[ReplicatorItem, List[str]] = None, name: Optional[str] = None) ReplicatorItem

Modify the position, rotation, scale, and/or look-at target of the prims specified in input_prims.

- Parameters

position – XYZ coordinates in world space.

position_x – Coordinate value along the X axis.

position_y – Coordinate value along the Y axis.

position_z – Coordinate value along the Z axis.

rotation – Rotation in degrees for the axes specified in rotation_order.

rotation_x – Rotation in degrees for the X axis.

rotation_y – Rotation in degrees for the Y axis.

rotation_z – Rotation in degrees for the Z axis.

rotation_order – Order of rotation. Select from [XYZ, XZY, YXZ, YZX, ZXY, ZYX].

scale – Scale factor for each of XYZ axes.

size – Desired size of the input prims. Each input prim is scaled to match the specified size extents in each of the XYZ axes.

pivot – Pivot that sets the center point of translate and rotate operation.

look_at – The look-at target to orient towards, specified as either a ReplicatorItem, a prim path, or world coordinates. If multiple prims are set, the target point will be the mean of their positions.

look_at_up_axis – The up axis used in the look_at function.

input_prims – The prims to be modified. If using with syntax, this argument can be omitted.

name (optional) – A name for the graph node.

Note

position and any of (position_x, position_y, and position_z) cannot both be specified.

rotation and look_at cannot both be specified.

size and scale cannot both be specified.

size is converted to scale based on the prim’s current axis-aligned bounding box size. If a scale is already applied, it might not be able to reflect the true size of the prim.

Example

>>> import omni.replicator.core as rep
>>> with rep.create.cube():
...     rep.modify.pose(position=rep.distribution.uniform((0, 0, 0), (100, 100, 100)),
...                     scale=rep.distribution.uniform(0.1, 10),
...                     look_at=(0, 0, 0))
omni.replicator.core.modify.pose
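The Note above states that size is converted to a scale from the prim's axis-aligned bounding box. The arithmetic can be pictured with the sketch below. It illustrates the idea only and is not Replicator's implementation. scale_for_size is a hypothetical helper, and it assumes the bounding-box extents were measured with no scale applied to the prim.

```python
from typing import Tuple

Vec3 = Tuple[float, float, float]


def scale_for_size(size: Vec3, bbox_extents: Vec3) -> Vec3:
    """Per-axis scale factor that makes a box of bbox_extents match size.

    Hypothetical helper: assumes bbox_extents is the unscaled
    axis-aligned bounding box size of the prim.
    """
    if any(extent <= 0 for extent in bbox_extents):
        raise ValueError("bounding-box extents must be positive")
    return tuple(s / e for s, e in zip(size, bbox_extents))


# A prim whose bounding box measures 2 x 4 x 1 units, resized to 10 x 10 x 10.
print(scale_for_size((10.0, 10.0, 10.0), (2.0, 4.0, 1.0)))  # (5.0, 2.5, 10.0)
```

If the prim already carries a scale, the measured extents include it, which is why the resulting factor may not reflect the prim's true size.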

- omni.replicator.core.modify.pose_camera_relative(camera: Union[ReplicatorItem, List[str]], render_product: ReplicatorItem, distance: float, horizontal_location: float = 0, vertical_location: float = 0, input_prims=None) ReplicatorItem

Modify the positions of the prim relative to a camera.

- Parameters

camera – Camera that the prim is relative to.

horizontal_location – Horizontal location in the camera space, which is in the range [-1, 1].

vertical_location – Vertical location in the camera space, which is in the range [-1, 1].

distance – Distance from the prim to the camera.

input_prims – The prims to be modified. If using with syntax, this argument can be omitted.

Example

>>> import omni.replicator.core as rep
>>> camera = rep.create.camera()
>>> render_product = rep.create.render_product(camera, (1024, 512))
>>> with rep.create.cube():
...     rep.modify.pose_camera_relative(camera, render_product, distance=500, horizontal_location=0, vertical_location=0)
omni.replicator.core.modify.pose_camera_relative

- omni.replicator.core.modify.pose_orbit(barycentre: Union[ReplicatorItem, Tuple[float, float, float], str], distance: Union[ReplicatorItem, float], azimuth: Union[ReplicatorItem, float], elevation: Union[ReplicatorItem, float], look_at_barycentre: bool = True, input_prims: Optional[Union[ReplicatorItem, List[str]]] = None) Node

Position the input_prims in an orbit around a point.

- Parameters

barycentre – The point around which to position the input prims. The barycentre can be specified as either coordinates or as prim paths. If more than one prim path is provided, the barycentre will be set to the mean of the prim centres.

distance – Distance from the barycentre.

azimuth – Horizontal angle (in degrees).

elevation – Vertical angle (in degrees).

look_at_barycentre – If True, orient the input_prims towards the barycentre. Default True.

input_prims – The prims to be modified. If using with syntax, this argument can be omitted.

Example

>>> import omni.replicator.core as rep
>>> cube = rep.create.cube()
>>> camera = rep.create.camera()
>>> with camera:
...     rep.modify.pose_orbit(
...         barycentre=cube,
...         distance=rep.distribution.uniform(400, 500),
...         azimuth=45,
...         elevation=rep.distribution.uniform(-180, 180),
...     )
omni.replicator.core.modify._pose_orbit
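To see how distance, azimuth, and elevation could map to a position, the sketch below converts them to Cartesian coordinates around a barycentre. It is an illustration under stated assumptions, not Replicator's implementation. It assumes a Y-up stage, azimuth measured around the Y axis starting from +Z, and elevation measured up from the horizontal plane. orbit_position and mean_point are hypothetical helpers.

```python
import math
from typing import Sequence, Tuple

Vec3 = Tuple[float, float, float]


def mean_point(points: Sequence[Vec3]) -> Vec3:
    """Mean of several points, as when more than one prim defines the barycentre."""
    n = len(points)
    return tuple(sum(p[i] for p in points) / n for i in range(3))


def orbit_position(barycentre: Vec3, distance: float,
                   azimuth: float, elevation: float) -> Vec3:
    """Point at `distance` from `barycentre` for angles given in degrees.

    Assumed convention (not confirmed by the reference): Y is up,
    azimuth rotates around Y starting at +Z, elevation rises from the XZ plane.
    """
    az = math.radians(azimuth)
    el = math.radians(elevation)
    horizontal = distance * math.cos(el)  # radius projected onto the XZ plane
    x = barycentre[0] + horizontal * math.sin(az)
    y = barycentre[1] + distance * math.sin(el)
    z = barycentre[2] + horizontal * math.cos(az)
    return (x, y, z)


centre = mean_point([(0.0, 0.0, 0.0), (2.0, 4.0, 6.0)])
position = orbit_position(centre, distance=450.0, azimuth=45.0, elevation=30.0)
# Whatever the angles, the point stays `distance` away from the barycentre.
print(round(math.dist(position, centre), 6))  # 450.0
```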

- omni.replicator.core.modify.projection_material(position: Union[ReplicatorItem, List[str]] = None, rotation: Union[ReplicatorItem, List[str]] = None, scale: Union[ReplicatorItem, List[str]] = None, texture_group: Union[ReplicatorItem, List[str]] = None, diffuse: Union[ReplicatorItem, List[str]] = None, normal: Union[ReplicatorItem, List[str]] = None, roughness: Union[ReplicatorItem, List[str]] = None, metallic: Union[ReplicatorItem, List[str]] = None, input_prims: Union[ReplicatorItem, List[str]] = None, name: Optional[str] = None) Node

Modify values on a projection and update the transform via updates to the proxy prim.

The proxy prims’ transforms can be modified outside this function and then this function can be used to update the projection position, scale, and rotation if not manually provided.

- Parameters

position – Manually update the position of the projection. This overrides the position from the proxy.

rotation – Manually update the rotation of the projection. This overrides the rotation from the proxy.

scale – Manually update the scale of the projection. This overrides the scale from the proxy.

texture_group – Update the diffuse, normal, roughness, and/or metallic texture simultaneously. Use where there are diffuse, normal, roughness, and/or metallic textures in a set. If using this arg, the diffuse, normal, roughness and/or metallic args should be set to the suffix used to denote each type.

diffuse – Update the diffuse texture used on the projection material. Will not change if not provided.

normal – Update the normal texture used on the projection material. Will not change if not provided.

roughness – Update the roughness texture used on the projection material. Will not change if not provided.

metallic – Update the metallic texture used on the projection material. Will not change if not provided.

input_prims – The projection prim to modify. If using with syntax, this argument can be omitted.

name (optional) – A name for the graph node.

Example

>>> import omni.replicator.core as rep
>>> import os
>>> torus = rep.create.torus()
>>> cube = rep.create.cube(position=(50, 100, 0), rotation=(0, 0, 90), scale=(0.2, 0.2, 0.2))
>>> sem = [('class', 'shape')]
>>> with torus:
...     projection = rep.create.projection_material(cube, sem)
>>> with projection:
...     rep.modify.projection_material(diffuse=os.path.join(rep.example.TEXTURES_DIR, "smiley_albedo.png"))
omni.replicator.core.modify.projection_material

- omni.replicator.core.modify.semantics(semantics: List[Union[str, Tuple[str, str]]] = None, input_prims: Union[ReplicatorItem, List[str]] = None, mode: str = 'add') ReplicatorItem

Add semantics to the target prims.

- Parameters

semantics – TYPE,VALUE pairs of semantic labels to include on the prim. (Ex: ('class', 'sphere'))

input_prims – The prims to be modified. If using with syntax, this argument can be omitted.

mode – Semantics modification mode. Select from [add, replace, clear]. In add mode, semantic labels are added to the prim, and labels with the same TYPE:VALUE are skipped (e.g. class:car, class:sedan -> class:car, class:sedan, class:automobile). In replace mode, the semantics VALUE specified replaces any existing value of the same semantic TYPE (e.g. class:car, class:sedan, subclass:emergency -> class:automobile, subclass:emergency). In clear mode, ALL existing semantics are cleared before adding the specified semantics (e.g. class:car, subclass:emergency, region:usa -> class:automobile).

Example

>>> import omni.replicator.core as rep
>>> with rep.create.sphere():
...     rep.modify.semantics([("class", "sphere")])
omni.replicator.core.modify.semantics
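The three mode values can be modelled on plain lists of (TYPE, VALUE) tuples. The sketch below reproduces the examples given for the mode parameter. It is a plain-Python illustration, not Replicator's implementation. apply_semantics is a hypothetical helper, and the ordering of the returned labels is an assumption.

```python
from typing import List, Tuple

Label = Tuple[str, str]  # (TYPE, VALUE)


def _unique(labels: List[Label]) -> List[Label]:
    """Drop duplicate labels while keeping first-seen order."""
    seen: List[Label] = []
    for label in labels:
        if label not in seen:
            seen.append(label)
    return seen


def apply_semantics(existing: List[Label], new: List[Label],
                    mode: str = "add") -> List[Label]:
    """Hypothetical model of the add, replace, and clear modes."""
    if mode == "add":
        # Keep everything; identical TYPE:VALUE pairs are skipped.
        return _unique(list(existing) + list(new))
    if mode == "replace":
        # Drop existing labels whose TYPE is being specified again.
        replaced_types = {sem_type for sem_type, _ in new}
        kept = [label for label in existing if label[0] not in replaced_types]
        return _unique(kept + list(new))
    if mode == "clear":
        # Discard all existing labels before adding the new ones.
        return _unique(list(new))
    raise ValueError(f"unknown mode: {mode!r}")


existing = [("class", "car"), ("class", "sedan"), ("subclass", "emergency")]
print(apply_semantics(existing, [("class", "automobile")], mode="replace"))
# [('subclass', 'emergency'), ('class', 'automobile')]
```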

- omni.replicator.core.modify.variant(name: str, value: Union[List[str], ReplicatorItem], input_prims: Union[ReplicatorItem, List[str]] = None) ReplicatorItem

Modify the variant of the prims specified in input_prims.

- Parameters

name – The name of the variant set to modify.

value – The value to set the variant to.

input_prims – The prims to be modified. If using with syntax, this argument can be omitted.

Example

>>> import os
>>> import omni.replicator.core as rep
>>> sphere = rep.create.from_usd(os.path.join(rep.example.ASSETS_DIR, "variant.usd"))
>>> with rep.trigger.on_frame(max_execs=10):
...     with sphere:
...         rep.modify.variant("colorVariant", rep.distribution.choice(["red", "green", "blue"]))
omni.replicator.core.modify.variant

- omni.replicator.core.modify.visibility(value: Union[ReplicatorItem, List[bool], bool] = None, input_prims: Union[ReplicatorItem, List[str]] = None, name: Optional[str] = None) ReplicatorItem

Modify the visibility of prims.

- Parameters

value – True or False, a list of bools (one for each prim to be modified), or a Replicator distribution.

input_prims – The prims to be modified. If using with syntax, this argument can be omitted.

name (optional) – A name for the graph node.

Example

>>> import omni.replicator.core as rep
>>> sphere = rep.create.sphere(position=(100, 0, 100))
>>> with sphere:
...     rep.modify.visibility(False)
omni.replicator.core.modify.visibility
>>> with rep.trigger.on_frame(max_execs=10):
...     with sphere:
...         rep.modify.visibility(rep.distribution.sequence([True, False]))
omni.replicator.core.modify.visibility
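The second example toggles visibility on each triggered frame. The sketch below shows the per-frame values such a sequence would yield, assuming the sequence restarts from the beginning once its items are exhausted. It is a plain-Python illustration, and visibility_per_frame is a hypothetical helper, not part of Replicator.

```python
from itertools import cycle, islice
from typing import List


def visibility_per_frame(values: List[bool], frame_count: int) -> List[bool]:
    """Repeat values in order, wrapping around, for frame_count frames.

    Assumption: the sequence loops once it reaches its last item.
    """
    return list(islice(cycle(values), frame_count))


print(visibility_per_frame([True, False], 4))  # [True, False, True, False]
```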

- omni.replicator.core.modify.register(fn: Callable[[...], Union[ReplicatorItem, Node]], override: bool = True, fn_name: Optional[str] = None) None

Register a new function under omni.replicator.core.modify. Extend the default capabilities of omni.replicator.core.modify by registering new functionality. New functions must return a ReplicatorItem or an OmniGraph node.

- Parameters

fn – A function that returns a ReplicatorItem or an OmniGraph node.

override – If True, will override existing functions of the same name. If False, an error is raised.

fn_name – Optional, specify the registration name. If not specified, the function name is used. fn_name must contain only letters (a-z), numbers (0-9), or underscores (_), and cannot start with a number or contain any spaces.

Time

- omni.replicator.core.modify.time(value: Union[float, ReplicatorItem]) ReplicatorItem

Set the timeline time value (in seconds).

- Parameters

value – The value to set the time to.

Example

>>> import omni.replicator.core as rep
>>> with rep.trigger.on_frame(max_execs=10):
...     rep.modify.time(rep.distribution.uniform(0, 500))
omni.replicator.core.modify.time