Rendering Basics

Selecting a Renderer

In Apps based on Omniverse Kit, there is usually an option to select between the following renderers:

RTX - Real-Time

RTX – Interactive (Path Tracing)

RTX – Accurate (Iray)

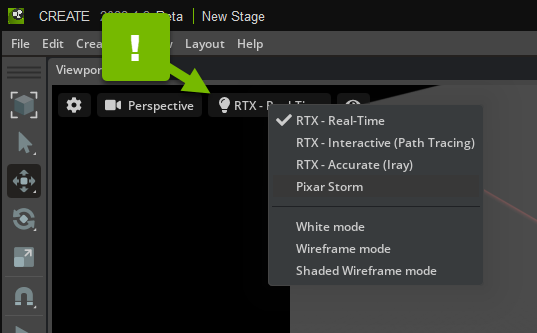

In Omniverse USD Composer, for example, this is available as a drop-down menu in the Viewport:

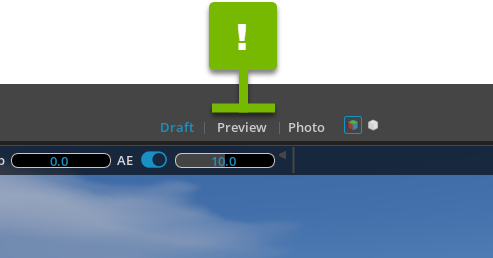

In Omniverse USD Presenter, you can select between Draft, Preview, and Photo modes.

Draft uses the RTX - Real-Time renderer, while Preview and Photo use the RTX – Interactive (Path Tracing) renderer. Each mode adjusts the renderer’s settings to deliver the quality level expected for that experience.

Render Settings

Refer to Render Settings Overview for details.

Multi-GPU Rendering

Omniverse RTX Renderer supports multi-GPU rendering.

To enable it, you must have a system with multiple NVIDIA RTX-enabled GPUs. While the GPUs do not need to be identical, uneven GPU memory capacity and performance will result in suboptimal utilization.

When you use GPUs with different memory capacities, the GPU with the lowest capacity becomes a bottleneck: you cannot load a scene that would fit in the memory of the higher-capacity GPUs. In such cases, you may have to remove or disable the low-capacity GPU. Similarly, a GPU with lower performance holds back the other GPUs. This will be addressed in a future release with an automatic load-balancing algorithm.

Note

SLI mode must be globally disabled in the NVIDIA control panel for multi-GPU rendering to work, because in SLI mode only a single GPU is used. This will be addressed in future releases.

To enable multi-GPU, launch Omniverse Kit with the following argument:

`--/renderer/multiGpu/enabled=true`

To limit the number of GPUs used, add the following argument, replacing `<count>` with the maximum number of GPUs:

`--/renderer/multiGpu/maxGpuCount=<count>`
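If you launch your App from a script, the multi-GPU arguments can be assembled programmatically. The Python sketch below is hypothetical: `kit` and `my_app.kit` are placeholders for your App's actual launcher and configuration, and passing the GPU count as `=<count>` assumes `maxGpuCount` uses the same `--/path=value` form as the `enabled` setting.

```python
from __future__ import annotations

import shlex


def multi_gpu_args(max_gpu_count: int | None = None) -> list[str]:
    """Build Kit command-line settings that enable multi-GPU rendering.

    Assumption: maxGpuCount takes an integer in the same
    `--/path/to/setting=value` form as the enabled flag.
    """
    args = ["--/renderer/multiGpu/enabled=true"]
    if max_gpu_count is not None:
        if max_gpu_count < 1:
            raise ValueError("max_gpu_count must be at least 1")
        args.append(f"--/renderer/multiGpu/maxGpuCount={max_gpu_count}")
    return args


# "kit" and "my_app.kit" are hypothetical placeholders; substitute your
# App's real executable and .kit file before running the command.
command = ["kit", "my_app.kit", *multi_gpu_args(max_gpu_count=2)]
print(shlex.join(command))
```

Passing the list form of `command` to `subprocess.run` would avoid shell quoting issues; `shlex.join` is used here only to print a copy-pasteable command line.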

Note

GPU devices that don’t support ray tracing are skipped automatically.

Multi-GPU Rendering with RTX – Interactive (Path Tracing)

Refer to multi-GPU for path tracing for details.

Note

Automatic load balancing for path tracing isn’t available yet but is coming soon. For now, maximize the utilization of multiple GPUs by monitoring per-GPU utilization with `nvidia-smi dmon` or the utilization graphs in the NVIDIA control panel, and manually adjusting the GPU 0 Weight.

GPU 0 Weight controls how many pixels are assigned to the first GPU, called GPU 0. All other GPUs have a weight of one, and RTX – Interactive (Path Tracing) distributes pixels across the GPUs in proportion to their weights. So, if GPU 0’s weight is one, like all other GPUs, pixels are distributed evenly across the GPUs; a lower weight assigns proportionately fewer pixels to GPU 0.
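Read as a strictly proportional split, the weighting works out as follows. The symbols are introduced here for illustration: $N$ is the number of GPUs, $w_0$ is the GPU 0 Weight, every other GPU has weight 1, and $f_i$ is the fraction of pixels assigned to GPU $i$.

$$
f_0 = \frac{w_0}{w_0 + (N - 1)}, \qquad f_i = \frac{1}{w_0 + (N - 1)} \quad (i = 1, \dots, N - 1)
$$

With $w_0 = 1$, every GPU receives $f_i = 1/N$. For $N = 4$ and $w_0 = 0.8$:

$$
f_0 = \frac{0.8}{3.8} \approx 0.211, \qquad f_1 = f_2 = f_3 = \frac{1}{3.8} \approx 0.263
$$

so GPU 0 renders about 21% of the pixels instead of 25%.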

Aside from rendering pixels, GPU 0 also performs other tasks, such as:

Sample aggregation

Denoising

Post processing

UI rendering

To get the most out of your GPUs, set a lower weight for GPU 0 so that it has capacity left for these tasks. A GPU 0 weight between 0.65 and 0.8 seems to provide optimal utilization.
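To get a feel for what the suggested 0.65 to 0.8 range means in practice, the Python sketch below estimates how many pixels of a frame each GPU renders. It assumes a strictly proportional split in which GPU 0 has the given weight and every other GPU has weight 1; the renderer's actual tiling and rounding may differ, and the function names are hypothetical.

```python
from __future__ import annotations


def pixel_fractions(gpu0_weight: float, gpu_count: int) -> list[float]:
    """Fraction of the frame's pixels each GPU renders.

    Assumes GPU 0 has weight `gpu0_weight`, every other GPU has weight 1.0,
    and pixels are split strictly in proportion to the weights.
    """
    if gpu_count < 1:
        raise ValueError("gpu_count must be at least 1")
    if gpu0_weight <= 0:
        raise ValueError("gpu0_weight must be positive")
    weights = [gpu0_weight] + [1.0] * (gpu_count - 1)
    total = sum(weights)
    return [w / total for w in weights]


def pixels_per_gpu(gpu0_weight: float, gpu_count: int, width: int, height: int) -> list[int]:
    """Whole-pixel counts per GPU; any rounding remainder goes to the last GPU."""
    total_pixels = width * height
    counts = [int(total_pixels * f) for f in pixel_fractions(gpu0_weight, gpu_count)]
    counts[-1] += total_pixels - sum(counts)
    return counts


# Compare an even split with the suggested GPU 0 weights on a 4-GPU system.
for weight in (1.0, 0.8, 0.65):
    counts = pixels_per_gpu(weight, gpu_count=4, width=1920, height=1080)
    print(f"GPU 0 weight {weight}: {counts}")
```

Lowering the weight shifts pixels from GPU 0 to the other GPUs, leaving GPU 0 time for aggregation, denoising, post processing, and UI rendering.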

In addition to adjusting the GPU 0 Weight, you can increase the number of samples per pixel per frame, either in the Path Tracing settings or with the `--/rtx/pathtracing/spp` argument.

Multi-GPU Rendering with RTX - Real-Time

Refer to multi-GPU for real time for details.

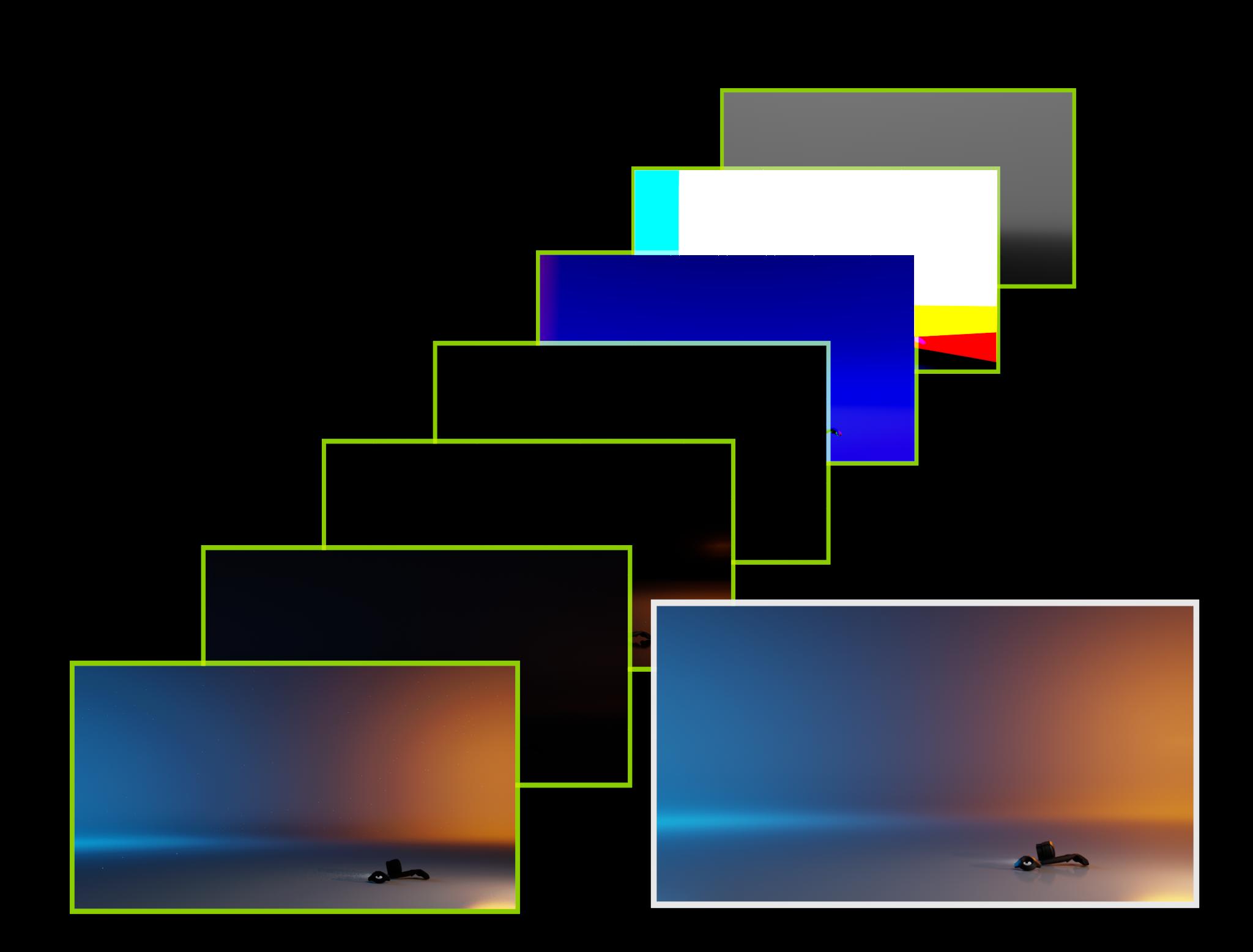

AOVs

Arbitrary Output Variables (AOVs) are data known to the renderer and used to compute illumination. Typically, AOVs contain decomposed lighting information such as:

Direct and indirect illumination

Reflections and refractions

Objects with self-illumination

But they can also contain geometric and scene information, such as:

Surface position in space

Orientation of normals

Depth from the camera

As the final image is computed, the intermediate information used during rendering can optionally be written to disk. These extra images can be hugely beneficial: they provide additional opportunities to modify the final image during compositing and additional insight through 2D analysis. The auxiliary images, called “passes” in Omniverse and “Render Products” in USD, are simply named outputs. The AOV data used by the renderer is referred to as a “Render Variable”, which defines what is written for each pass.
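One common way passes are used in compositing is to recombine decomposed lighting passes, scaling individual components without re-rendering. The Python sketch below assumes the lighting passes are additive components of the final image, which is typical for decomposed lighting AOVs but depends on the renderer and the pass set; the pass names and the tiny single-channel nested-list images are hypothetical, chosen for brevity rather than matching Omniverse's pass names or file formats.

```python
from __future__ import annotations


def recombine(passes: dict[str, list[list[float]]],
              gains: dict[str, float] | None = None) -> list[list[float]]:
    """Sum additive lighting passes into a final image, scaling each by a gain.

    Assumes every pass is a single-channel, row-major image of the same size
    and that the passes add up to the final image. Passes missing from
    `gains` are added with a gain of 1.0.
    """
    if not passes:
        raise ValueError("at least one pass is required")
    gains = gains or {}
    first = next(iter(passes.values()))
    height, width = len(first), len(first[0])
    out = [[0.0] * width for _ in range(height)]
    for name, image in passes.items():
        if len(image) != height or any(len(row) != width for row in image):
            raise ValueError(f"pass {name!r} has a different resolution")
        gain = gains.get(name, 1.0)
        for y, row in enumerate(image):
            for x, value in enumerate(row):
                out[y][x] += gain * value
    return out


# Hypothetical 1x2 passes; the names are illustrative only.
passes = {
    "direct": [[0.2, 0.4]],
    "indirect": [[0.1, 0.1]],
    "reflection": [[0.2, 0.0]],
}
final = recombine(passes)
# Tone down reflections during compositing without re-rendering.
graded = recombine(passes, gains={"reflection": 0.5})
print(final, graded)
```

In a production pipeline the same idea is usually applied to multi-channel images in a compositing tool; the per-pixel arithmetic is unchanged.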

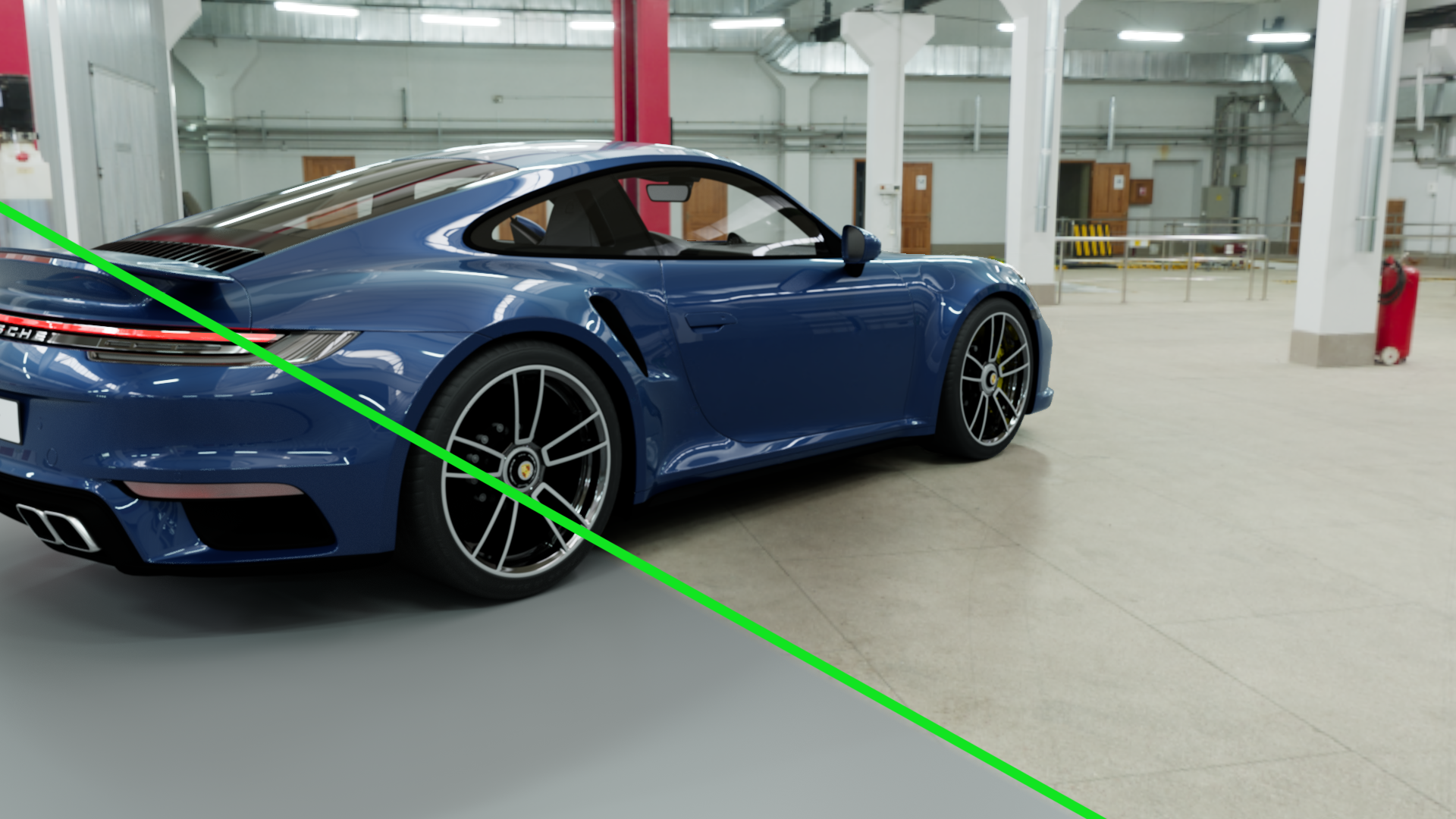

Matte Objects

A Matte object is an invisible object that still receives shadows and secondary illumination effects such as reflections or global illumination. This effect is helpful for rendering shadows and reflections into your scene when using a backplate or dome light.