WRAPP Introduction

Overview

WRAPP provides a command-line tool that helps with decentralized packaging and publishing of assets stored in S3 buckets, on Nucleus servers, in file systems, or in any storage available through a service implementing the Storage API. It encourages a structured workflow for defining the content of an asset package and provides methods to publish and consume those packages in a version-safe manner. WRAPP does not require a wholesale shift of your workflow; instead, it helps you migrate to a structured, version-managed workflow.

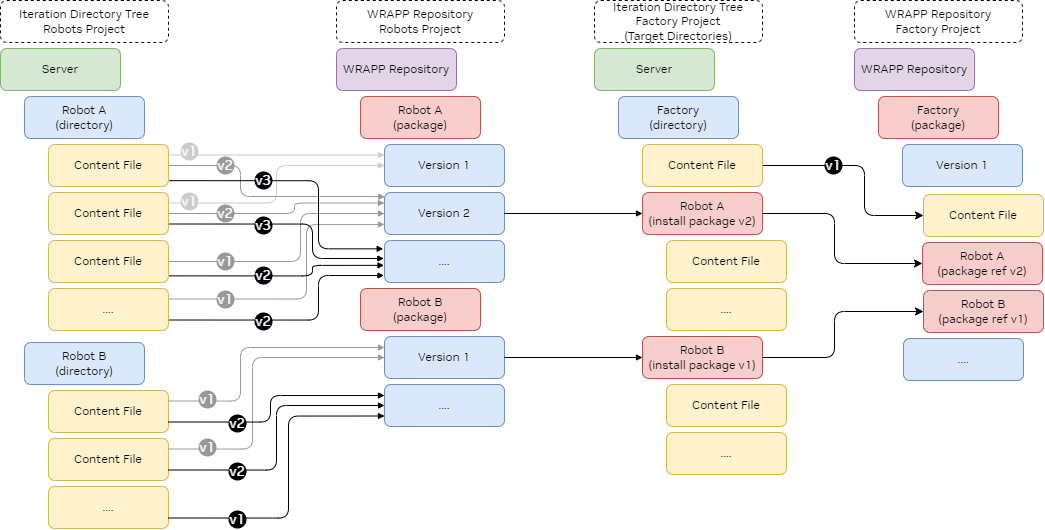

The diagram above illustrates the data flow with generic content files using WRAPP. When a new package is created, its specific content is stored within that package version in the repository. The package can then be installed in new locations as needed.

Key Points and Assumptions

WRAPP does not force you into a predefined directory structure; you define the package structure yourself.

WRAPP does not modify your files or the reference paths in your files. If you want WRAPP to manage the versions of your references, it assumes that all references are relative and stay within the cataloged directory.
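Because WRAPP leaves reference paths untouched, it can help to check references yourself before cataloging. The following is a minimal sketch, not part of WRAPP: the function name `is_contained_relative_reference` and the file names in the usage note are hypothetical, and it assumes POSIX-style paths given relative to the root of the cataloged directory.

```python
from pathlib import PurePosixPath


def is_contained_relative_reference(reference: str, referencing_file: str) -> bool:
    """Return True if `reference` is relative and stays inside the cataloged directory.

    `referencing_file` is the path of the file that holds the reference,
    relative to the root of the cataloged directory (assumed convention).
    """
    ref = PurePosixPath(reference)
    # Absolute paths and URLs (e.g. "s3://...") point outside the package.
    if ref.is_absolute() or "://" in reference:
        return False

    # Resolve the reference starting from the directory of the referencing file.
    current = list(PurePosixPath(referencing_file).parent.parts)
    for part in ref.parts:
        if part == "..":
            if not current:
                return False  # climbs above the cataloged directory
            current.pop()
        elif part != ".":
            current.append(part)
    return True
```

For example, a reference `../textures/wood.png` inside `scenes/main.usd` stays within the cataloged directory, while `../../other/wood.png` from the same file escapes it.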

WRAPP allows for multiple repositories on a single server or across multiple Nucleus servers or S3 buckets.

WRAPP allows you to aggregate packages from multiple repositories into “target” directories, and even allows for package dependencies across repositories.

WRAPP currently supports repositories on S3 buckets, local file systems, Nucleus servers and any storage available through a service implementing the Storage API.

Running a WRAPP write operation concurrently with any other WRAPP operation on the same data leads to undefined behaviour.
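If you drive WRAPP from your own scripts, one way to avoid overlapping operations is to serialize them with a lock file. The sketch below is an assumption about how such a guard could look, not a WRAPP feature: `exclusive_lock` is a hypothetical helper, and it only coordinates processes that agree on the same lock path on a shared file system. It does not protect against operations started from other hosts, and a crashed process can leave a stale lock file behind.

```python
import os
from contextlib import contextmanager


@contextmanager
def exclusive_lock(lock_path: str):
    """Hold an exclusive lock file for the duration of the `with` block.

    Raises FileExistsError if another holder already created the lock file.
    """
    # O_CREAT | O_EXCL makes creation fail atomically if the file exists.
    fd = os.open(lock_path, os.O_CREAT | os.O_EXCL | os.O_WRONLY)
    try:
        yield
    finally:
        # Release the lock so that the next operation can proceed.
        os.close(fd)
        os.remove(lock_path)
```

A script would wrap each write operation in `with exclusive_lock(path):` and treat `FileExistsError` as a signal that another operation is still running.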